We just wrapped up the Sept 14, 2023 Computer Vision Meetup, and if you missed it or want to revisit it, here’s a recap! In this blog post, you’ll find playback recordings, highlights from the presentations and Q&A, and the upcoming Meetup schedule so that you can join us at a future event.

First, Thanks for Voting for Your Favorite Charity!

In lieu of swag, we gave Meetup attendees the opportunity to help guide a $200 donation to charitable causes. The charity that received the most votes this month was Drink Local Drink Tap, an international non-profit focused on solving water equity and quality issues through education, advocacy, and access to affordable, safe, clean water sources. We are sending this event’s $200 donation to Drink Local Drink Tap on behalf of the computer vision community!

Missed the Meetup? No problem. Here are playbacks and talk abstracts from the event.

ARMBench: An Object-Centric Benchmark Dataset for Robotic Manipulation

The Amazon Robotic Manipulation Benchmark (ARMBench) is a large-scale, object-centric benchmark dataset for robotic manipulation in a warehouse setting. ARMBench contains images, videos, and metadata corresponding to 235K+ pick-and-place activities on 190K+ unique objects. The data is captured from a robotic workcell in an Amazon warehouse at different stages of manipulation: pre-pick, during transfer, and after placement. Building on its high-quality annotations, ARMBench proposes benchmark tasks and presents baseline performance on three visual perception challenges: 1) object segmentation in clutter, 2) object identification, and 3) defect detection.

Fan Wang is a Research Scientist at Amazon Robotics, with a focus on robotic manipulation and perception. She holds a Ph.D. in electrical and computer engineering from Duke University. Her undergraduate degree was in electrical and mechanical engineering from the University of Edinburgh, UK.

Chaitanya Mitash is an Applied Scientist at Amazon Robotics. His research focuses on computer vision and robotic manipulation of items. He received his Ph.D. in computer science from Rutgers University.

Q&A

- How do you identify pseudo-defective products that have minor damage?

- What is the size of the ARMBench dataset?

- How much effort do you put into the data collection conditions? For example, do you optimize the lighting, camera angles, etc.?

- How do you gather more data on edge cases?

- What is the minimum batch size required for each of the 190K+ unique objects?

- What are the challenges faced during segmentation?


Visualizing Defects in Amazon’s ARMBench Using Embeddings and OpenAI’s CLIP

In this lightning talk, machine learning engineer Allen Lee from Voxel51 gave us a quick tour of Amazon’s recently released ARMBench dataset for training “pick and place” robots. To learn how to create embeddings on the dataset with the FiftyOne Brain and derive interesting insights from them, check out the companion blog post and notebook on GitHub.

From Model to the Edge, Putting Your Model into Production

This talk covers the journey from model training to deployment at the edge, an often neglected yet vital part of putting machine learning into practice. It walks through the essential practices and challenges involved in moving an AI model from a controlled environment onto real-world edge devices.

Joy Timmermans is a Machine Learning Engineer at Secury360, a startup offering a hardware box that turns your security cameras into a perimeter security system with no false detections. He is responsible for model training and the active learning infrastructure, and he manages the labeling team. If you’ve spent time in the FiftyOne Slack, you’ve probably seen him around.

Q&A

- How do you deploy models on HPC/AI supercomputers?

Optimizing Distributed Fine-Tuning Workloads for Stable Diffusion with the Intel Extension for PyTorch on AWS

In this talk, we explore using the Intel Extension for PyTorch to optimize a generative AI vision workload: fine-tuning a Stable Diffusion model in the AWS cloud on 4th Generation Intel Xeon processors. We use optimizations such as Intel Advanced Matrix Extensions (AMX) and BF16/FP32 mixed precision to speed up training. Attendees can expect (1) a technical deep dive into the workload and solution, (2) a brief code walkthrough, (3) the workload setup on AWS, and (4) a short demo.
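BF16 (bfloat16) keeps FP32’s sign bit and 8-bit exponent but only 7 of its 23 mantissa bits, so it covers the same numeric range at lower precision. That trade-off is why it is paired with FP32 in mixed-precision training. The minimal sketch below uses only Python’s standard library to round an FP32 value to BF16 and back, which makes the precision loss concrete. It is an illustration only, not how the Intel Extension for PyTorch or AMX hardware performs the conversion, and the function names are hypothetical.

```python
import struct


def fp32_bits(x: float) -> int:
    """Return the IEEE 754 single-precision (FP32) bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bf16_bits(x: float) -> int:
    """Round an FP32 value to BF16 (round-to-nearest, ties-to-even).

    BF16 keeps the top 16 bits of FP32: 1 sign bit, 8 exponent bits,
    and 7 mantissa bits. NaN handling is omitted for brevity.
    """
    bits = fp32_bits(x)
    # Add 0x7FFF, plus 1 if the lowest kept bit is odd, so that exact
    # ties round toward the even BF16 value.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_to_float(b: int) -> float:
    """Expand a BF16 bit pattern to a float by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


def round_trip(x: float) -> float:
    """Show the value that survives an FP32 -> BF16 -> FP32 round trip."""
    return bf16_to_float(to_bf16_bits(x))


if __name__ == "__main__":
    for value in (1.0, 3.141592653589793, 1.0 + 2**-8):
        print(f"{value!r} -> {round_trip(value)!r}")
```

For example, `round_trip(3.141592653589793)` returns `3.140625`: the magnitude is preserved, but only about two to three significant decimal digits survive. Mixed-precision training typically runs compute-heavy operations in BF16 while keeping precision-sensitive state, such as master weights, in FP32.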

Eduardo Alvarez is a Senior AI Solutions Engineer at Intel and a specialist in applied deep learning and AI solution design. His background includes building software tools for the energy sector, and his primary interests lie in time-series analysis, computer vision, and cloud solutions architecture. Additionally, he is a community leader in data science and ML/AI for geosciences.

Q&A

- Do you have any timing comparison with other CPUs and/or GPUs?

- What percentage speedup does AMX provide for training and for deployment?

Resource links

- GitHub: intel-extension-for-pytorch

- Scaling Transformer Model Performance with Intel AI

- Intel Advanced Matrix Extensions Overview

Join the Computer Vision Meetup!

Computer Vision Meetup membership has grown to almost 6,000 members in just one year! The goal of the Meetups is to bring together communities of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of computer vision and complementary technologies.

Join whichever of our 13 Meetup locations is closest to your time zone.

- Ann Arbor

- Austin

- Bangalore

- Boston

- Chicago

- London

- New York

- Peninsula

- San Francisco

- Seattle

- Silicon Valley

- Singapore

- Toronto

We have exciting speakers already signed up over the next few months! Become a member of the Computer Vision Meetup closest to you, then register for the Zoom.

What’s Next?

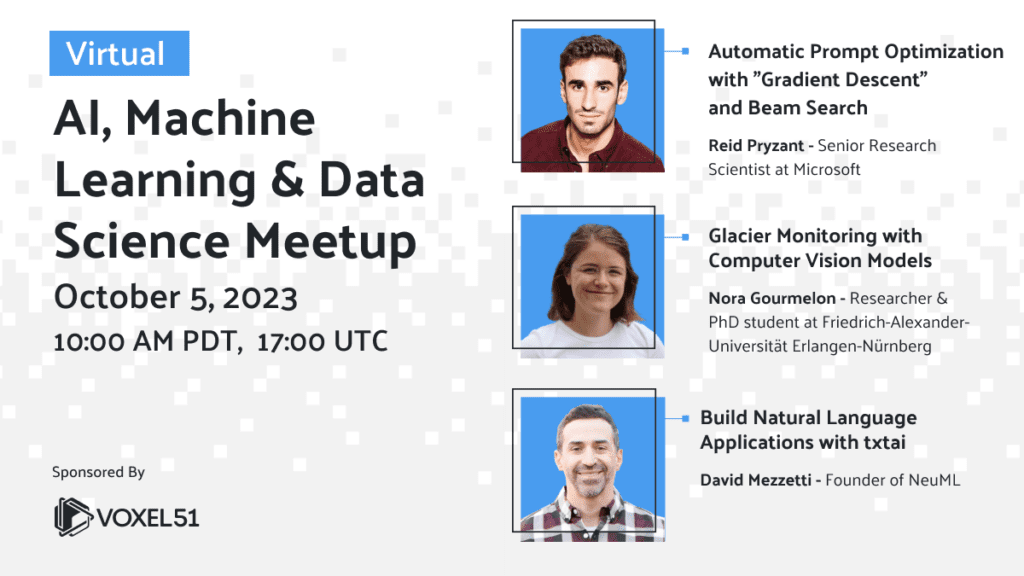

Up next on Oct 5 at 10 AM Pacific, we have a great lineup of speakers, including:

- Automatic Prompt Optimization with “Gradient Descent” and Beam Search – Reid Pryzant, Senior Research Scientist at Microsoft

- Glacier Monitoring with Computer Vision Models – Nora Gourmelon, Researcher and PhD student at Friedrich-Alexander-Universität Erlangen-Nürnberg

- Build Natural Language Applications with txtai – David Mezzetti, Founder of NeuML

Register for the Zoom here. You can find a complete schedule of upcoming Meetups on the Voxel51 Events page.

Get Involved!

There are a lot of ways to get involved in the Computer Vision Meetups. Reach out if you identify with any of these:

- You’d like to speak at an upcoming Meetup

- You have a physical meeting space in one of the Meetup locations and would like to make it available for a Meetup

- You’d like to co-organize a Meetup

- You’d like to co-sponsor a Meetup

Reach out to Meetup co-organizer Jimmy Guerrero on Meetup.com or ping me on LinkedIn to discuss how to get you plugged in.

The Computer Vision Meetup network is sponsored by Voxel51, the company behind the open source FiftyOne computer vision toolset. FiftyOne enables data science teams to improve the performance of their computer vision models by helping them curate high-quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster. It’s easy to get started in just a few minutes.