Welcome to the seventh installment of Voxel51’s industry spotlight series. Each edition highlights how a different industry—from construction to climate tech, medicine to robotics, and more—uses computer vision, machine learning, and artificial intelligence to drive innovation. We’ll dive deep into the main computer vision tasks being used, current and future challenges, and companies at the forefront.

In this edition, we’ll focus on robotics! Read on to learn how AI and computer vision continue to revolutionize the robotics world!

Robotics Industry Overview

Robotics is an exciting and fast-growing field that tackles complex challenges and keeps expanding what is possible in how we live and work. The robotics industry is diverse, spanning applications in manufacturing, healthcare, agriculture, safety, fulfillment centers, and more. By automating tasks, robotic technologies have transformed many sectors, improving both efficiency and safety standards.

Here are some key facts and figures about the robotics industry:

- The global robotics market is expanding rapidly; one report estimates that the market value will reach US$42.82 billion by the end of 2024, grow about 11% year-over-year for the next four years, and reach US$65.59 billion by 2028

- In the same report, service robotics, meaning robots that help people perform tasks or optimize workflows in personal and professional settings, is expected to be the largest segment of the robotics market by volume

- AI in robotics is tracked as a separate market segment, which is reported to grow at nearly 12% per year, from US$19.01 billion in 2024 to US$36.78 billion by 2030

- Regionally, Asia-Pacific is reported to be the largest market for robotics globally, driven by substantial robot adoption
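The growth figures above can be sanity-checked with the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1. The short Python sketch below applies it to the quoted market values; it assumes the AI-in-robotics starting year is 2024, and it only checks the internal consistency of the numbers, not the underlying report's data.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1

# Market values quoted above, in US$ billions (AI-in-robotics start year assumed to be 2024)
robotics_market = implied_cagr(42.82, 65.59, 2028 - 2024)
ai_in_robotics = implied_cagr(19.01, 36.78, 2030 - 2024)

print(f"Robotics market: {robotics_market:.1%} per year")
print(f"AI in robotics:  {ai_in_robotics:.1%} per year")
```

This prints about 11.2% for the overall robotics market and about 11.6% for AI in robotics, so both segments grow at a broadly similar pace.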

Whether automating assembly lines, assisting in surgeries, or performing hazardous tasks, robots have become integral to modern workflows, and their impact will only expand over time. As the industry continues to evolve, several key challenges and trends emerge.

Key Industry Challenges in Robotics

- Safety standards and regulations: As robots are integrated into diverse environments, ensuring human safety while working alongside them is paramount. Compliance with stringent safety standards and regulations becomes crucial to prevent accidents and ensure workplace safety.

- Human-robot interaction: As robots become more collaborative and interactive, designing intuitive interfaces for seamless human-robot interaction becomes essential. Addressing challenges related to ergonomics, communication, and trust between humans and robots is vital for efficient collaboration.

- Cybersecurity risks: With increased connectivity and integration of robotics with IoT and AI systems, cybersecurity threats pose a significant concern. Protecting robotic systems from cyberattacks, data breaches, and unauthorized access becomes imperative to safeguard critical operations and sensitive information.

- Cost: Rapid advances in robotics technology depend on highly sophisticated parts and sensors. Difficulties in sourcing materials and manufacturing these components drive costs up.

- Complexity: The staggering amount of innovation in each component of a modern-day robot has made optimizing the system’s output a formidable challenge. It takes incredible engineering effort to effectively utilize, coordinate, and combine the best sensors, motors, machine learning models, and more.

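- Energy efficiency: Every added sensor, actuator, and onboard processor draws power, so as robots grow more complex and intelligent, keeping their energy consumption within the limits of their batteries or power supply becomes a struggle.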

- Ethical and societal implications: As robots become more pervasive, ethical considerations regarding their impact on employment, privacy, and societal well-being arise. Addressing concerns related to job displacement, data privacy, and ethical use of AI in robotics requires proactive engagement from industry stakeholders and policymakers.

Despite these challenges, the robotics industry continues to thrive, driven by innovation in material science, new sensors, compute resources, AI, and computer vision! Continue reading to learn about how computer vision is transforming robotics.

Applications of Computer Vision in Robotics

Object Detection and Recognition

Robotic arm manipulating a glass bottle. Image source: iStock

One of the fundamental tasks in robotics is to sense and perceive the environment and make decisions based on it. Today, the purest form of this in robotics is object detection. Whether the robot is picking a can of soup from a bin or detecting cancer in a patient’s liver, object detection is at the center of millions of robots’ workflows.

Object recognition can refer to many different types of computer perception in robotics. For example, many industrial robots use standard camera sensors to take images and distinguish between a number of classes in either classical object detection or more advanced segmentation methods. Some robots, such as autonomous navigation systems, go above and beyond this and incorporate lidar and radar sensors into their perception systems to determine the depth and shape of objects.
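Object detectors typically emit several overlapping candidate boxes for the same object, so a common post-processing step is non-maximum suppression (NMS): keep the highest-scoring box and discard others that overlap it heavily, measured by intersection over union (IoU). The pure-Python sketch below illustrates greedy NMS; the (x1, y1, x2, y2) box format, the 0.5 threshold, and the example detections are illustrative assumptions, and production detectors use optimized, often class-aware implementations.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy non-maximum suppression; returns indices of the boxes to keep."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

# Hypothetical detections: two overlapping candidates for one soup can, plus a second can
boxes = [(10, 10, 60, 80), (12, 14, 62, 82), (100, 20, 150, 90)]
scores = [0.92, 0.85, 0.78]
print(nms(boxes, scores))
```

With these inputs, the second box overlaps the first with an IoU of about 0.85, so it is suppressed and the sketch prints `[0, 2]`.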

The combination of computer vision and robots has revolutionized myriad processes, including warehouse fulfillment, medical procedures, car manufacturing, and agriculture.

For further reading on the use of computer vision and AI technologies in robotics, check out these articles:

- The Role of Computer Vision in Robotics: Advancements, Applications, and Future Implications

- Artificial Vision in Robotics: How Robots Track Objects

Additionally, here are a few academic papers:

- Onboard dynamic-object detection and tracking for autonomous robot navigation with RGB-D camera

- DoUnseen: Tuning-Free Class-Adaptive Object Detection of Unseen Objects for Robotic Grasping

- Multi-view Self-supervised Deep Learning for 6D Pose Estimation in the Amazon Picking Challenge

- Deep Residual Learning for Instrument Segmentation in Robotic Surgery

- Real-time Semantic Segmentation of Crop and Weed for Precision Agriculture Robots Leveraging Background Knowledge in CNNs

Environmental Monitoring and Surveillance

Keeping eyes and ears everywhere is impossible in large-scale areas like cities and public gathering spaces. In airports, hospitals, amusement parks, and other settings, robots have recently assumed a crucial role in enhancing safety and ensuring a better experience. Using computer vision, these robots monitor their surroundings to detect anything from potential threats to litter that has been thrown on the ground. The robots can then alert nearby staff to address the issue or sometimes even do it themselves!

Service robots also perform environmental monitoring and surveillance to increase the safety and well-being of the people around them. Examples include cleaning robots like the popular Roomba robot vacuum and UV disinfection robots in hospitals. Many of these robots rely on computer vision techniques to avoid obstacles and identify areas that need cleaning.

Environmental monitoring and surveillance also play a role in hazardous situations. Robots such as Boston Dynamics’ Spot, along with drones, help with disaster relief and management. Thanks to modern robotics and computer vision, these robots can navigate hazardous environments and locate stranded or injured victims so that rescuers can bring them to safety.

Visit these resources for further reading on how computer vision is transforming environmental monitoring efforts:

- The expanding roles of emergency drones in disaster management

- Cleaning robots reduce infections in hospitals and public spaces

Also, check out these academic papers:

- Search and rescue operation using UAVs: A case study

- UV Disinfection Robots: A Review

- Aerial Object Detection for Water-Based Search & Rescue

- FireBot – An Autonomous Surveillance Robot for Fire Prevention, Early Detection and Extinguishing

Visual Inspection and Quality Control

Computer vision has powered visual inspection and quality control in robotics for decades. Across the globe, robots equipped with sophisticated visual systems handle critical inspection tasks, including certifying the quality of manufactured goods and ensuring the integrity of underwater pipes. These resilient robots thrive even in harsh environments and complete inspections at high speed.

Looking for defects could mean finding the most minuscule out-of-place feature on a part. Luckily, computer vision can bring even the hardest-to-find issues to light. Unmanned Underwater Vehicles (UUVs) are a great example of advances in robotics and computer vision coming together: they can detect cracks in ships’ hulls and assess the structural integrity of offshore structures. Inspection robots are also used in car manufacturing to determine whether parts are good to ship, helping reduce the number of recalls!
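One classical approach to visual defect detection, simpler than the deep learning anomaly detectors in the papers below, compares each inspected image with a defect-free "golden" reference and flags pixels that differ by more than a tolerance. The sketch below illustrates the idea on tiny grayscale images stored as nested lists; the tolerance, the minimum defect size, and the assumption that images are already aligned and evenly lit are simplifications, not how any specific inspection robot works.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale intensities, 0-255

def find_defect_pixels(reference: Image, inspected: Image, tolerance: int = 30) -> List[Tuple[int, int]]:
    """Return (row, col) positions where the inspected image deviates from the reference."""
    defects = []
    for r, (ref_row, img_row) in enumerate(zip(reference, inspected)):
        for c, (ref_px, img_px) in enumerate(zip(ref_row, img_row)):
            if abs(ref_px - img_px) > tolerance:
                defects.append((r, c))
    return defects

def is_defective(reference: Image, inspected: Image, tolerance: int = 30, min_pixels: int = 2) -> bool:
    """Flag a part when enough pixels deviate, ignoring isolated sensor noise."""
    return len(find_defect_pixels(reference, inspected, tolerance)) >= min_pixels

golden = [[200] * 5 for _ in range(4)]  # uniform, defect-free surface
part_ok = [row[:] for row in golden]
part_ok[0][0] = 215  # small intensity variation, within tolerance
part_cracked = [row[:] for row in golden]
part_cracked[2][1] = part_cracked[2][2] = part_cracked[2][3] = 60  # dark line, like a crack

print(is_defective(golden, part_ok))             # False
print(is_defective(golden, part_cracked))        # True
print(find_defect_pixels(golden, part_cracked))  # [(2, 1), (2, 2), (2, 3)]
```

Real inspection systems must also handle misalignment, lighting changes, and natural part-to-part variation, which is one reason learned anomaly detection models are attractive.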

For further reading on robot-powered inspections, visit these articles:

- Drone Pipeline Inspections: The Ultimate Beginners Guide

- Underwater Robots for Inspection of Pipelines

In addition, here are a few academic papers related to using computer vision for quality control:

- Anomaly detection for industrial quality assurance: A comparative evaluation of unsupervised deep learning models

- Anomaly Detection in Manufacturing

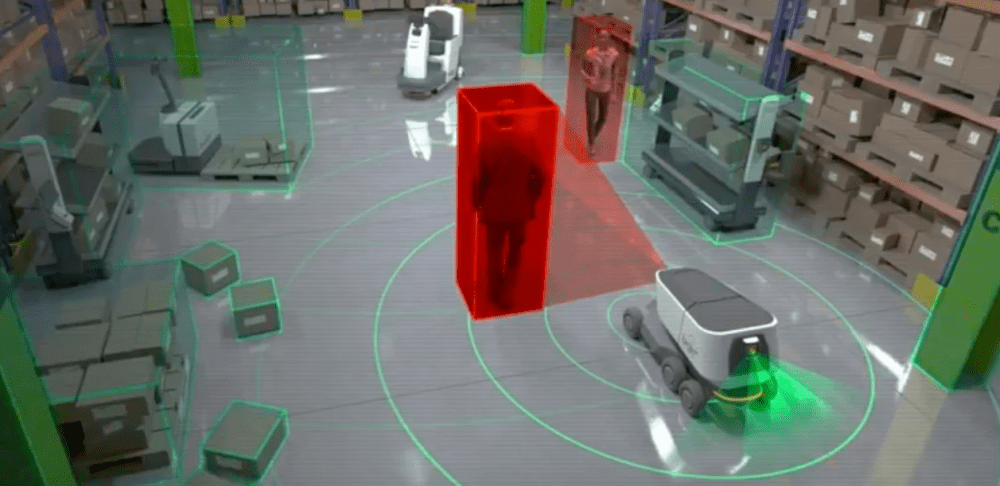

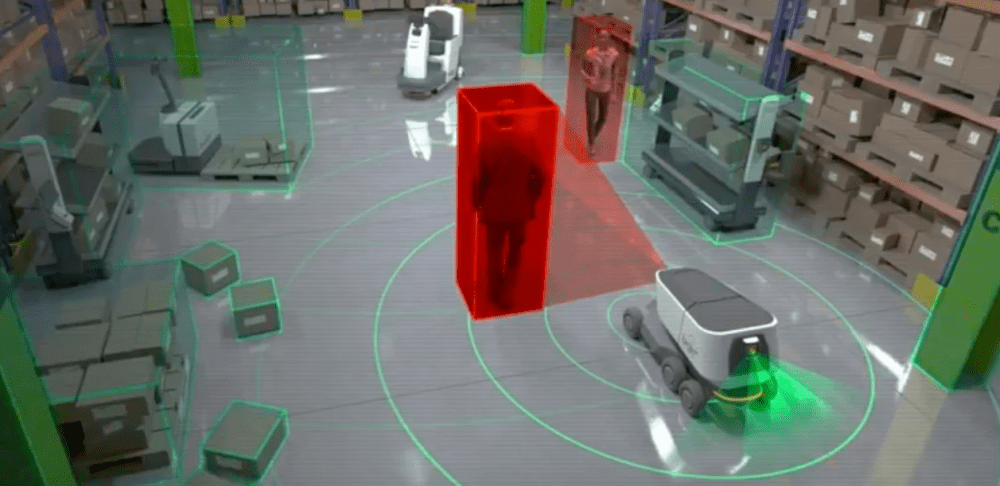

Autonomous Navigation

Autonomous robots, prized for their independence, rely on intricate navigation systems that enable them to sense their environment and make real-time decisions to correct their course. Computer vision is a crucial foundation for autonomous navigation systems, allowing them to perceive, understand, and maneuver through their surroundings.

The environment being scanned by the robot can vary significantly from use case to use case. Fortunately, the computer vision methods are often very similar! Many of these robots use detection models like YOLOv8 or, in earlier systems, EfficientDet, which tend to be chosen because they balance speed and accuracy. Because these robots operate under tight SWaP (size, weight, and power) constraints, they need models efficient enough to run onboard and react quickly to stimuli. More advanced systems incorporate lidar, radar, or both to further enhance the robot’s perception and optimize navigation.
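To see why inference speed matters, consider how far a robot travels before it can react to something it sees. A rough lower bound is its speed multiplied by the perception latency (frame capture plus model inference), before any braking distance is added. The sketch below uses a hypothetical 2 m/s robot and hypothetical latencies chosen for illustration; they are not benchmarks of YOLOv8, EfficientDet, or any particular robot.

```python
def blind_distance_m(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance covered while a single frame is still being processed."""
    return speed_m_per_s * latency_ms / 1000.0

# Hypothetical operating point: a mobile robot moving at 2 m/s
for latency_ms in (20, 50, 200):
    d = blind_distance_m(2.0, latency_ms)
    print(f"{latency_ms:>4} ms latency -> {d:.2f} m traveled before the robot can react")
```

At 2 m/s, a 200 ms pipeline lets the robot cover 0.40 m before it can respond, ten times more than a 20 ms pipeline, which is why fast, compact models are favored on SWaP-constrained platforms.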

For additional insights into autonomous navigation with computer vision, check out these academic papers:

- Autonomous Navigation of mobile robots in factory environment

- Recent Advancements in Deep Learning Applications and Methods for Autonomous Navigation: A Comprehensive Review

- A systematic review on recent advances in autonomous mobile robot navigation

Companies at the Cutting Edge of Computer Vision in Robotics

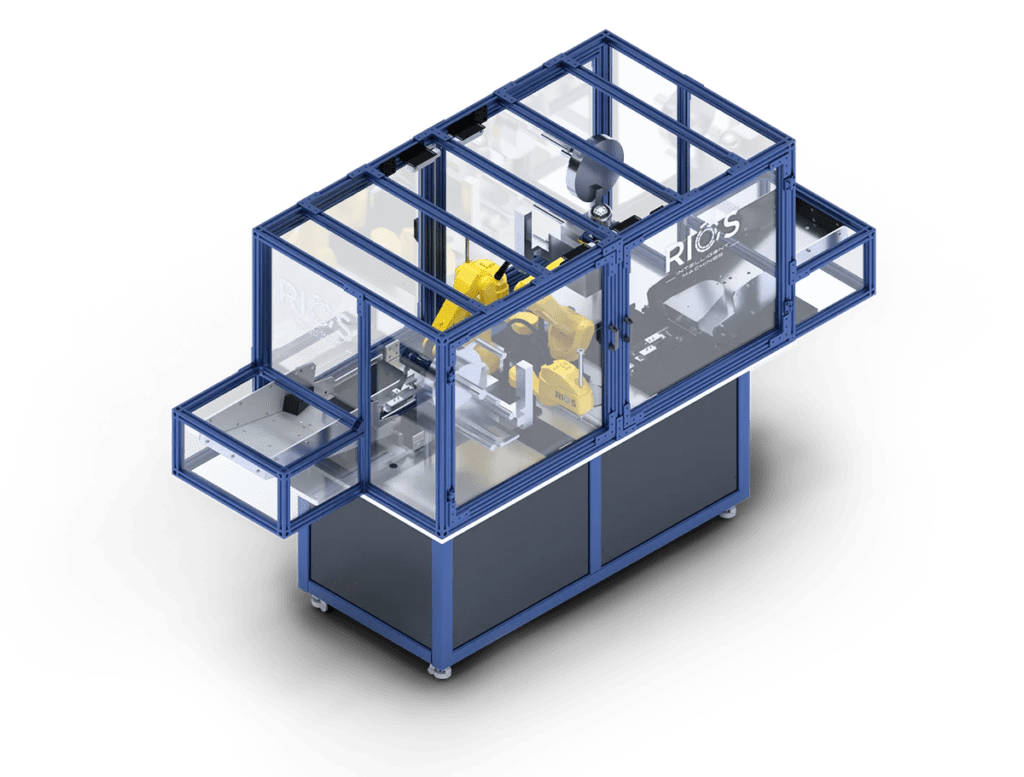

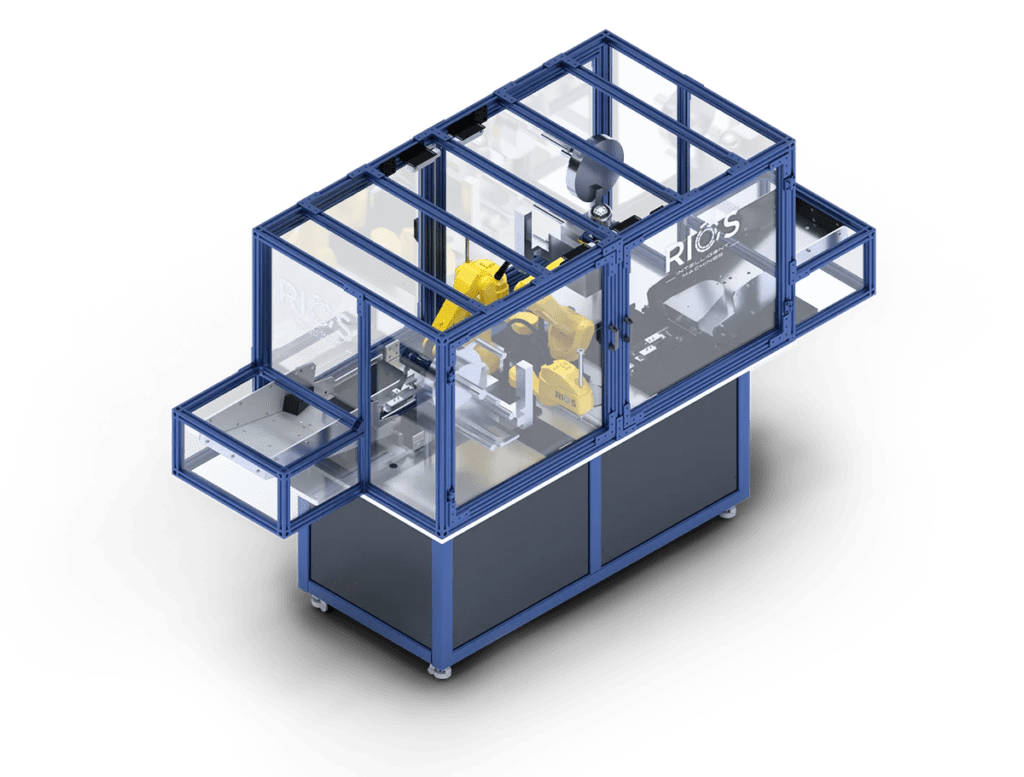

RIOS Intelligent Machines

RIOS Intelligent Machines is a game changer in the automation industry, helping enterprises automate their factories, warehouses, and supply chain operations while increasing production and eliminating defects.

RIOS accomplishes this with two complementary technologies: robust, reliable, and flexible AI-powered robotic solutions that adapt seamlessly to changing production requirements, and AI-powered vision for monitoring operations, performing quality assurance, and handling complex operations that were previously not possible with mechanical robotic automation.

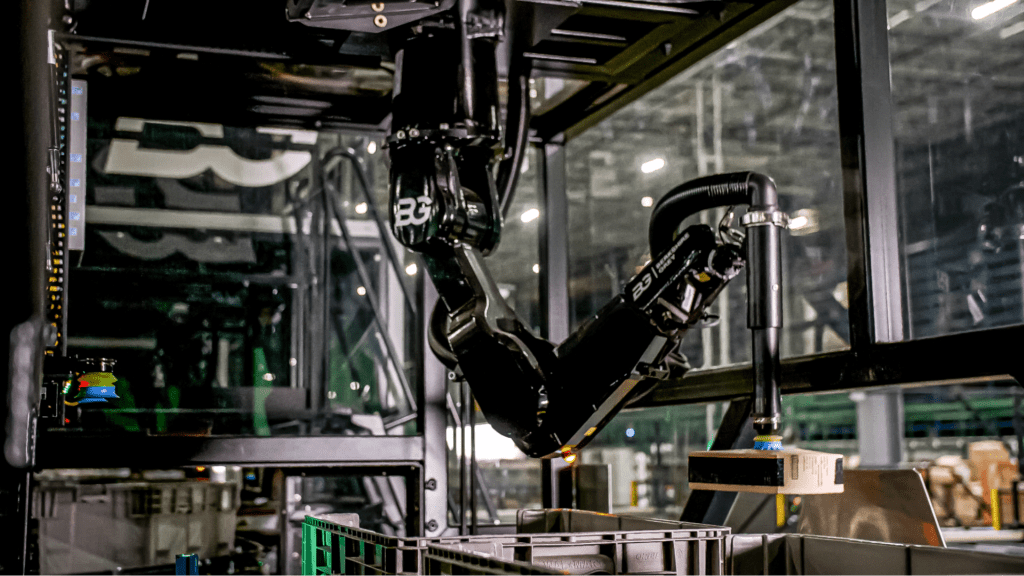

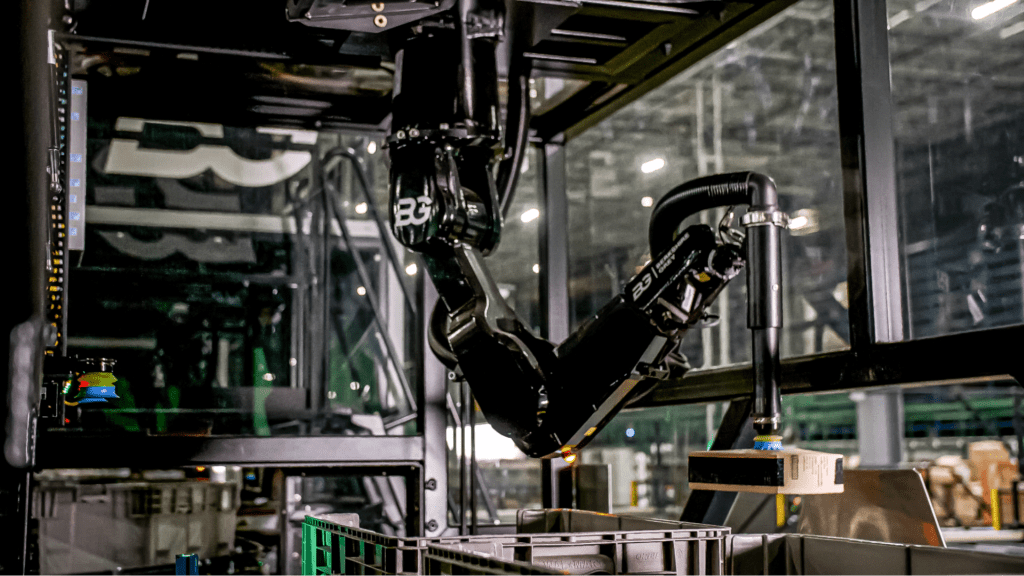

Berkshire Grey

Berkshire Grey (BG) is a formidable force in the logistics industry, offering a fleet of robotic solutions that optimize operations across the supply chain. Berkshire Grey’s excellence shines brightest in how it adapts to its customers: understanding their requirements, offering custom system deployments, and delivering reliable solutions that save time. To achieve this, BG has built a flexible and powerful ecosystem of robots that can work in unison to accomplish the most complex tasks.

Computer vision is an integral part of the Berkshire Grey stack, as the company relies on detection models to aid in tasks such as picking. In its HyperScanner, for example, BG uses high-speed computer vision to detect and sort packages coming down a line. The team deploys models across the computer vision spectrum, adapting and evolving applications to meet customers’ needs.

Bonsai Robotics

Bonsai Robotics is at the cutting edge of agricultural and manufacturing robotics. Bonsai develops vision-based robotic solutions that can autonomously navigate the toughest conditions, including off-road terrain, dust, high-dynamic-range lighting, and obstacles. Growers can rely on Bonsai Robotics to help tend and manage their orchards and fields, all without relying on GPS, cellular, or other connections. For manufacturers, Bonsai provides autonomy-ready hardware kits along with perception, planning, controls, and other autonomy software that can be designed into new vehicle forms or retrofitted to existing machine types.

Bonsai’s system stands out by combining top-of-the-line computer vision techniques, such as detection and segmentation models, with the company’s proprietary SLAM (simultaneous localization and mapping) stack. Combined with powerful path planning algorithms and steering mechanisms, Bonsai-enabled machines handle complex growing tasks without the need for pre-mapping or recording.

Scythe Robotics

Scythe Robotics has created the M.52 autonomous mower, which reacts instantly to grass thickness, preventing and clearing clogs without missing a blade of grass. It is all-electric, giving you power without pollution. Scythe uses technology that enables real-time mapping of the mower’s environment. Through segmentation models and precision localization, the mower can make a pristine cut every time.

Each mower comes equipped with eight HDR cameras and twelve ultrasonic sensors for ultra-reliable perception. The combination of the sensor suite and advanced AI enables Scythe’s M.52 autonomous mower to perform like a human operator, reliably navigating the terrain, including contours and slopes. Additionally, the M.52 uses computer vision to identify and respond to different obstacles. It will go around trees and poles but stop for people and pets. All this leads to safe, highly optimized cutting routines that free up time for employees to perform other valuable tasks that keep properties looking their best.
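The obstacle behavior described above, going around static obstacles but stopping for people and pets, can be expressed as a mapping from detected class to action. The sketch below is a hypothetical illustration of that kind of rule, not Scythe’s implementation; the class names, the 3 m stopping radius, and the action names are all assumptions.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical class groupings, for illustration only
STATIC_OBSTACLES = {"tree", "pole"}
MUST_STOP_FOR = {"person", "dog", "cat"}

@dataclass
class Detection:
    label: str
    distance_m: float

def choose_action(detections: List[Detection], stop_radius_m: float = 3.0) -> str:
    """Pick the most conservative action required by any nearby known obstacle."""
    action = "continue"
    for det in detections:
        if det.distance_m > stop_radius_m:
            continue  # too far away to affect the current maneuver
        if det.label in MUST_STOP_FOR:
            return "stop"  # people and pets always take priority
        if det.label in STATIC_OBSTACLES:
            action = "replan_around"
    return action

print(choose_action([Detection("tree", 2.0)]))                         # replan_around
print(choose_action([Detection("tree", 2.0), Detection("dog", 2.5)]))  # stop
print(choose_action([Detection("person", 10.0)]))                      # continue
```

Returning immediately on a must-stop class makes that rule override everything else; a real system would also need a safe default for unrecognized objects.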

Woven by Toyota

Woven by Toyota is transforming Automated Driving (AD) and Advanced Driver Assistance Systems (ADAS) with innovations across the board. Safety in self-driving cars is paramount. As vehicles navigate the open road, they must safeguard the well-being of everyone, both inside and outside the car. That is why Woven by Toyota has taken every measure to be at the forefront of integrating robust, cutting-edge computer vision technology into its vehicles.

One significant area of focus for Woven by Toyota is its camera sensor technology, which helps vehicles perceive their environment, especially in challenging or adverse weather conditions. The Woven by Toyota team also excels at testing and simulation. Its comprehensive, large-scale driving datasets, along with Woven City, a small-scale futuristic city being built as a test course for mobility, have been key drivers of innovation. After all, high-quality data leads to high-quality results!

Robotics Datasets

If you are interested in exploring applications of computer vision in robotics, check out these datasets:

- Woven by Toyota Perception dataset: A robust driving dataset collected by automated vehicles. Explore the different camera views and point clouds. See it in your browser in FiftyOne, or check out additional details, including licensing information, here.

- North Campus Long-Term (NCLT) dataset: Collected on the University of Michigan’s North Campus, this dataset consists of image and sensor data captured by a Segway robot. See it in your browser in FiftyOne, or find additional details here.

- WeedCrop dataset: A dataset that can help train agricultural robots to water crops and destroy weeds. See it in your browser in FiftyOne, or learn more here.

- ARMBench (Amazon Robotic Manipulation Benchmark) dataset: A state-of-the-art robotic picking dataset that helps identify objects within a container. The dataset is excellent for training logistics robots in warehouses. See it in your browser in FiftyOne, or find additional details here.

If you want to see any of these or other robotic datasets added to the FiftyOne Dataset Zoo, get in touch, and we can work together to make this happen!

Join the FiftyOne Community!

Builders of robotics solutions can benefit from FiftyOne’s ability to quickly filter through the vast amounts of visual data collected daily from robots and other sources. Using open source FiftyOne, AI developers and scientists can curate datasets for model training or share them with colleagues for annotation or analysis of computer vision models.

Join the thousands of engineers and data scientists already using FiftyOne to solve some of the most challenging problems in computer vision today!

- 2,600+ FiftyOne Slack members

- 6,500+ stars on GitHub

- 20,600+ Meetup members

- Used by 500+ repositories

- 90+ contributors

What’s Next?

- See how computer vision impacts agriculture, manufacturing, healthcare, sports, retail, and safety & security in our first six industry spotlight posts.

- If you like what you see on GitHub, give the project a star.

- Get started! We’ve made it easy to get up and running in minutes.

- Join the FiftyOne Slack community. We’re always happy to help.