In just two weeks, ChatGPT has taken a commanding hold of the public consciousness. More than a million people have “conversed” with OpenAI’s new chatbot, asking it to write poems and college essays, generate recipe ideas, build virtual machines, and oh so much more. It’s been used to write the intro for news articles and YouTube videos — the fact that I even need to say that ChatGPT did not write this introduction, by itself, speaks volumes.

Who would have thought that a chatbot would be simultaneously hailed as the next great disruptive technology, and decried as a virus that “has been released into the wild with no concern for the consequences”? And yet, underneath its seemingly intelligent facade, in some ways ChatGPT is frustratingly foolish.

As a machine learning engineer at a computer vision (CV) company (Voxel51), I’ve spent the last few days pushing ChatGPT to its limits to see what it “knows” about CV. I wanted to know what this language model means for the future (and present) of the field. Read on if you too are curious!

Before diving in, a few quick words of caution: first, there are an infinite number of questions one could ask ChatGPT. While I attempted a relatively thorough investigation, you may have asked an entirely orthogonal set of questions. For instance, I only asked for Python code. If you are unsatisfied with my coverage, I encourage you to go to https://chat.openai.com/chat and see for yourself. Second, ChatGPT is a generative model, so there is a degree of randomness inherent in its responses. Often when I would ask the same question multiple times, the results would look slightly different. If you ask these questions, there’s a chance that the answers you receive will also look different! Finally, and perhaps most importantly, this is only one person’s opinion.

This post is broken into six parts:

- What is ChatGPT?

- Where ChatGPT excels

- Where it falters

- Where to exercise extreme caution

- Why ChatGPT empowers CV engineers

- Just for fun

What is ChatGPT?

Released on November 30th, 2022, ChatGPT is OpenAI’s latest product to set the tech world on fire. Like GPT1, GPT2, GPT3, and InstructGPT before it, ChatGPT is a generative pretrained transformer (GPT) model, a type of large language model built with the notion of “self-attention”, which allows the model to flexibly identify which portions of the input — in this case the text from a conversation — are relevant to which other portions.
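To make "self-attention" a little more concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention. The weights are random, and it leaves out several things real GPT models use: multiple heads, trained projections, and the causal mask that lets each token attend only to earlier tokens. The function names are our own. Each output row is a mix of all the value vectors, weighted by how relevant every other token is to that row's token.

```python
import numpy as np


def softmax(x, axis=-1):
    # subtract the row max for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (n_tokens, d_model). Returns the attended outputs and the
    (n_tokens, n_tokens) attention weights.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    # how relevant each token (column) is to each token (row)
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


# Usage with random (untrained) weights
rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 4, 8, 8
X = rng.normal(size=(n_tokens, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out, attn = self_attention(X, W_q, W_k, W_v)
print(out.shape, attn.sum(axis=1))
```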

Large language models (LLMs) are trained on vast amounts of text data, such as books and articles, in order to learn the patterns and structures of human language. This allows them to generate text that sounds natural and human-like, making them useful for tasks like language translation and generating responses to questions.

For the past few years, LLMs have been rapidly growing in popularity. These models have exponentially increased in size: whereas the first transformer model, introduced in 2017, had 65 million parameters, GPT3, released in 2020, had 175 billion parameters. And with their size, so too has their expressive power dramatically increased. ChatGPT was built on top of an updated version of GPT3 called GPT3.5. This enormous expressive capacity, along with the data it was trained on (presumably similar to GPT3), is what enables ChatGPT to be so generally capable, comprehending inputs that include a variety of human and programming languages, mathematical equations, and chord progressions.

As a generative model, ChatGPT is specifically designed to create new text based on the patterns it has learned from the training data. What makes this new model so fascinating is that it was fine-tuned for conversation. Not only does the chatbot give eerily human responses, it is able to integrate user-provided feedback to better accomplish the desired task. Of course, it isn’t perfect, but this functionality opens the door for a range of interactive applications.

FYI: three sentences in this section were written by ChatGPT. I’ll let you guess which ones!

Where ChatGPT excels

From what I can tell, there are three main categories of computer vision applications for which ChatGPT is fairly reliable: commonplace code, dressed individual method calls, and clean concatenations of simple components. ChatGPT’s responses to queries in any of these three categories benefit from being relatively self-contained. I’ll give examples for each.

Commonplace code

This should come as no surprise, but a generative model that was trained on a large corpus, including text and code, is generally very good at generating blocks of code that occur frequently and with little variation across the internet. When a code-based solution is essentially canonical (and likely omnipresent in the training data), ChatGPT’s probabilistic predilections will, with high probability, generate the tried and true result.

Two examples of this include the fast Fourier transform (FFT), which is ubiquitous in signal processing and computer vision (among other fields):

```python
import numpy as np

def fft(x):
    """Compute the discrete Fourier Transform of the 1D array x"""
    x = np.asarray(x, dtype=float)
    N = x.shape[0]
    if N <= 1:  # base case
        return x
    # split the array into even and odd components
    even = fft(x[0::2])
    odd = fft(x[1::2])
    # compute the FFT of the even and odd components
    T = [np.exp(-2j*np.pi*k/N)*odd[k] for k in range(N//2)]
    return np.concatenate([even + T, even - T])
```

and pooling layers, which are staple ingredients in most convolutional neural networks (CNNs):

```python
def min_pooling(inputs, pool_size):
    # Get the dimensions of the input array
    n_rows, n_cols, n_channels = inputs.shape
    # Calculate the size of the output array
    out_rows = n_rows // pool_size
    out_cols = n_cols // pool_size
    # Create an empty array to store the output
    outputs = np.zeros((out_rows, out_cols, n_channels))
    # Iterate over the input array and compute the output
    for r in range(out_rows):
        for c in range(out_cols):
            for k in range(n_channels):
                # Extract a patch of the input array
                patch = inputs[r*pool_size:(r+1)*pool_size, c*pool_size:(c+1)*pool_size, k]
                # Compute the min of the patch
                outputs[r, c, k] = np.min(patch)
    return outputs
```

Of course, these implementations are not always efficient, as in the case of the min_pooling() function above, which uses nested for loops. With semi-black-box AI models like ChatGPT, it isn’t always clear whether a generated solution is intentionally naive for the sake of simplicity and instruction, or whether the model genuinely selected what it believed to be the optimal solution. In scenarios like this, you can use the feedback-oriented nature of ChatGPT to request improvements. In the case of pooling, for instance, ChatGPT can be instructed to parallelize the code or vectorize the for loops. Knowing what improvements to ask for is absolutely essential!
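Here is one example of the kind of improvement worth asking for: a vectorized sketch of min_pooling(). This is our version, not ChatGPT's. Like the loop version, it assumes non-overlapping windows with a stride equal to `pool_size` and drops trailing rows and columns that don't fill a whole window. The trick is to reshape each spatial axis into (blocks, window) and reduce over the window axes.

```python
import numpy as np


def min_pooling_vectorized(inputs, pool_size):
    """Non-overlapping min pooling over an (H, W, C) array without Python loops."""
    n_rows, n_cols, n_channels = inputs.shape
    out_rows, out_cols = n_rows // pool_size, n_cols // pool_size
    # crop to a multiple of pool_size so the reshape below is exact
    cropped = inputs[: out_rows * pool_size, : out_cols * pool_size, :]
    # split each spatial axis into (number of windows, window size)
    windows = cropped.reshape(out_rows, pool_size, out_cols, pool_size, n_channels)
    # take the min over the two within-window axes
    return windows.min(axis=(1, 3))


# Usage
x = np.random.default_rng(0).normal(size=(7, 9, 3))
print(min_pooling_vectorized(x, 2).shape)
```

Swapping `.min` for `.max` or `.mean` gives max or average pooling with the same reshape.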

Dressed method calls

In a similar vein, ChatGPT has a pretty good grasp of all of the common machine learning and computer vision libraries (up until 2021), including PyTorch, TensorFlow, scikit-learn, PIL, scikit-image, and OpenCV. The chatbot is at its best when it can dress up methods from these libraries with the appropriate (boilerplate) preprocessing steps, such as input-output handling, converting a color image to grayscale, and reshaping arrays.

Some examples of this, from simplest to most complex, are sharpening an image:

```python
from PIL import Image, ImageFilter

# Open the image
im = Image.open('image.jpg')

# Apply a sharpening filter
sharpened_im = im.filter(ImageFilter.SHARPEN)

# Save the sharpened image
sharpened_im.save('sharpened_image.jpg')
```

Applying Otsu thresholding:

```python
import numpy as np  # not in ChatGPT's response, but needed for np.mean below
from skimage import io
from skimage.filters import threshold_otsu

# Load the image
image = io.imread('image.png')

# Convert the image to grayscale
gray_image = np.mean(image, axis=2)

# Calculate the Otsu threshold
threshold = threshold_otsu(gray_image)

# Apply the threshold to the image to create a binary image
binary_image = gray_image > threshold
```

And computing the t-distributed stochastic neighbor embedding (t-SNE) for a collection of images:

```python
import numpy as np
from scipy.spatial.distance import pdist, squareform
from sklearn.manifold import TSNE
from PIL import Image

def tsne(images, perplexity=30.0, learning_rate=200.0, n_components=2):
    # Convert the images to grayscale
    grayscale_images = [np.array(image.convert('L')) for image in images]
    # Stack the grayscale images into a single array
    stacked_images = np.stack(grayscale_images)
    # Flatten the stacked images into a single array
    flattened_images = stacked_images.reshape(len(images), -1)
    # Compute the pairwise distances between the flattened images
    distances = squareform(pdist(flattened_images, 'euclidean'))
    # Compute the low-dimensional embedding of the images using t-SNE
    tsne = TSNE(perplexity=perplexity, learning_rate=learning_rate, n_components=n_components, init='random')
    embedding = tsne.fit_transform(distances)
    return embedding
```
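One detail is worth checking in the snippet above. Without `metric="precomputed"`, scikit-learn's `TSNE.fit_transform` treats each row of the distance matrix as a feature vector, not as a set of distances. A minimal variant that declares the matrix as precomputed distances might look like the sketch below. The function name and the small default perplexity are our choices for illustration. scikit-learn does not accept `init="pca"` with precomputed distances, and perplexity must be smaller than the number of images.

```python
import numpy as np
from PIL import Image
from scipy.spatial.distance import pdist, squareform
from sklearn.manifold import TSNE


def tsne_precomputed(images, perplexity=5.0, n_components=2, random_state=0):
    """Embed same-sized PIL images with t-SNE on their pairwise pixel distances."""
    # grayscale, then flatten each image into one row
    flattened = np.stack([np.asarray(im.convert("L"), dtype=float) for im in images])
    flattened = flattened.reshape(len(images), -1)
    # square matrix of pairwise Euclidean distances
    distances = squareform(pdist(flattened, "euclidean"))
    # tell scikit-learn these are distances, not features
    model = TSNE(
        n_components=n_components,
        perplexity=perplexity,
        metric="precomputed",
        init="random",
        random_state=random_state,
    )
    return model.fit_transform(distances)


# Usage with hypothetical random 16x16 RGB images
rng = np.random.default_rng(0)
imgs = [Image.fromarray(rng.integers(0, 256, (16, 16, 3), dtype=np.uint8)) for _ in range(20)]
embedding = tsne_precomputed(imgs)
print(embedding.shape)
```

Passing `flattened` directly with the default Euclidean metric would be an equally valid alternative. Precomputing only pays off if you want a custom distance.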

Unfortunately, ChatGPT is neither adept at nor even conversant with newer CV libraries like FiftyOne.

Concatenating simple components

While ChatGPT can definitely behave irrationally, the model has basic logical capabilities that enable it to synthesize multiple (simple) inputs into composite outputs. To see this, just ask ChatGPT a few questions of the form

“What do X and Y have in common?”

As an example of this, I asked ChatGPT to do the following:

“Write code to resize two images to (100,200), augment the dataset with 6 more images, blend all the input images into one, then plot all the images in a grid. Write the text of this prompt on the third plot”

Its solution was acceptable, effectively without modification:

```python
import matplotlib.pyplot as plt
from skimage import io, transform
from skimage.util import random_noise

# load and resize images
img1 = io.imread("image1.png")
img1 = transform.resize(img1, (100, 200))
img2 = io.imread("image2.png")
img2 = transform.resize(img2, (100, 200))

# augment dataset with 6 additional images
augmented_imgs = []
for i in range(6):
    augmented_imgs.append(random_noise(img1))
    augmented_imgs.append(random_noise(img2))

# blend images
blended_img = img1 * 0.5 + img2 * 0.5
for img in augmented_imgs:
    blended_img += img * 0.5 / len(augmented_imgs)

# plot all images in a grid
fig, ax = plt.subplots(nrows=3, ncols=3)
ax[0, 0].imshow(img1)
ax[0, 1].imshow(img2)
ax[0, 2].imshow(blended_img)
for i in range(6):
    ax[1 + i // 3, i % 3].imshow(augmented_imgs[i])

# add text to third plot
ax[0, 2].set_title("Resized, augmented, and blended images")
```

Surprising successes

Other tasks it was able to navigate without issue include:

- Performing segmentation on 3D point clouds (it used DBSCAN)

- Using DeOldify to restore color in old images

- Performing object tracking in videos
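ChatGPT's point cloud code isn't reproduced here, but the core idea of DBSCAN-based segmentation fits in a few lines of scikit-learn. The sketch below is our own and runs on a synthetic two-blob cloud. `eps` (the neighborhood radius, in the cloud's units) and `min_samples` are illustrative values that would need tuning for real scans.

```python
import numpy as np
from sklearn.cluster import DBSCAN


def segment_point_cloud(points, eps=0.5, min_samples=10):
    """Assign each 3D point a cluster id; DBSCAN labels noise points -1."""
    return DBSCAN(eps=eps, min_samples=min_samples).fit_predict(points)


# Usage: two well-separated synthetic blobs standing in for two objects
rng = np.random.default_rng(0)
blob_a = rng.normal(loc=(0.0, 0.0, 0.0), scale=0.05, size=(200, 3))
blob_b = rng.normal(loc=(5.0, 5.0, 5.0), scale=0.05, size=(200, 3))
cloud = np.vstack([blob_a, blob_b])
labels = segment_point_cloud(cloud)
print(sorted(set(labels.tolist())))
```

DBSCAN suits this task because it does not need the number of segments in advance and it flags isolated points as noise, which is common in lidar data.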

Where it falters

As with any new technology, failure modes are potentially limitless. This discussion is not intended to be exhaustive, and I won’t even touch on general concerns such as prompt-injection attacks. After posing computer vision tasks to ChatGPT for multiple days, I noticed a few recurring issues: long-tail scenarios, mathy manipulations, and expansive code blocks. As in the previous section, I’ll give examples of each.

Look out for the long tail

In any field, there will be a variety of tasks that are staples of certain subfields, but are dwarfed by more common motifs in the sprawling corpora employed in training LLMs. ChatGPT has its fair share of trouble with these domains, and can be quite sensitive to minutiae when prompted on niche subjects. One word can mean the difference between a desired result, and an idea getting lost in the recesses of ChatGPT’s immense representational structure.

An example of this is 3D computer vision, a small but strong subfield of computer vision concerned with three-dimensional spatial data. ChatGPT has a working knowledge of point clouds:

“A point cloud is a set of points in 3D space that represent the surface of an object. Each point in the point cloud is defined by its 3D coordinates (x, y, and z) and may also include additional information such as color or normal vector. Point clouds are commonly used in computer vision and 3D modeling applications.”

When I ask it to “write code to define the PointNet architecture, which acts on point clouds”, its response includes the symmetric max pooling function, which allows the model to act directly on unstructured point cloud data.

```python
import tensorflow as tf

# Define the input layer
input_layer = tf.keras.layers.Input(shape=(None, 3))

# Use a shared MLP to process the input point cloud
mlp1 = tf.keras.layers.Dense(64, activation="relu")
mlp2 = tf.keras.layers.Dense(64, activation="relu")
mlp3 = tf.keras.layers.Dense(64, activation="relu")
x = mlp1(input_layer)
x = mlp2(x)
x = mlp3(x)

# Use max pooling to get the features of the entire point cloud
global_features = tf.keras.layers.GlobalMaxPooling1D()(x)

# Use a fully connected layer to predict the output
output_layer = tf.keras.layers.Dense(units=1, activation=None)(global_features)

# Create the model
model = tf.keras.Model(inputs=input_layer, outputs=output_layer)
```

“This code defines a PointNet architecture that takes in a point cloud as input and uses a shared multi-layer perceptron (MLP) to process each point independently. The output of the MLP for each point is then passed through a global max pooling layer, which extracts the features of the entire point cloud. Finally, a fully connected layer is used to predict the output based on the global features of the point cloud.

This is just one possible implementation of the PointNet architecture, and there are many different variations and modifications that can be made to the architecture depending on the specific requirements of your project.”

When I instead prompt ChatGPT with just “write code for the PointNet model architecture”, omitting explicit mention of point clouds, I get the following in response:

```python
import torch
from torch import nn
import torch.nn.functional as F  # not in ChatGPT's response, but needed for F.relu below

class PointNet(nn.Module):
    def __init__(self):
        super(PointNet, self).__init__()
        # define the neural network layers
        self.fc1 = nn.Linear(3, 64)
        self.fc2 = nn.Linear(64, 64)
        self.fc3 = nn.Linear(64, 64)
        self.fc4 = nn.Linear(64, 128)
        self.fc5 = nn.Linear(128, 1024)
        self.fc6 = nn.Linear(1024, 512)
        self.fc7 = nn.Linear(512, 256)
        self.fc8 = nn.Linear(256, 128)
        self.fc9 = nn.Linear(128, 64)
        self.fc10 = nn.Linear(64, 10)

    def forward(self, x):
        # apply the neural network layers to the input point cloud
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = F.relu(self.fc3(x))
        x = F.relu(self.fc4(x))
        x = F.relu(self.fc5(x))
        x = F.relu(self.fc6(x))
        x = F.relu(self.fc7(x))
        x = F.relu(self.fc8(x))
        x = F.relu(self.fc9(x))
        x = self.fc10(x)
        return x
```

“This code defines a PointNet class that extends the nn.Module class from PyTorch. The PointNet class defines a neural network with 10 fully connected layers, which are defined in the __init__() method of the class. The forward method applies these layers to an input point cloud and returns the output of the model.

To use the PointNet model, we would create an instance of the PointNet class and then call the forward method on an input point cloud. The output of the model would be a tensor of shape (1, 10), which contains the predictions made by the model on the input point cloud”.

Despite the chatbot explicitly referencing point clouds in its response, a symmetric function is nowhere to be found in the code. This omission comes even though the paper introducing PointNet has garnered almost 10,000 citations, the official GitHub repository has accumulated 4,000+ stars, and results related to the (correct) PointNet model single-handedly monopolize the first page of Google’s search results.
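To make the missing ingredient concrete, here is a minimal NumPy sketch of a PointNet-style classifier. It is not the official PointNet implementation, which also includes input and feature transform networks. It applies a shared MLP to every point, then takes a max over the point axis. The weights are random and the layer sizes are illustrative. The last lines check that shuffling the points leaves the output unchanged, which is exactly what the symmetric max provides.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


class TinyPointNet:
    """PointNet-style sketch: shared per-point MLP + max pooling over points."""

    def __init__(self, hidden=(64, 128), n_classes=10, seed=0):
        rng = np.random.default_rng(seed)
        dims = (3,) + tuple(hidden)
        # the same weights are applied to every point (the "shared" MLP)
        self.shared = [
            (rng.normal(0.0, 0.1, (d_in, d_out)), np.zeros(d_out))
            for d_in, d_out in zip(dims[:-1], dims[1:])
        ]
        self.head = (rng.normal(0.0, 0.1, (dims[-1], n_classes)), np.zeros(n_classes))

    def __call__(self, points):
        # points: (n_points, 3)
        x = points
        for w, b in self.shared:
            x = relu(x @ w + b)
        # symmetric function: the max over points ignores their order
        global_feat = x.max(axis=0)
        w, b = self.head
        return global_feat @ w + b  # (n_classes,) logits


# Usage: the logits are unchanged when the points are shuffled
rng = np.random.default_rng(1)
cloud = rng.normal(size=(500, 3))
model = TinyPointNet()
logits = model(cloud)
shuffled_logits = model(cloud[rng.permutation(len(cloud))])
print(np.allclose(logits, shuffled_logits))
```

In ChatGPT's PyTorch version, by contrast, nothing ever aggregates across points, so each point gets its own 10 outputs.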

Math manipulations get messy

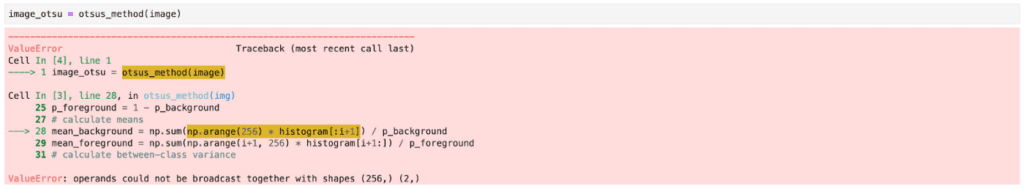

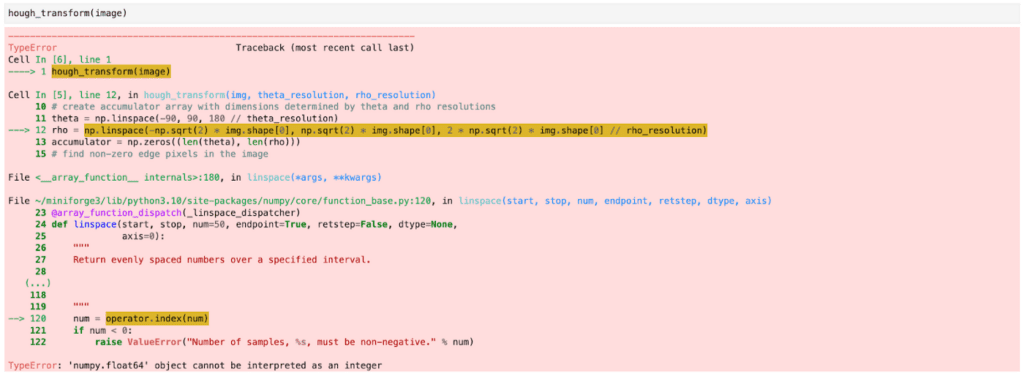

While ChatGPT is capable of solving surprisingly involved standalone math problems and even generating its own proofs, the chatbot struggles to string together multiple mathematical lines of code. For example, ChatGPT fails when asked to implement Otsu thresholding or the Hough transform from scratch, without using computer vision libraries.

Otsu threshold:

```python
def otsus_method(img):
    # calculate histogram of grayscale values
    histogram = np.zeros(256)
    for i in range(img.shape[0]):
        for j in range(img.shape[1]):
            histogram[img[i, j]] += 1
    # normalize histogram
    histogram = histogram / img.size
    # calculate cumulative sum of normalized histogram
    cumulative_sum = np.cumsum(histogram)
    # calculate cumulative sum of squares of normalized histogram
    cumulative_sum_of_squares = np.cumsum(histogram ** 2)
    # initialize variables
    optimal_threshold = 0
    max_variance = 0
    # iterate over all possible threshold values
    for i in range(256):
        # calculate background and foreground probabilities
        p_background = cumulative_sum[i]
        p_foreground = 1 - p_background
        # calculate means
        mean_background = np.sum(np.arange(256) * histogram[:i+1]) / p_background
        mean_foreground = np.sum(np.arange(i+1, 256) * histogram[i+1:]) / p_foreground
        # calculate between-class variance
        variance = p_background * p_foreground * (mean_background - mean_foreground) ** 2
        # update optimal threshold if necessary
        if variance > max_variance:
            max_variance = variance
            optimal_threshold = i
    return optimal_threshold
```

Hough transform:

```python
def hough_transform(img, theta_resolution=1, rho_resolution=1):
    # convert image to grayscale
    img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # detect edges in the image using Canny edge detection
    edges = cv2.Canny(img, 50, 200)
    # create accumulator array with dimensions determined by theta and rho resolutions
    theta = np.linspace(-90, 90, 180 // theta_resolution)
    rho = np.linspace(-np.sqrt(2) * img.shape[0], np.sqrt(2) * img.shape[0], 2 * np.sqrt(2) * img.shape[0] // rho_resolution)
    accumulator = np.zeros((len(theta), len(rho)))
    # find non-zero edge pixels in the image
    y_idxs, x_idxs = np.nonzero(edges)
    # convert x and y indices to cartesian coordinates
    points = np.stack([x_idxs, y_idxs], axis=1)
    # iterate over all edge points
    for x, y in points:
        # iterate over all theta values
        for i, t in enumerate(theta):
            # calculate rho for current theta value
            r = x * np.cos(t) + y * np.sin(t)
            # find closest rho value and increment corresponding accumulator bin
            j = np.argmin(np.abs(rho - r))
            accumulator[i, j] += 1
    return accumulator, theta, rho
```

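In many of these cases, the high-level logical flow isn’t necessarily wrong, or at least isn’t too far off. Instead, the choices made on one line conflict with choices made on later lines. The attempted Otsu thresholding multiplies `np.arange(256)` by the partial histogram `histogram[:i+1]`, so it fails when it tries to broadcast together operands of incompatible shapes. The Hough transform falters with data types: it passes a float as the number of samples to `np.linspace`, and it feeds angles in degrees to `np.cos`, which expects radians.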
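For comparison, here is a from-scratch Otsu sketch in NumPy. This is our version, not ChatGPT's. It works entirely with length-256 cumulative sums, so no shapes need to be lined up by hand. It assumes an 8-bit grayscale image and uses the standard between-class variance σ_B²(t) = (μ_T·ω(t) − μ(t))² / (ω(t)(1 − ω(t))). Here ω(t) is the fraction of pixels at or below t, μ(t) is the cumulative mean up to t, and μ_T is the global mean.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu threshold for a uint8 grayscale image; foreground is gray > threshold."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    omega = np.cumsum(prob)          # background probability for each threshold t
    mu = np.cumsum(prob * levels)    # cumulative first moment up to t
    mu_total = mu[-1]                # global mean intensity
    denom = omega * (1.0 - omega)
    # thresholds that put every pixel in one class get variance 0
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))


# Usage: a synthetic two-level image
img = np.full((8, 8), 50, dtype=np.uint8)
img[:, 4:] = 200
t = otsu_threshold(img)
binary = img > t
print(t, binary.sum())
```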

Some of these mathematical mishaps are easily corrected, whether by converting an int to a float, or rewriting a single faulty line of code. Other times these surface-level errors may be concealing more clandestine complications. If you’re going to use ChatGPT for these types of problems, proceed with due caution. The more modular the code you can get ChatGPT to write, the better.
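Likewise, here is a from-scratch Hough line transform. This is our sketch, not ChatGPT's. It avoids both data-type pitfalls: it computes an integer number of ρ bins, and it converts θ to radians before calling `np.cos`. To stay free of CV libraries, it takes a precomputed binary edge map instead of running Canny itself. The function name and bin sizes are our own choices.

```python
import numpy as np


def hough_lines(edges, theta_step_deg=1.0, rho_step=1.0):
    """Vote for lines rho = x*cos(theta) + y*sin(theta) through edge pixels.

    edges: 2D boolean (or 0/1) edge map. Returns (accumulator, thetas in radians, rhos).
    """
    h, w = edges.shape
    diag = np.hypot(h, w)  # upper bound on |rho|
    thetas = np.deg2rad(np.arange(-90.0, 90.0, theta_step_deg))
    n_rho = int(np.ceil(2 * diag / rho_step)) + 1  # an int, as np.linspace requires
    rhos = np.linspace(-diag, diag, n_rho)
    acc = np.zeros((len(thetas), n_rho), dtype=np.int64)

    ys, xs = np.nonzero(edges)
    # rho for every (edge point, theta) pair: shape (n_points, n_thetas)
    r = np.outer(xs, np.cos(thetas)) + np.outer(ys, np.sin(thetas))
    # index of the nearest rho bin
    r_idx = np.rint((r + diag) / (2 * diag) * (n_rho - 1)).astype(int)
    theta_idx = np.broadcast_to(np.arange(len(thetas)), r_idx.shape)
    # unbuffered add so repeated (theta, rho) pairs each count
    np.add.at(acc, (theta_idx.ravel(), r_idx.ravel()), 1)
    return acc, thetas, rhos


# Usage: a synthetic 50x50 edge map with one vertical line at x = 10
edges = np.zeros((50, 50), dtype=bool)
edges[:, 10] = True
acc, thetas, rhos = hough_lines(edges)
t_idx, r_idx = np.unravel_index(np.argmax(acc), acc.shape)
print(np.rad2deg(thetas[t_idx]), rhos[r_idx])
```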

Expansive solutions breed errors

It may not be too big of a surprise, but the more code that is generated, the more likely it seems that there will be an error (or multiple errors). After all, one could easily argue that humans are the same. For transformer models, it is a well-known problem that coherence (and model performance) diminishes as the number of “blocks” in the output increases. Blocks containing computer vision code are no exception.

When I asked ChatGPT to “implement optical character recognition (OCR) from scratch”, for instance, the model failed irredeemably. This failure was more of a complete mishmash than a series of understandable mathematical mistakes.

Of course, that doesn’t mean ChatGPT is unable to perform OCR.

When I prompted the chatbot with the more lenient “apply optical character recognition”, it showed that at least it knows how to use the relevant method in pytesseract:

```python
import cv2
import pytesseract

# Load the license plate image
image = cv2.imread("license_plate.png")

# Convert the image to grayscale
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Apply Otsu's thresholding to binarize the image
threshold, binary = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY+cv2.THRESH_OTSU)

# Use Tesseract to perform OCR on the license plate image
text = pytesseract.image_to_string(binary, lang="eng")

# Print the recognized text
print(text)
```

The problem often rears its ugly head when ChatGPT is constructing neural networks in PyTorch, TensorFlow, or Sklearn, where it may string together a bunch of layers in a way that does not accomplish anything of use.

Where to exercise extreme caution

The previous three categories only scratch the surface of where things can go wrong using ChatGPT for computer vision. If you’re not careful, the AI agent might feed you egregiously erroneous answers.

When I asked ChatGPT to give me the “inference time verse year released for the 10 best object detection models”, I received these details in response:

```python
# Define the names and years of the 10 best object detection models
model_names = ["Faster R-CNN", "YOLOv3", "SSD", "RetinaNet", "Mask R-CNN",
               "R-FCN", "SSD with FPN", "YOLOv4", "Grid R-CNN", "FCOS"]
model_years = [2015, 2018, 2016, 2017, 2017, 2016, 2017, 2020, 2019, 2019]

# Define the inference times of the 10 best object detection models (in milliseconds)
model_inference_times = [100, 30, 20, 40, 60, 50, 25, 15, 35, 45]
```

At first glance, this might appear reasonably trustworthy. The models are all fairly well known in object detection, and “best” is somewhat subjective anyway. The years check out too. Where this all breaks down is the inference times. These times are pulled from a variety of sources, all with different conditions and assumptions (such as hardware, input resolution, and batch size). ChatGPT’s response makes it seem like they can be compared apples to apples, which is patently false.
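If you need inference times you can actually compare, measure them yourself under one fixed setup. Here is a minimal standard-library sketch; the helper name and defaults are ours. It warms up the model, times repeated calls with `time.perf_counter`, and reports the median. For GPU models you would also need to synchronize the device before reading the clock, for example with `torch.cuda.synchronize()` in PyTorch. This sketch does not do that.

```python
import statistics
import time


def median_latency_ms(fn, *args, warmup=5, repeats=50):
    """Median wall-clock latency of fn(*args), in milliseconds.

    Warm-up calls are discarded so one-time costs (caching, lazy
    initialization) don't skew the result.
    """
    for _ in range(warmup):
        fn(*args)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


# Usage with a hypothetical stand-in for a model's forward pass
print(median_latency_ms(sum, range(100_000)))
```

The numbers are only comparable across models when hardware, batch size, input resolution, and numeric precision are held fixed.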

At the end of the day, the burden of discerning truth lies with the human asking the questions and interpreting ChatGPT’s responses. Asking a vague question about inference times is a bad start, but even better-posed questions can yield incorrect answers.

In a similar vein, you should probably be cautious whenever ChatGPT spits out specific numeric values that aren’t explicitly given as an example. Sometimes these values are appropriate, as when it knew to normalize inputs to AlexNet with the standard ImageNet channel means and standard deviations:

```python
transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
```

When I asked ChatGPT to perform depth perception using stereo images, it included a line of code that read:

```python
# Calculate the depth of each pixel
depth = 9.0 * 0.54 / (disparity + 0.00001)
```

When questioned why it chose these values, it clarified:

“This formula uses the known parameters of the stereo camera system, such as the baseline (the distance between the two cameras) and the focal length, to convert the disparity value (which is the difference in horizontal position of a pixel in the left and right images) into a depth value.

The specific values used in this formula (9.0 and 0.54) are just examples, and they may not be appropriate for all stereo camera systems. In general, the formula for calculating depth from disparity is:

depth = baseline * focal_length / (disparity + epsilon)

Where baseline is the distance between the two cameras, focal_length is the focal length of the cameras, disparity is the disparity map computed from the stereo images, and epsilon is a small value used to avoid division by zero. The values of these parameters will depend on the specific stereo camera system that you are using”.
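Here is a sketch of the same formula with the calibration values made explicit. The function and parameter names are ours, and the numbers in the usage line are hypothetical. With focal length in pixels and baseline in meters, depth comes out in meters. Instead of adding a small epsilon, the sketch marks pixels with zero or negative disparity as invalid. Adding epsilon would turn an unmatched pixel into an enormous but finite depth.

```python
import numpy as np


def disparity_to_depth(disparity, focal_length_px, baseline_m):
    """Depth = focal_length * baseline / disparity; invalid pixels become inf."""
    disparity = np.asarray(disparity, dtype=float)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0  # no match / no disparity -> no depth estimate
    depth[valid] = focal_length_px * baseline_m / disparity[valid]
    return depth


# Usage with hypothetical calibration values; substitute your own camera's
d = np.array([[10.0, 20.0], [0.0, 40.0]])
print(disparity_to_depth(d, focal_length_px=700.0, baseline_m=0.1))
```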

Another area of concern is the evaluation of dataset and model quality. When tasked with writing code to “evaluate the quality and diversity of an image dataset”, ChatGPT responded with the following code:

```python
import os

import numpy as np  # not in ChatGPT's response, but needed below
from PIL import Image
from sklearn.neighbors import NearestNeighbors

# Define the path to the dataset directory
dataset_dir = './dataset'

# Load the images in the dataset
images = []
for filename in os.listdir(dataset_dir):
    # Load the image
    image = Image.open(os.path.join(dataset_dir, filename))
    # Convert the image to grayscale and resize it to a fixed size
    image = image.convert('L').resize((128, 128))
    # Add the image to the list of images
    images.append(image)

# Flatten the images into a single array
flattened_images = np.stack([np.array(image) for image in images]).reshape(len(images), -1)

# Compute the nearest neighbors of the flattened images
nbrs = NearestNeighbors(n_neighbors=5).fit(flattened_images)
distances, indices = nbrs.kneighbors(flattened_images)

# Compute the mean and standard deviation of the distances to the nearest neighbors
mean_distance = np.mean(distances[:, 1:])
std_distance = np.std(distances[:, 1:])

print('Mean distance to nearest neighbor:', mean_distance)
print('Standard deviation of distances:', std_distance)
```

Along with an accompanying exposition:

“In this example, the images variable is a list of images in the dataset. The images are first loaded from the files on disk, then converted to grayscale and resized to a fixed size using the convert and resize methods from the PIL.Image module. The images are then flattened into a single array using the stack and reshape methods from the numpy module. The nbrs variable computes the nearest neighbors of the flattened images using the NearestNeighbors class from the sklearn.neighbors module. The mean_distance and std_distance variables compute the mean and standard deviation of the distances to the nearest neighbors, which can be used as metrics to evaluate the quality and diversity of the dataset. A lower mean distance and a higher standard deviation indicate a higher-quality and more diverse dataset.”

While this approach is not necessarily, or even technically, wrong, it is at the very least unsophisticated.

I received a similarly uninspiring answer when I asked ChatGPT to diagnose distribution shift in an image dataset, and it used a t-test (a test which just compares the means of two groups of samples) on the raw pixel values. You can also see that ChatGPT attempted to compute the mean squared error between two sets of images, which only makes sense if the sets have the same size, but we will give the chatbot the benefit of the doubt here and move on.

```python
# Import necessary libraries
import numpy as np
from scipy.stats import ttest_ind
from sklearn.metrics import mean_squared_error

# Load the original and new datasets
original_data = ...
new_data = ...

# Calculate the mean squared error between the original and new images
mse = mean_squared_error(original_data, new_data)

# Use a t-test to compare the means of the original and new datasets
t, p = ttest_ind(original_data, new_data)

# Print the results
print("Mean squared error:", mse)
print("T-test results: t =", t, "p =", p)

# If the mean squared error is large and the t-test p-value is small,
# this indicates a significant distribution shift in the data
```

Diagnosing distribution shifts in machine learning is in general a difficult problem, but at the very least, a basic understanding of the various types of possible shifts is standard, and there are a variety of more nuanced approaches than applying a t-test.
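One such approach is a classifier two-sample test: train a classifier to tell the two datasets apart, then check its held-out AUC. We chose it purely for illustration; ChatGPT did not suggest it. The sketch assumes you already have one feature vector per image, such as embeddings from a pretrained network. The synthetic features below stand in for those, and the helper name is ours.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score


def domain_classifier_auc(features_a, features_b):
    """Cross-validated ROC AUC of a classifier separating dataset A from dataset B.

    An AUC near 0.5 means the datasets are hard to tell apart in this feature
    space; an AUC well above 0.5 suggests a distribution shift.
    """
    X = np.vstack([features_a, features_b])
    y = np.concatenate([np.zeros(len(features_a), dtype=int),
                        np.ones(len(features_b), dtype=int)])
    clf = LogisticRegression(max_iter=1000)
    # held-out folds, so the score reflects images the classifier never saw
    return cross_val_score(clf, X, y, cv=5, scoring="roc_auc").mean()


# Usage with synthetic 16-dimensional "embeddings"
rng = np.random.default_rng(0)
original = rng.normal(size=(300, 16))
same = rng.normal(size=(300, 16))
shifted = rng.normal(loc=1.0, size=(300, 16))
print(domain_classifier_auc(original, same), domain_classifier_auc(original, shifted))
```

Unlike a t-test on raw pixels, this compares whole feature distributions, not just their means. A linear classifier will still miss shifts that are not linearly separable in the chosen features.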

One last example is instructive. When I prompted ChatGPT to “compute how mistaken my model is given a set of ground truth values, predictions, and prediction confidence scores”, the crux of its response was “you can use a metric like accuracy or precision to measure the performance of your model”. That misses the mark entirely and doesn’t use the confidence scores at all. For reference, in the open source computer vision library FiftyOne, a quantity of this kind can be computed for a dataset with the compute_mistakenness() method of the FiftyOne Brain (fiftyone.brain).
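To show what using the confidence scores could look like, here is a simple illustrative score of our own. It is not FiftyOne's mistakenness formula, and the function name is hypothetical. Confident wrong predictions score near 1, confident correct predictions score near 0, and low-confidence predictions land near 0.5 either way.

```python
import numpy as np


def confidence_weighted_mistakes(ground_truth, predictions, confidences):
    """Illustrative per-sample score in [0, 1] combining correctness and confidence."""
    gt = np.asarray(ground_truth)
    pred = np.asarray(predictions)
    conf = np.clip(np.asarray(confidences, dtype=float), 0.0, 1.0)
    wrong = gt != pred
    # wrong: 0.5 + 0.5*conf (up to 1); right: 0.5 - 0.5*conf (down to 0)
    return np.where(wrong, 0.5 + 0.5 * conf, 0.5 - 0.5 * conf)


# Usage: sort samples by score to review the likeliest label or model errors first
scores = confidence_weighted_mistakes(["cat", "dog", "cat"], ["cat", "cat", "cat"], [0.9, 0.95, 0.2])
print(np.argsort(-scores))
```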

Why ChatGPT empowers CV engineers

Nevertheless, in spite of all these shortcomings, ChatGPT can still be a force for good in computer vision. At least that’s one person’s opinion.

You might have noticed a theme in the examples I’ve included. ChatGPT is a tool that can help computer vision engineers and practitioners do their jobs more efficiently. It is a very powerful tool that can also be quite dangerous. And like any tool, it is only as good as its user.

From a programming perspective, many of the same principles that ensure good human engineering can make for good machine-generated code: aim for modular design, decompose high-level tasks into pieces if possible, and leverage existing libraries instead of reinventing the wheel.

If used effectively, ChatGPT can help CV engineers write boilerplate CV code more quickly, freeing up humans to focus on the important tasks of building high-quality datasets and evaluating ML models, using toolsets from companies like Scale, V7, and Voxel51.

We are living in an exciting time. But be careful.

Just for fun

On a lighter note, no article on ChatGPT would be complete without highlighting just how peculiar, interesting, and often hilarious its responses can be. Accordingly, I’ve prepared a few of my favorite responses that are, at least tangentially, related to computer vision:

Is a picture actually worth a thousand words?

“It is often said that a picture is worth a thousand words, meaning that a single image can convey a complex idea or story more effectively than a lengthy explanation. This is because pictures can capture the attention of the viewer and convey information quickly and efficiently.

However, the exact value of a picture in terms of words can vary depending on the context and the audience. For example, a picture that is rich in detail and visual information may be able to convey more than a thousand words, while a simpler image may be less effective. Additionally, the ability of a picture to convey meaning also depends on the viewer’s familiarity with the subject matter and their ability to interpret the image.

Overall, while a picture may be able to convey a large amount of information quickly and effectively, its value in terms of words can vary and may not always be as high as a thousand”.

Write a poem about open source computer vision package FiftyOne

“It’s easy to use and highly flexible,

With support for many different platforms and specs,

Whether you’re a researcher or a developer,

FiftyOne has something for every level.

So if you’re looking for a powerful tool,

To help with your computer vision tasks,

Give FiftyOne a try,

And see how it can make your work more efficient and fast.”

What is the most significant image of all time?

“It is difficult to determine the most significant image of all time, as the significance of an image can vary depending on the context and the perspective of the viewer. However, some images are widely considered to be significant due to their impact on history, culture, and society.

One example of a significant image is the “Migrant Mother” photograph, taken by Dorothea Lange in 1936. The photograph depicts a mother and her children living in poverty during the Great Depression, and it became a symbol of the struggles faced by many Americans during this time.

Another example of a significant image is the “Earthrise” photograph, taken by astronaut William Anders during the Apollo 8 mission in 1968. The photograph shows the Earth rising above the lunar surface, and it is credited with inspiring the environmental movement and raising awareness of the need to protect our planet.

Overall, there are many images that can be considered significant due to their historical, cultural, or societal impact. The most significant image may vary depending on the perspective and context of the viewer”.

Tell me a joke about computer vision

“Q: Why was the computer cold?

A: Because it left its Windows open”.

Make word art

“Here is a word art design:

```
__ __
/ \ / \
/ /\ \ / /\ \
/ / /\ \ / / /\ \
/ / /__\ \ / / /__\ \
/ / /____\ \/ / /____\ \
/ / /______\ \/ /______\ \
/ / / \/ / \ \
/ / / \/ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
/ / / \ \ \
```

“This design incorporates the words ‘computer vision’ into a geometric pattern. The words are arranged in a way that creates a visual image of a computer screen with a vision-like pattern in the background”.

NOTE: this was after I had asked it a bunch of CV questions. As far as I can tell, the words “computer vision” are not present in that image. Also, when I reset the ChatGPT thread, it told me it was not able to generate word art.

FiftyOne Computer Vision toolset

FiftyOne is an open source machine learning toolset developed by Voxel51 that enables data science teams to improve the performance of their computer vision models by helping them curate high quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster.

- If you like what you see on GitHub, give the project a star.

- Get started! We’ve made it easy to get up and running in a few minutes.

- Join the FiftyOne Slack community, we’re always happy to help.