We just wrapped up the June 8, 2023 Computer Vision Meetup, and if you missed it or want to revisit it, here’s a recap! In this blog post you’ll find the playback recordings, highlights from the presentations and Q&A, as well as the upcoming Meetup schedule so that you can join us at a future event.

First, Thanks for Voting for Your Favorite Charity!

In lieu of swag, we gave Meetup attendees the opportunity to help guide our monthly donation to charitable causes. The charity that received the highest number of votes this month was Global Empowerment Mission (GEM)! GEM aims to bring the most aid, to the most people, in the least amount of time. We are sending this event’s charitable donation of $200 to GEM on behalf of the computer vision community.

Missed the Meetup? No problem. Here are playbacks and talk abstracts from the event.

Plug-and-Play Diffusion Features for Text-Driven Image-to-Image Translation

Large-scale text-to-image generative models have been a revolutionary breakthrough in the evolution of generative AI, allowing us to synthesize diverse images that convey highly complex visual concepts. However, a pivotal challenge in leveraging such models for real-world content creation tasks is providing users with control over the generated content. In this paper, we present a new framework that takes text-to-image synthesis to the realm of image-to-image translation — given a guidance image and a target text prompt, our method harnesses the power of a pre-trained text-to-image diffusion model to generate a new image that complies with the target text, while preserving the semantic layout of the source image. Specifically, we observe and empirically demonstrate that fine-grained control over the generated structure can be achieved by manipulating spatial features and their self-attention inside the model.

Michal Geyer and Narek Tumanya are Masters students at the Weizmann Institute of Science in the Computer Vision department. Learn more about the Plug-and-Play Diffusion Features for Text-Driven Image-to-Image Translation paper on arXiv or check out their poster at CVPR 2023.

Q&A from the talk included:

- Can your approach be extended by including a portion of the image where the text is supposed to make a change/diffuse?

Re-Annotating MS COCO, an Exploration of Pixel Tolerance

The release of the COCO dataset has served as a foundation for many computer vision tasks including object and people detection. In this session, we’ll introduce the Sama-Coco dataset, a re-annotated version of COCO focused on fine-grained annotations. We’ll also cover interesting insights and learnings during the annotation phase, illustrative examples, and results of some of our experiments on annotation quality as well as how changes in labels affect model performance and prediction style.

Jerome Pasquero is Principal Product Manager at Sama. Jerome holds a Ph.D. in electrical engineering and is listed as inventor on more than 120 US patents along with published over 10 peer-reviewed journal and conference articles. Eric Zimmermann is an Applied Scientist at Sama helping to redefine annotation quality guidelines. He is also responsible for building internal curation tools which aim to improve the process on how clients and annotators interact with their data.

Q&A from the talk included:

- With respect to Sama-COCO vs MS-COCO, were any tests done to compare corrections in label only versus, mask only, versus both mask and label relative to model performance changes?

- I am currently working on airborne object segmentation. The basic idea is to segment an incoming airplane by a still camera where initially the plane is small and increases in size as it comes closer… I’m using Yolov8 segmentation. What are your opinions on how to annotate the dataset and which metric should I use to determine the efficiency of the model?

You can find the slides from the talk here.

Redefining State-of-the-Art with YOLOv5 and YOLOv8

In recent years, object detection has been one of the most challenging and demanding tasks in computer vision. YOLO (You Only Look Once) has become one of the most popular and widely used algorithms for object detection due to its fast speed and high accuracy. YOLOv5 and YOLOv8 are the latest versions of this algorithm released by Ultralytics, which redefine what “state-of-the-art” means in object detection. In this talk, we will discuss the new features of YOLOv5 and YOLOv8, which include a new backbone network, a new anchor-free detection head, and a new loss function. These new features enable faster and more accurate object detection, segmentation, and classification in real-world scenarios. We will also discuss the results of the latest benchmarks and show how YOLOv8 outperforms the previous versions of YOLO and other state-of-the-art object detection algorithms. Finally, we will discuss the potential for this technology to “do good” in real-world scenarios and across various fields, such as autonomous driving, surveillance, and robotics.

Glenn Jocher is founder and CEO of Ultralytics. In 2014 Glenn founded Ultralytics to lead the United States National Geospatial-Intelligence Agency (NGA) antineutrino analysis efforts, culminating in the miniTimeCube experiment and the world’s first-ever Global Antineutrino Map published in Nature. Today he’s driven to build the world’s best vision AI as a building block to a future AGI, and YOLOv5, YOLOv8, and Ultralytics HUB are the spearheads of this obsession.

Q&A from the talk included:

- Is the detection performance/speed noticeably faster or better for custom datasets, eg, person only, between Yolo V4, V5, and V8?

- What is more preferable while segmenting small objects? Box annotations or polygon annotations?

- Is it possible to ensemble different YOLO models trained on different sets of data for achieving a credible mAP? What are the methods or ways we can do that?

- Any tips for combining YOLOv8 and RetinaNet?

- Is it possible to use YOLOv8 with other programming languages besides Python, with C++ for example?

- What line of thinking inspired you to make changes to the architecture in V8, for example not use anchor boxes?

- Do you suggest anything else for tracking besides ByteTrack?

Join the Computer Vision Meetup!

Computer Vision Meetup membership has grown to more than 4,000 members in just under a year! The goal of the Meetups is to bring together communities of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of computer vision and complementary technologies.

Join one of the 13 Meetup locations closest to your timezone.

- Ann Arbor

- Austin

- Bangalore

- Boston

- Chicago

- London

- New York

- Peninsula

- San Francisco

- Seattle

- Silicon Valley

- Singapore

- Toronto

We have exciting speakers already signed up over the next few months! Become a member of the Computer Vision Meetup closest to you, then register for the Zoom.

What’s Next?

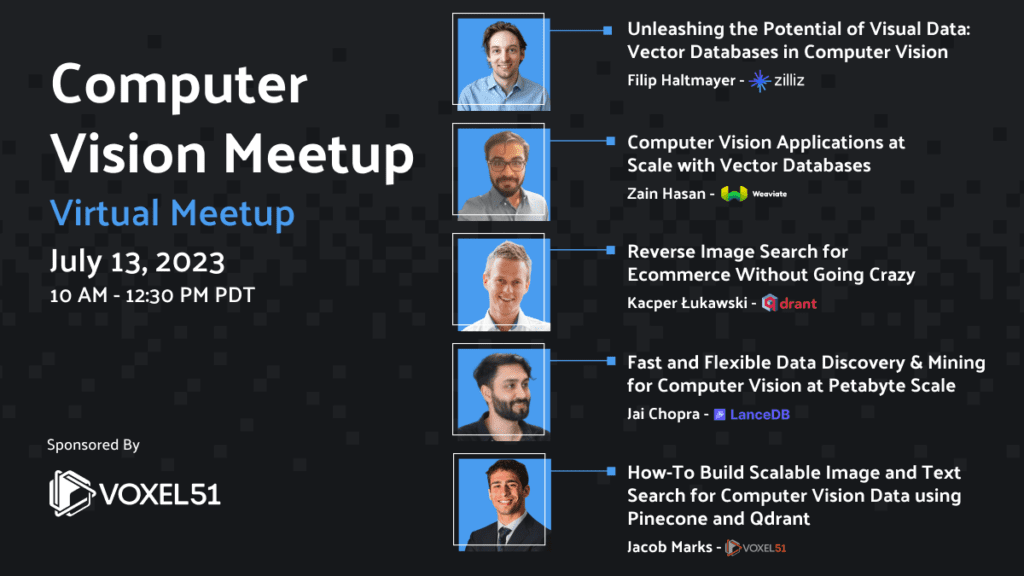

Up next on July 13 at 10 AM Pacific we have a very special vector search-themed Computer Vision Meetup happening featuring speakers from Zilliz/Milvus, Weaviate, Qdrant, LanceDB, and Voxel51.

- Unleashing the Potential of Visual Data: Vector Databases in Computer Vision – Filip Haltmayer (Zilliz)

- Computer Vision Applications at Scale with Vector Databases – Zain Hasan (Weaviate)

- Reverse Image Search for Ecommerce Without Going Crazy – Kacper Łukawski (Qdrant)

- Fast and Flexible Data Discovery & Mining for Computer Vision at Petabyte Scale – Jai Chopra (LanceDB)

- How-To Build Scalable Image and Text Search for Computer Vision Data using Pinecone and Qdrant – Jacob Marks (Voxel51)

Register for the Zoom here. You can find a complete schedule of upcoming Meetups on the Voxel51 Events page.

Get Involved!

There are a lot of ways to get involved in the Computer Vision Meetups. Reach out if you identify with any of these:

- You’d like to speak at an upcoming Meetup

- You have a physical meeting space in one of the Meetup locations and would like to make it available for a Meetup

- You’d like to co-organize a Meetup

- You’d like to co-sponsor a Meetup

Reach out to Meetup co-organizer Jimmy Guerrero on Meetup.com or over LinkedIn to discuss how to get you plugged in.

The Computer Vision Meetup network is sponsored by Voxel51, the company behind the open source FiftyOne computer vision toolset. FiftyOne enables data science teams to improve the performance of their computer vision models by helping them curate high quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster. It’s easy to get started, in just a few minutes.