We just wrapped up the August 24, 2023 Computer Vision Meetup, and if you missed it or want to revisit it, here’s a recap! In this blog post you’ll find the playback recordings, highlights from the presentations and Q&A, as well as the upcoming Meetup schedule so that you can join us at a future event.

First, Thanks for Voting for Your Favorite Charity!

In lieu of swag, we gave Meetup attendees the opportunity to help guide a $200 donation to charitable causes. The charity that received the highest number of votes this month was Education Development Center (EDC), an organization on a mission to open doors to education, employment, and healthier lives for millions of people. We are sending this event’s charitable donation of $200 to the Education Development Center on behalf of the computer vision community!

Missed the Meetup? No problem. Here are playbacks and talk abstracts from the event.

Removing Backgrounds Automatically or with a User’s Language

Image matting, also known as background removal, refers to accurately extracting the foreground from an image, which benefits many downstream applications such as film production and augmented reality. To solve this ill-posed problem, previous methods required extra user input, such as trimaps or scribbles, which takes a large amount of manual effort. In this session, we will introduce our research, which allows users to remove the background automatically or even flexibly choose a specific foreground using natural language. We'll also show some fancy demos and illustrate some downstream applications.
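Matting is commonly framed with the standard compositing equation, in which each observed pixel I is a blend of a foreground color F and a background color B weighted by an opacity α: I = αF + (1 − α)B. Since α, F, and B are all unknown for every pixel, many different combinations explain the same image, which is what makes the problem ill-posed. The minimal Python sketch below only illustrates that compositing model on single RGB pixels; the function name `composite` is hypothetical, and this is not the speaker's matting method.

```python
def composite(alpha, fg, bg):
    """Blend one RGB pixel with the compositing model I = alpha * F + (1 - alpha) * B."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple(alpha * f + (1.0 - alpha) * b for f, b in zip(fg, bg))


# A half-transparent red foreground over a blue background...
print(composite(0.5, (255, 0, 0), (0, 0, 255)))      # (127.5, 0.0, 127.5)
# ...produces exactly the same pixel as a fully opaque purple foreground.
print(composite(1.0, (127.5, 0, 127.5), (0, 0, 0)))  # (127.5, 0.0, 127.5)
```

The two calls show why extra cues (trimaps, scribbles, or, in this research, a language prompt) help: the observed pixel alone cannot tell the two explanations apart.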

Jizhizi Li has just completed her Ph.D. in Artificial Intelligence at the University of Sydney. She has published several papers in top-tier conferences and journals, including CVPR, IJCV, IJCAI, and ACM Multimedia, and her research interests include computer vision, image matting, multi-modal learning, and AIGC.

Q&A

- What is the difference between the semantic branch and the matting branch?

- What type of filter(s) do you use for noise suppression?

- How did you optimize the architecture of the encoders?

- After training, did you have any observations about the internal feature space that allows discrimination between foreground and background?

- How much training data did you use?

- Would it make sense to use a similarity measure to interpolate background material across naturally similar types of images, to avoid using artificial, non-realistic background material that may warp the internal feature space toward non-natural material?

Resource links

- Jizhizi Li’s GitHub

- Deep Image Matting: A Comprehensive Survey

- [CVPR] Referring Image Matting (CORE A*, CCF A)

- [IJCV] Rethinking Portrait Matting with Privacy Preserving (CORE A*, CCF A, IF 13.369)

- [IJCV] Bridging Composite and Real: Towards End-to-End Deep Image Matting (CORE A*, CCF A, IF 13.369)

- Additional resources and links can be found on Jizhizi’s website

AI at the Edge: Optimizing Deep Learning Models for Real-World Applications

As AI technology continues to advance, there is a growing demand for deep learning models to tackle more complex tasks, particularly on edge devices. However, real-time performance requirements and hardware constraints can make deploying these models on such devices challenging. At SightX, we have been exploring ways to optimize deep learning models for top performance on edge devices while minimizing accuracy degradation.

In this lecture, we will share our insights and techniques for deploying AI on edge devices, specifically focusing on hardware-aware optimization of deep learning models. We’ll review practical ways to effectively deploy deep learning models in real-time scenarios.

Raz Petel, SightX’s Head of AI, has been tackling computer vision challenges with deep learning since 2015, with a focus on making models more efficient, faster, more compact, and more resilient.

Q&A

- Can you mention any of the families of embedded processors that you’re working with?

- What sort of feature extraction do you use, and do those front ends change with application?

- Have you compared the efficiency gained by pruning with that gained by reducing numeric precision (i.e., floating-point dynamic range, or integer bit width if you're using integers)?

- Have you seen performance improve as you remove features incrementally?

- Does the pruning cause some negative impact to the accuracy of the model?

- Could you use an auxiliary model, like an SVM, to perform recursive feature elimination (based on feature coefficient magnitudes) and rank-order features for iterative removal?

- Are wrapper methods too computationally expensive to be practical (as opposed to filter methods)?

- Which one is better, pruning or quantization?
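The answer to the pruning-versus-quantization question wasn't captured here, but it helps to see what each technique actually does. The pure-Python sketch below is a toy illustration, not SightX's pipeline: magnitude pruning zeroes the smallest weights (fewer nonzero parameters), while symmetric int8 quantization keeps every weight but stores it with fewer bits. The function names and the example weights are hypothetical. Which one pays off more typically depends on whether the target hardware can exploit sparse weights or low-precision arithmetic, and the two are often combined.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest absolute values."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization; returns (integer values, scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer values back to approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
print(magnitude_prune(weights, 0.5))  # [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
q, scale = quantize_int8(weights)
print(q)                              # [127, -8, 48, -95, 2, 32]
print(dequantize(q, scale))           # close to the original weights
```

Pruning discards information outright for the smallest weights, whereas quantization introduces a small rounding error (at most half a quantization step) on every weight.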

Drones, Data, and One Direction of Computer Vision

In this lightning talk, Dan covered the current state of drone applications, some of the challenges computer vision applications face when working with drone data, and some solutions and emerging trends, such as generative AI, that these applications can take advantage of.

Dan Gural is a machine learning engineer and is part of the developer relations team at Voxel51.

Q&A

What are the best use cases for FiftyOne? For example, object detection. (Post-acquisition normalization.)

The best uses of FiftyOne for drone footage are to curate the data and to identify potential areas for preprocessing. Data can be curated by removing samples that are unlike those expected at deployment. Data can also be preprocessed to address issues, as seen here with FiftyOne plugins.
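One way to picture "removing samples unlike those expected at deployment" is to compare each training sample's embedding with embeddings of representative deployment data and keep only the close matches. The pure-Python sketch below illustrates that idea with a cosine-similarity threshold against the deployment centroid. It deliberately does not use FiftyOne's own API; the function names, sample IDs, two-dimensional embeddings, and threshold are all hypothetical, and in practice the embeddings would come from a vision model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def curate(train, deploy_refs, threshold):
    """Keep IDs of training samples whose embedding resembles the deployment centroid."""
    dim = len(deploy_refs[0])
    centroid = [sum(v[i] for v in deploy_refs) / len(deploy_refs) for i in range(dim)]
    return [sid for sid, emb in train.items() if cosine(emb, centroid) >= threshold]


# Hypothetical embeddings: the deployment data is aerial footage.
deploy_refs = [[1.0, 0.1], [0.9, 0.0]]
train = {"aerial_01": [1.0, 0.05], "street_01": [0.1, 1.0], "aerial_02": [0.8, 0.2]}
print(curate(train, deploy_refs, threshold=0.9))  # ['aerial_01', 'aerial_02']
```

A single centroid is the simplest choice; if the deployment data is multimodal, comparing against the nearest reference embedding instead of the mean is a natural refinement.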

Are there homography libraries in FiftyOne? (It could help with moving cameras like in drone applications.)

FiftyOne does not natively offer homography or other preprocessing operations, but it can load the images produced by a homography transform, regardless of their shape or resolution.
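Because homography correction happens before the images reach FiftyOne, it can help to recall what the transform does. A homography is a 3×3 matrix H that maps a pixel (x, y) to a new position using homogeneous coordinates followed by a perspective division. The minimal sketch below applies a hypothetical H to single points; libraries such as OpenCV (`cv2.findHomography` to estimate H, `cv2.warpPerspective` to warp a whole image) are the usual tools for full frames.

```python
def apply_homography(H, x, y):
    """Map pixel (x, y) through a 3x3 homography H given as a nested list."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    return u / w, v / w  # perspective division


# Hypothetical H: scale by 2 and shift by (10, 5); last row [0, 0, 1] means no perspective effect.
H = [[2.0, 0.0, 10.0],
     [0.0, 2.0, 5.0],
     [0.0, 0.0, 1.0]]
print(apply_homography(H, 3, 4))  # (16.0, 13.0)
```

A nonzero entry in the bottom row makes w depend on the pixel position, which is what lets a homography model the changing viewpoint of a moving drone camera over a roughly planar scene.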

Resource links

- Learn more about the open source FiftyOne computer vision toolset

- Explore datasets in your browser with Try FiftyOne (no install required!)

- Explore FiftyOne Plugins

Join the Computer Vision Meetup!

Computer Vision Meetup membership has grown to more than 5,000 members in just one year! The goal of the Meetups is to bring together communities of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of computer vision and complementary technologies.

Join whichever of the 13 Meetup locations is closest to your time zone.

- Ann Arbor

- Austin

- Bangalore

- Boston

- Chicago

- London

- New York

- Peninsula

- San Francisco

- Seattle

- Silicon Valley

- Singapore

- Toronto

We have exciting speakers already signed up over the next few months! Become a member of the Computer Vision Meetup closest to you, then register for the Zoom.

What’s Next?

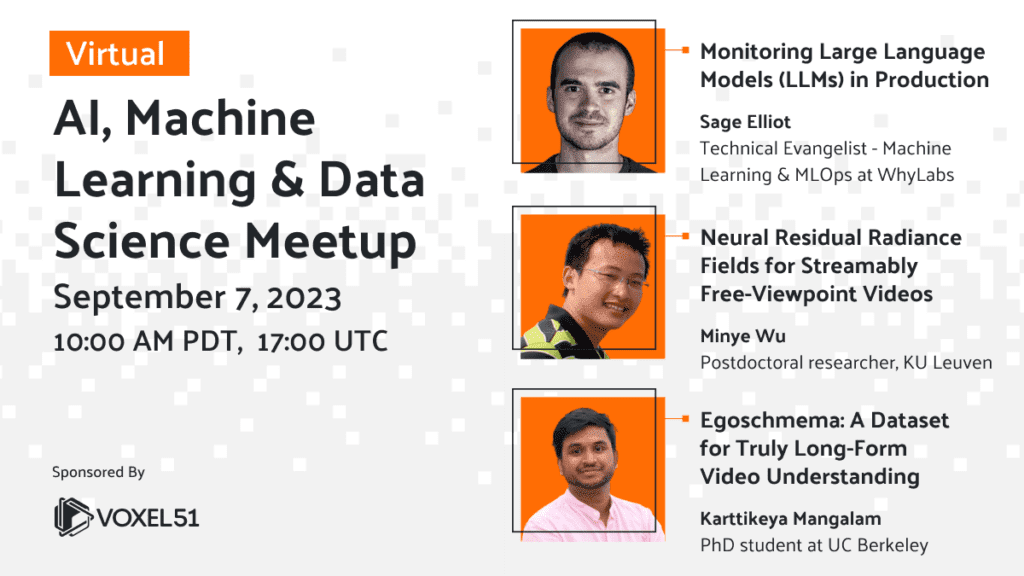

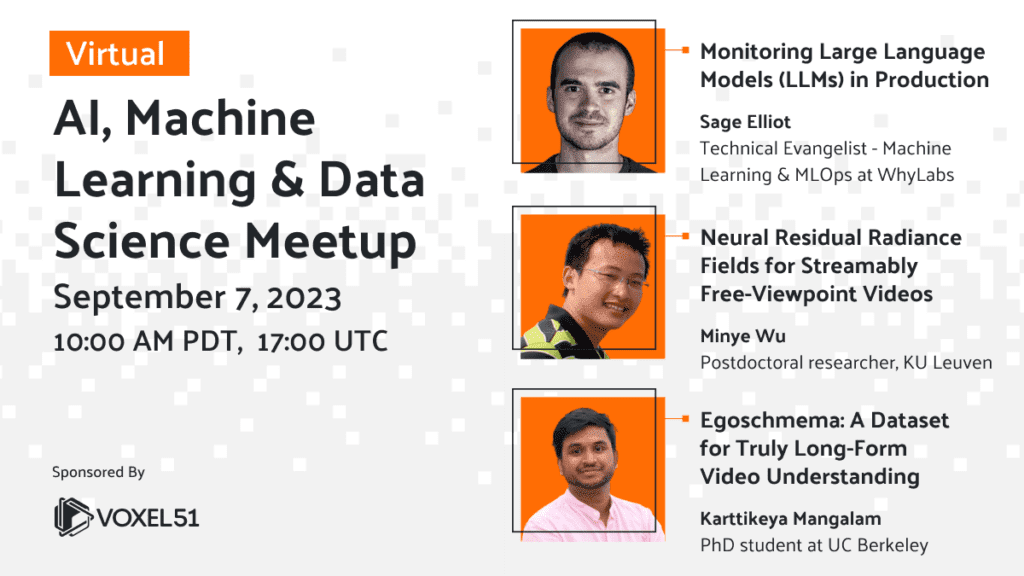

Up next, on Sept 7 at 10 AM Pacific, we have a great lineup of speakers, including:

- Monitoring Large Language Models (LLMs) in Production – Sage Elliot, Technical Evangelist – Machine Learning & MLOps at WhyLabs

- Neural Residual Radiance Fields for Streamably Free-Viewpoint Videos – Minye Wu, Postdoctoral researcher, KU Leuven

- EgoSchema: A Dataset for Truly Long-Form Video Understanding – Karttikeya Mangalam, PhD student at UC Berkeley

Register for the Zoom here. You can find a complete schedule of upcoming Meetups on the Voxel51 Events page.

Get Involved!

There are a lot of ways to get involved in the Computer Vision Meetups. Reach out if you identify with any of these:

- You’d like to speak at an upcoming Meetup

- You have a physical meeting space in one of the Meetup locations and would like to make it available for a Meetup

- You’d like to co-organize a Meetup

- You’d like to co-sponsor a Meetup

Reach out to Meetup co-organizer Jimmy Guerrero on Meetup.com or ping me over LinkedIn to discuss how to get you plugged in.

The Computer Vision Meetup network is sponsored by Voxel51, the company behind the open source FiftyOne computer vision toolset. FiftyOne enables data science teams to improve the performance of their computer vision models by helping them curate high-quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster. It's easy to get started in just a few minutes.