Welcome to our weekly FiftyOne tips and tricks blog where we recap interesting questions and answers that have recently popped up on Slack, GitHub, Stack Overflow, and Reddit.

Wait, what’s FiftyOne?

FiftyOne is an open source machine learning toolset that enables data science teams to improve the performance of their computer vision models by helping them curate high quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster.

- If you like what you see on GitHub, give the project a star.

- Get started! We’ve made it easy to get up and running in a few minutes.

- Join the FiftyOne Slack community, we’re always happy to help.

Ok, let’s dive into this week’s tips and tricks!

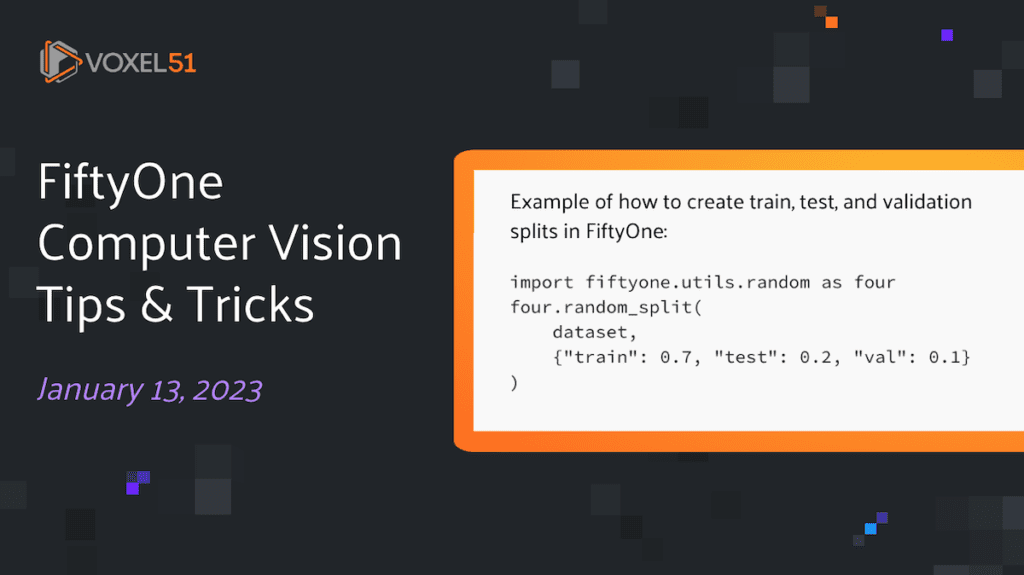

Splitting data in FiftyOne

Community Slack member Muhammad Ali asked,

“Can we separate a dataset into train, test, and validation splits in FiftyOne?”

If you want to randomly split the data, and are okay with the splits being slightly larger or smaller than the specified percentages, then you can do so with the random_split() method in FiftyOne’s random utils. To create train, test, and validation splits with approximately 70%, 20%, and 10% of the data respectively, you can do the following:

import fiftyone.utils.random as four

four.random_split(

dataset,

{"train": 0.7, "test": 0.2, "val": 0.1}

)

The information about which split a sample is in can be found in the tags field, and you can use match_tags() to get a view containing only the samples in each split:

train_view = dataset.match_tags("train")

test_view = dataset.match_tags("test")

val_view = dataset.match_tags("val")

In the FiftyOne App, you can achieve the same effect by selecting or deselecting any of these tags.

If you need more precise splitting, you can compute the number of samples that should be in each split, and index into a shuffled view of the data:

nsample = dataset.count()

ntrain = int(nsample * 0.7)

ntest = int(nsample * 0.2)

nval = nsample - (ntrain + ntest)

shuffled_view = dataset.shuffle()

train_view = shuffled_view[:ntrain]

test_view = shuffled_view[ntrain:ntrain+ntest]

val_view = shuffled_view[-nval:]

for sample in train_view.iter_samples(autosave = True):

sample.tags.append("train")

for sample in test_view.iter_samples(autosave = True):

sample.tags.append("test")

for sample in val_view.iter_samples(autosave = True):

sample.tags.append("val")

Learn more about FiftyOne’s random utils in the FiftyOne Docs.

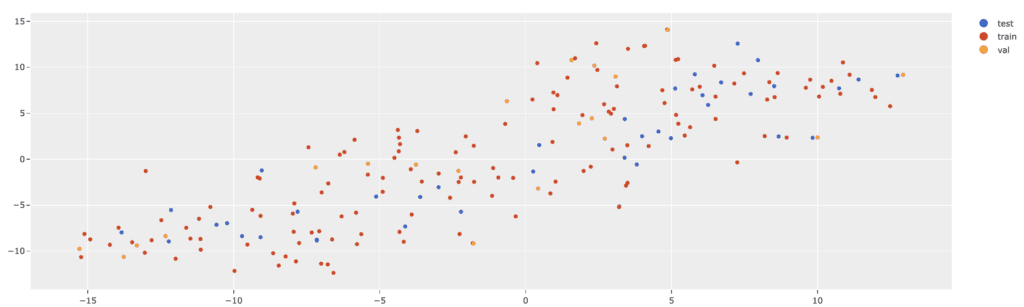

Coloring by tags in the FiftyOne App

Community Slack member Adrian Tofting asked,

“I have a use case where it would be helpful to color embeddings by tags in the FiftyOne App. Is this possible?”

By combining the FiftyOne Brain’s visualize() method with the ViewField, you can create your own view expressions that allow you to color by a variety of properties. The syntax is both easy to use and very flexible.

If you want to color points in an embedding visualization by the last tag found on each sample, you can do this with a single line of code:

import fiftyone as fo

import fiftyone.brain as fob

import fiftyone.utils.random as four

import fiftyone.zoo as foz

from fiftyone import ViewField as F

dataset = foz.load_zoo_dataset("quickstart")

## add tags to dataset

four.random_split(dataset, {"train": 0.7, "test": 0.2, "val": 0.1})

results = fob.compute_visualization(dataset, method = "tsne")

### Color by last tag

plot = results.visualize(labels=F("tags")[-1])

plot.show()

If you instead wanted to color by number of ground truth objects, you could use the following:

plot = results.visualize(

labels=F("ground_truth.detections").length()

)

plot.show()

Learn more about visualizing with the FiftyOne Brain in the FiftyOne Docs.

Dealing with duplicate data

Community Slack member George Pearse asked,

“Is there a safe way to avoid adding duplicate samples to a given dataset? What happens when you add the same collection of samples to a dataset twice with dataset.add_samples(samples)”

Duplicate data can appear in many scenarios in computer vision workflows — sometimes intentionally, and other times erroneously.

In your case, when you ran dataset.add_samples(samples) the second time, the samples that were added to the dataset were identical to those added in all but one crucial way — they were given unique ids. This is because in FiftyOne, all samples are required to have unique sample ids.

This means that if you were to use dataset.delete_samples(samples), you would only be removing the original samples from the dataset, while the copied samples with new sample ids would remain.

If you wanted to delete both the original and copied samples from the dataset, you could do so by first finding all samples with a given file path, and then deleting these.

for sample in samples:

double = dataset.match(

F("filepath") == sample.filepath

)

dataset.delete_samples(double)

Of course, this assumes that only the added samples and their copies shared a common file path.

If you want to identify and remove samples which have duplicated images stored in different locations, you can use the image deduplication procedure documented here.

Learn more about the adding and deleting samples in FiftyOne datasets in the FiftyOne Docs.

Merging datasets in COCO format

Community Slack member Dan Erez asked,

“Let’s say I have two different datasets with different classes, and both are in COCO format. Is there a way to concatenate them into a third dataset, also in COCO format, which contains all of the classes present in each?”

Fortunately, FiftyOne supports all of the functionality necessary to perform this operation! One way to approach this would be to load in the first dataset in COCO format using FiftyOne’s COCODetectionDatasetImporter with the from_dir() method:

import fiftyone as fo

dataset = fo.Dataset.from_dir(

data_path="/path/to/images",

labels_path="/path/to/coco1.json",

dataset_type=fo.types.COCODetectionDataset,

)

Then merge in the data from the second dataset using the merge_dir() method:

dataset.merge_dir(

data_path="/path/to/images",

labels_path="/path/to/coco2.json",

dataset_type=fo.types.COCODetectionDataset,

)

Finally, you can use FiftyOne’s dataset export functionality to export the composite dataset in COCO format:

dataset.export(

labels_path="/path/for/coco3.json",

dataset_type=fo.types.COCODetectionDataset,

)

Learn more about the importing, exporting, and merging datasets in the FiftyOne Docs.

Hiding labels in the FiftyOne App

Community Slack member Santiago Arias asked,

“Does anyone know if there is a way to omit some labels from a view in the FiftyOne App?”

Absolutely! There are many reasons for doing this, all of which involve retaining information while making it easier to understand your computer vision data. You can do so using either select_fields() to explicitly specify which fields to view, or using exclude_fields() to specify which fields should be omitted. In both cases, reducing the number of fields displayed in the app can make your visualization, analysis, and evaluation workflows seamless.

One example where this approach might be useful is when dealing with versatile datasets. Open Images V6, for instance, supports a variety of computer vision tasks, including detection, classification, relationships, and segmentations. To focus our present attention on only segmentations while retaining the other labels, we can employ select_fields():

import fiftyone as fo

import fiftyone.zoo as foz

dataset = foz.load_zoo_dataset(

"open-images-v7",

split="validation",

label_types = ["detections", "classifications", "segmentations"],

max_samples=50,

shuffle=True,

)

segmentation_view = dataset.select_fields("segmentations")

session = fo.launch_app(dataset)

session.view = segmentation_view.view()

Alternatively, if you’re testing a bunch of models on a single dataset, and you’ve added these predictions to the dataset, you may want to create a view containing some, but not all of these model predictions. In this case, you can exclude_fields() to exclude predictions you are not concerned with:

models = [model1, model2, model3, ...]

for model in models:

dataset.apply_model(

model,

label_field=model.name,

confidence_thresh=0.5

)

model_to_exclude = model2

view = dataset.exclude_fields(model2.name)

session = fo.launch_app(dataset)

session.view = view.view()

Learn more about selecting and excluding fields in the FiftyOne Docs.

Join the FiftyOne community!

Join the thousands of engineers and data scientists already using FiftyOne to solve some of the most challenging problems in computer vision today!

- 1,275+ FiftyOne Slack members

- 2,400+ stars on GitHub

- 2,500+ Meetup members

- Used by 224+ repositories

- 54+ contributors