The past 12 months have seen rapid advances in computer vision, from the enabling infrastructure, to new applications across industries, to algorithmic breakthroughs in research, to the explosion of AI-generated art. It would be impossible to cover all of these developments in full detail in a single blog post. Nevertheless, it is worth taking a look back to highlight some of the biggest and most exciting developments in the field

This post is broken into five parts:

- Big-picture trends in computer vision

- The latest and greatest announcements from the tech giants

- Industry-specific computer vision applications

- Computer vision papers you won’t want to miss

- Computer vision tooling news

Computer Vision trends

Transformers take hold of computer vision

Transformer models exploded onto the deep learning scene in 2017 with Attention is All You Need, setting the standard for a variety of NLP tasks and ushering in the era of large language models (LLMs). The Vision Transformer (ViT), introduced in late 2020, marked the first application of these self-attention based models in a computer vision context.

This year has seen research push transformer models to the forefront in computer vision, achieving state-of-the-art performance on a variety of tasks. Just check out the panoply of vision transformer models in Hugging Face’s model zoo, including DETR, SegFormer, Swin Transformer, and ViT! This GitHub page also provides a fairly comprehensive list of transformers in vision.

Data-centric computer vision gains traction

As computer vision matures, an increasingly large portion of machine learning development pipelines is focused on wrangling, cleaning, and augmenting data. Data quality is becoming a bottleneck for performance, and the industry is moving towards data-model co-design. The data-centric ML movement is growing in popularity.

At the helm of this effort are a new wave of startups — synthetic data generation companies (gretel, Datagen, Tonic) and evaluation, observability, and experiment tracking tools (Voxel51, Weights & Biases, CleanLab) — joining existing labeling and annotation services (Labelbox, Label Studio, CVAT, Scale, V7) in the effort.

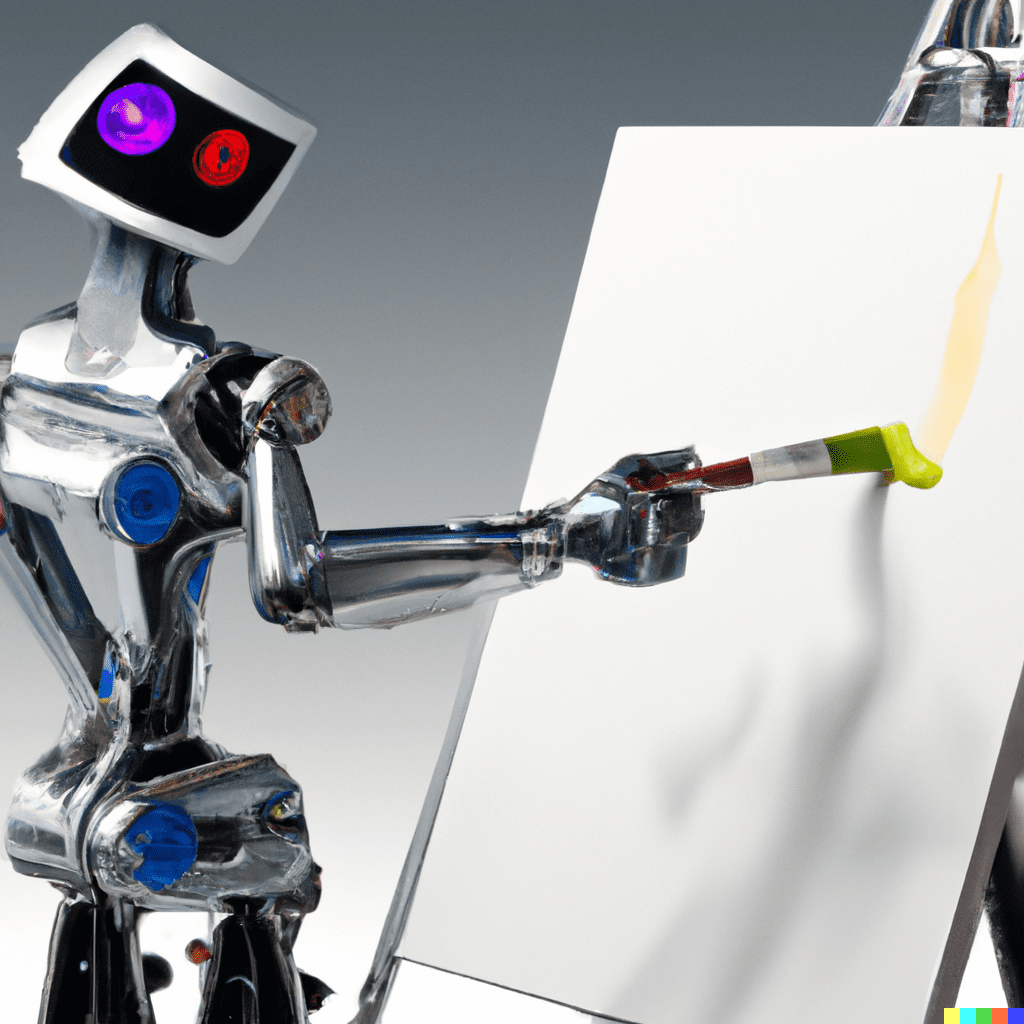

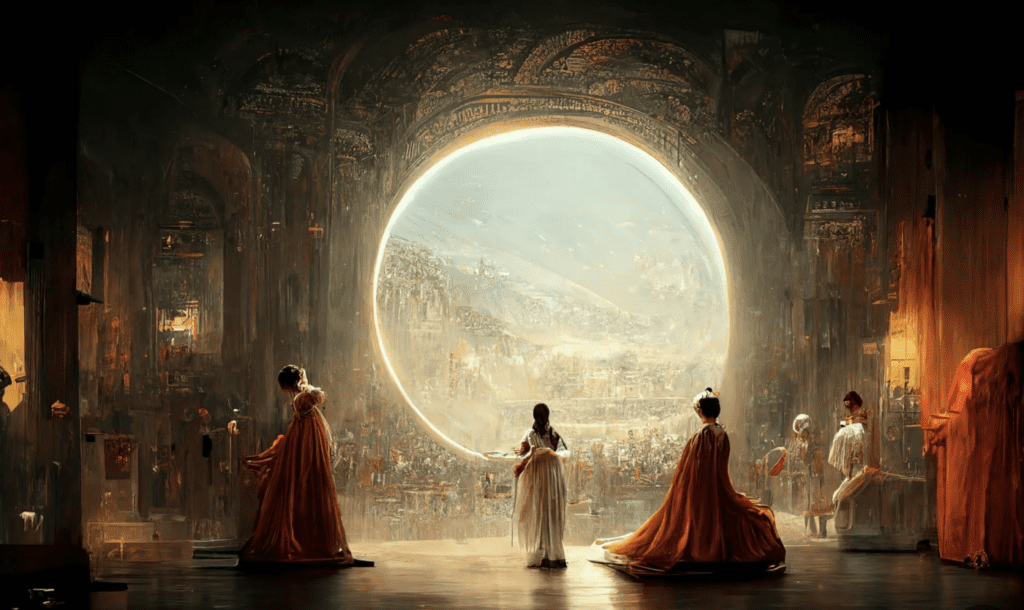

AI-generated artwork gets (too?) good

Between improvements in Generative Adversarial Networks (GANs) and the rapid development and iteration in diffusion models, AI-generated art is having what can only be described as a renaissance. With tools like Stable Diffusion, Nightcafe, Midjourney, and OpenAI’s DALL-E2 it is now possible to generate incredibly nuanced images from user-input text prompts. Artbreeder allows users to “breed” multiple images into new creations, Meta’s Make-A-Video generates videos from text, and RunwayML has changed the game when it comes to creating animations and editing videos. Many of these tools also support inpainting and outpainting, which can be used to edit and extend the scope of images.

With all of these tools revolutionizing AI art capabilities, controversy was all but inevitable, and there has been plenty of it. In September, an AI-generated image won a fine art competition, igniting heated debate about what counts as art, as well as how ownership, attribution, and copyrights will work for this new class of content. Expect this debate to intensify!

Multi-modal AI matures

In addition to AI-generated artwork, 2022 has seen a ton of research and applications at the intersection of multiple modalities. Models and pipelines that deal with multiple types of data, including language, audio, and vision, are becoming increasingly popular. The lines between these disciplines have never been more blurred, and cross-pollination has never been more fruitful.

At the heart of this collision of contexts is contrastive learning, which revamps the embedding of multiple types of data into the same space, the seminal example being Open AI’s Contrastive Language-Image Pretraining (CLIP) model.

One consequence of this is the ability to semantically search through sets of images based on input that can either text or another image. This has spurred a boom in vector search engines, with Qdrant, Pinecone, Weaviate, Milvus, and others leading the way. In a similar vein, the systematic connection between modalities is strengthening visual question answering and zero-shot and few-shot image classification.

Computer Vision buzz from big tech

As dataset sizes continue to grow, the computational and financial resources required to train large, high quality models from scratch has risen dramatically. As a result, many of the most broadly applicable advances this year were either led or supported by scientists from big tech research groups. Here’s some of the highlights.

Alphabet

Alphabet was active in computer vision this year, which saw the Google Brain team study the scaling of Vision Transformers, and Google research develop contrastive captioners (CoCa). The Google Brain team also extended their text-to-image diffusion model Imagen to the video domain with Imagen Video. DeepMind introduced a new paradigm for self-supervised learning, achieving state-of-the-art performance in a variety of transfer learning tasks. Finally, Google released Open Images V7, which adds keypoint data to more than a million images.

Amazon

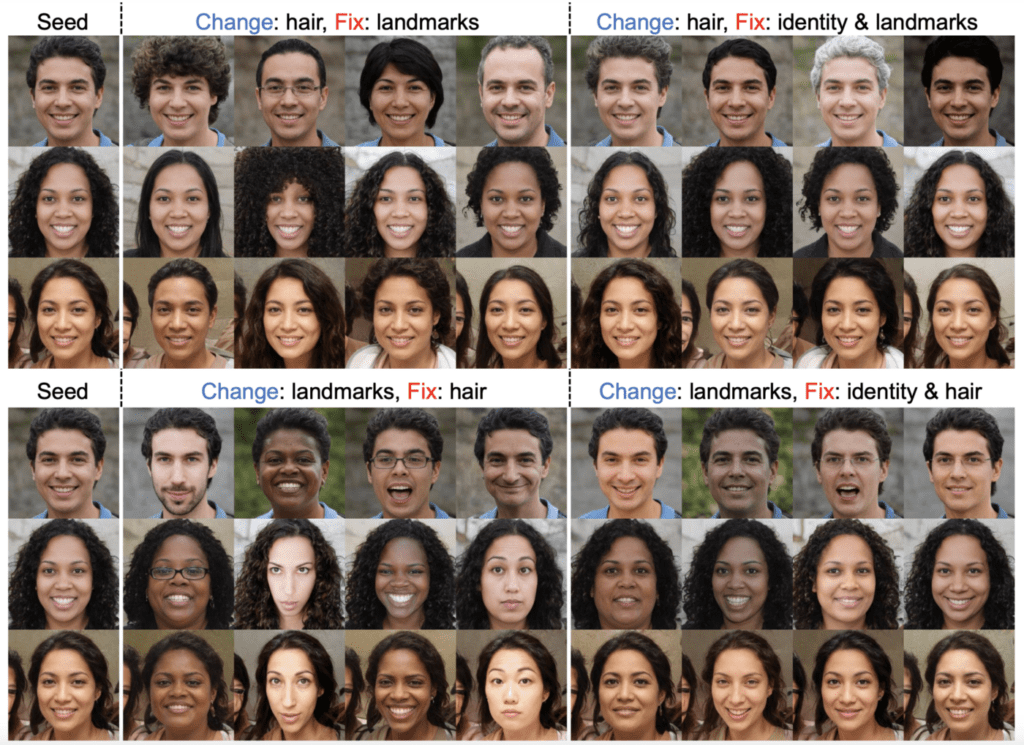

Amazon was prolific to say the least, with 40 papers accepted to just CVPR and ECCV. Highlighting this veritable barrage of research were a paper on translating images into maps, which won the best paper award at ICRA 2022, a method for assessing bias in face verification systems without complete (or any) labels, and a systematic prescription for modifying specific features in images generated by GANs, which works by recasting the problem in the language of Rayleigh quotients.

Microsoft

Microsoft did a lot of work with Transformer models. It was just January when Microsoft’s paper introducing BEiT (BERT Pre-Training of Image Transformers) was accepted at ICLR, and the the ensuing family of models has become a staple of the Transformer model landscape, with the base model accruing 1.4M+ downloads from Hugging Face in the past month alone. The BEiT family is blossoming, with papers on generative vision-language pretraining (VL-BEiT), masked image modeling with vector quantized visual tokenizers (BEiT V2), and modeling image as a foreign language.

Beyond BEiT, Microsoft has been riding the Swin Transformer wave they created last year with StyleSwin and Swin Transformer V2. Other notable works from 2022 include MiniViT: Compressing Vision Transformers with Weight Multiplexing, RegionCLIP: Region-based Language-Image Pretraining, and NICE-SLAM: Neural Implicit Scalable Encoding for SLAM.

Meta

Meta maintained a strong focus on multi-modal machine learning at the crossroads of language and vision. Audio-visual HuBERT achieved state-of-the art results in lip reading and audio-visual speech recognition. Visual Speech Recognition for Multiple Languages in the Wild demonstrates that adding auxiliary tasks to a Visual Speech Recognition (VSR) model can dramatically improve performance. FLAVA: A Foundational Language And Vision Alignment Model presents a single model that performs well across 35 distinct language and vision tasks. And data2vec introduces a unified framework for self-supervised learning that spans vision, speech, and language.

With DEiT III, researchers at Meta AI revisit the training step for Vision Transformers and show that a model trained with basic data augmentation can significantly outperform fully supervised ViTs. Meta also made progress in continual learning for reconstructing signed distance fields (SDFs), and a group of researchers including Yann LeCun shared theoretical insights into why contrastive learning works. Read this. Really.

Finally, in September Meta AI spun out PyTorch into the vendor-agnostic PyTorch Foundation, which shortly thereafter released PyTorch 2.0.

Adobe

In 2022, Adobe took the sophisticated machinery of modern computer vision and turned it to artistic tasks of manipulation like editing, re-styling, and rearranging. Third Time’s the Charm? puts Nvidia’s StyleGAN3 to work editing images and videos, introducing a video inversion scheme that reduces texture sticking. BlobGAN models scenes as collections of mid-level (between pixel-level and image-level) “blobs”, which become associated with objects in the scene without supervision, allowing for editing of scenes on the object-level. ARF: Artistic Radiance Fields accelerates the generation of artistic 3D content by combining style transfer with neural radiance fields (NeRFs).

Nvidia

Nvidia made contributions across the board, including multiple works on performing three dimensional computer vision tasks with single-view (monocular) images and videos. CenterPose sets the standard for category-level 6 degree of freedom (DoF) pose estimation using only a single-stage network; GLAMR globally situates humans in 3D space from videos recorded with dynamic (moving) cameras; and by separating the tasks of feature generation and neural rendering, EG3D can produce high-quality 3D geometry from single images.

Other works of note include GroupViT, FreeSOLO, and ICLR spotlight paper Tackling the Generative Learning Trilemma with Denoising Diffusion GANs

Electrifying new applications of Computer Vision

Computer vision now plays a role in everything from sports and entertainment to construction, to security, to agriculture, and within each of these industries there are far too many companies employing computer vision to count. This section highlights some of the key developments in some of the industries where computer vision is becoming deeply embedded.

Sports

Computer vision featured on the biggest of stages when FIFA employed a semi-automated system for offsides detection at the World Cup in Qatar. They also used computer vision to prevent stampedes at the stadium.

Other noteworthy developments include Sportsbox AI raising a $5.5M Series A led by EP Golf Ventures to bring motion tracking to golf (and other sports), and new company Jabbr tailoring computer vision for combat sports, starting with DeepStrike, a model that automatically counts punches and edits boxing videos.

Climate and Conservation

Circular economy startup Greyparrot raised an $11M Series A round for its computer vision-driven waste monitoring system. Carbon marketplace NCX, which uses cutting edge computer vision models with satellite imagery to deliver precision assessment of timber and carbon potential, raised a $50M Series B. And Microsoft announced the Microsoft Climate Research Initiative (MCRI), which will house their computer vision for climate efforts in renewable energy mapping, land cover mapping, and glacier mapping.

Autonomous vehicles

2022 was a bit of a mixed bag for the autonomous vehicles industry as a whole, with self-driving car company Argo AI shutting down operations in October, and Ford and Rivian shifting their focus from L4 (highly automated) to L2 (partial) and L3 (conditional) automation. Apple also recently announced that it was scaling back its self-driving efforts, “Project Titan”, and pushing launch back until 2026.

Nevertheless, there were some notable wins for computer vision. Researchers at MIT released the first open-source, photorealistic simulator for autonomous driving. Driver-assist unit Mobileye raised an $861M IPO after spinning out of Intel. Google acquired spatial AI and mobility startup Phiar. And Waymo launched an autonomous vehicle service in downtown Phoenix.

Health and Medicine

In Australia, engineers devised a promising no-contact computer vision-based approach for blood pressure detection, which may offer an alternative to the traditional inflatable cuffs. Additionally Google began licensing its computer vision based breast cancer detection tool to cancer detection and therapy provider iCAD.

Prominent Computer Vision papers you can’t pass up

- Tackling the generative learning trilemma with denoising diffusion GANs

- Understanding dimensional collapse in contrastive self-supervised learning

- InternImage: Exploring large-scale vision foundation models with deformable convolutions

- YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

CV tooling startups grow in size and impact

- Annotation startup Labelbox raised a $110 Million Series D

- V7 raised a $33M Series A to help teams build robust AI

- Roboflow released Roboflow 100, a new object detection benchmark

- Voxel51 raised a $12.5M Series A to help bring clarity and transparency to the world’s data

Conclusion

2022 was extremely lively for machine learning, and especially so for computer vision. The crazy thing is the rapid pace of development in research, growth in number practitioners, and adoption in industry appear to be accelerating. Let’s see what 2023 has in store!

FiftyOne Computer Vision toolset

FiftyOne is an open source machine learning toolset developed by Voxel51 that enables data science teams to improve the performance of their computer vision models by helping them curate high quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster.

- If you like what you see on GitHub, give the project a star.

- Get started! We’ve made it easy to get up and running in a few minutes.

- Join the FiftyOne Slack community, we’re always happy to help.