How computer vision helped James Cameron wade into uncharted waters

Here at Voxel51 (the company behind the open source FiftyOne computer vision toolset) we’re computer vision practitioners and enthusiasts. As such, we’re always on the lookout for new applications of computer vision. Recently, Avatar: The Way of Water grabbed our attention for its stunning visuals and underwater cinematography. In this article, I’ll summarize some of the most interesting computer vision techniques the film employed to create such an immersive experience.

After almost 13 years, James Cameron’s long-awaited sequel to Avatar finally arrived on December 16, 2022, and it quickly became one of the highest-grossing films of all time.

The long gap between installments was due in large part to the technical challenges Cameron and the Avatar team set out to solve, chief among them the difficulty of creating realistic scenes in water.

Overcoming these hurdles involved the creation of new hardware, the development of novel machine learning algorithms, and humans performing physical feats they never thought possible. Computationally, the film required 18.5 petabytes of data, weeks of simulation for individual scenes, and millions of CPU hours to render the graphics [1].

Here are some of the ways Avatar: The Way of Water leveraged computer vision.

Stereoscopic fusion goes submersive

When one source of data is limited, why not use several? This is the idea behind sensor fusion, a computer vision technique that combines the strengths of multiple modalities or sources of data. Autonomous vehicles fuse lidar, radar, and camera data, and medical imaging applications can combine the results of multiple scanning technologies, including CT, PET, MRI, and ultrasound.
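One of the simplest forms of sensor fusion combines two noisy estimates of the same quantity, weighting each by how much it can be trusted. The sketch below uses inverse-variance weighting, a standard textbook rule; the helper name `fuse_measurements` and the example numbers are hypothetical and not drawn from any system mentioned in this article.

```python
def fuse_measurements(x1: float, var1: float, x2: float, var2: float) -> tuple[float, float]:
    """Fuse two independent, unbiased estimates of the same quantity (hypothetical helper).

    Each estimate is weighted by the inverse of its variance, so the more
    reliable source contributes more. Returns (fused_estimate, fused_variance).
    """
    if var1 <= 0 or var2 <= 0:
        raise ValueError("variances must be positive")
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * x1 + w2 * x2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)  # never larger than either input variance
    return fused, fused_var


# Hypothetical example: a low-noise lidar range and a noisier camera-based range (metres)
print(fuse_measurements(10.0, 0.01, 10.5, 0.25))
```

The fused variance is always smaller than either input variance, which is a formal way of saying that the combination is stronger than each source alone. A Kalman filter's measurement update applies the same weighting idea repeatedly over time.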

For the original Avatar, James Cameron and Vince Pace developed a two-camera rig called the Reality Camera System 1, which applied sensor fusion toward a different end: stereoscopic vision. When we look at the world, our brains combine what the left eye sees with what the right eye sees. Our eyes can point in different directions and focus to varying extents, and the brain merges this information in real time into a three-dimensional representation of our surroundings.

With this fusion camera system, Cameron and Pace set out to bring the illusion of depth to the big screen. The pair designed a rig with two cameras mounted inches apart but controllable independently. Unlike our eyes, which sit side by side, the Reality Camera System 1 mounted one camera horizontally and the other vertically, giving the filmmakers control over how much depth the audience would perceive. This invention contributed significantly to Avatar’s distinctive look in 3D.

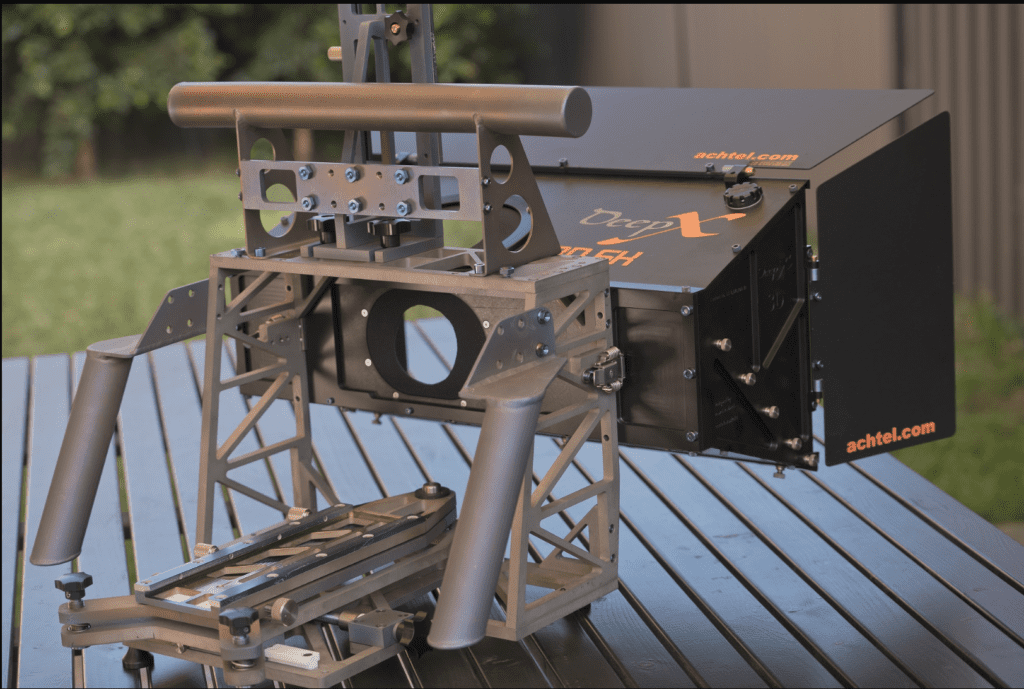

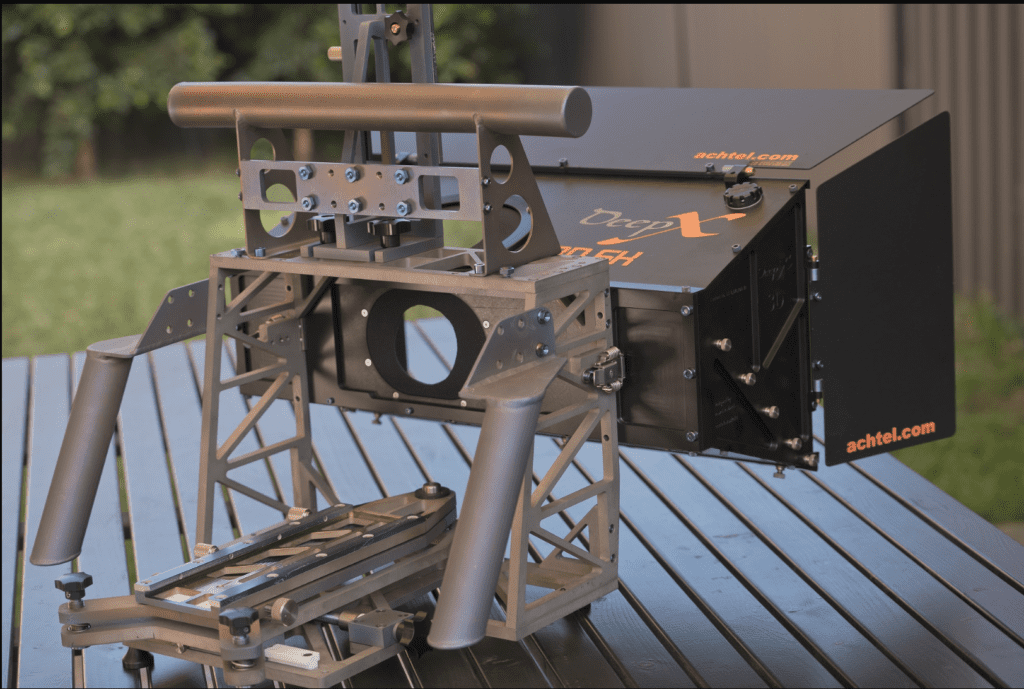
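To make the geometry concrete: in an idealized, rectified stereo pair (two parallel cameras separated by a baseline B, each with focal length f in pixels), a point that appears shifted by a disparity of d pixels between the left and right images sits at depth Z = f·B/d. The sketch below applies that textbook relation. The rig parameters are hypothetical, and this is general stereo geometry rather than a description of how the Reality Camera System 1 was set up.

```python
import numpy as np

def depth_from_disparity(disparity_px, focal_px: float, baseline_m: float):
    """Depth in metres for each disparity in pixels, using Z = f * B / d.

    Zero or negative disparities (no match, or a point at infinity) map to np.inf.
    """
    d = np.asarray(disparity_px, dtype=float)
    depth = np.full_like(d, np.inf)
    valid = d > 0
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth

def disparity_from_depth(depth_m, focal_px: float, baseline_m: float):
    """Inverse relation d = f * B / Z: how far apart a point appears in the two views."""
    z = np.asarray(depth_m, dtype=float)
    return focal_px * baseline_m / z

# Hypothetical rig: 1000 px focal length, lenses 6.5 cm apart (roughly human eye spacing)
print(depth_from_disparity([65.0, 13.0, 0.0], focal_px=1000.0, baseline_m=0.065))
# Doubling the baseline doubles the disparity of an object at the same depth
print(disparity_from_depth(2.0, 1000.0, 0.065), disparity_from_depth(2.0, 1000.0, 0.13))
```

Because disparity grows with the baseline, changing the separation between the two lenses changes how strongly depth separates objects on screen. That is one way to think about the control over perceived depth that a two-camera rig offers.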

To take this technology underwater, Cameron replaced the Reality Camera System 1 with a new fusion system that cinematographer Pawel Achtel designed specifically for Avatar: The Way of Water and its sequels. Called Deep X 3D, the new system uses submersible lenses, doing away with the housing traditionally placed around underwater cameras. In so doing, Cameron and Achtel eliminated the distortions such housings introduce into underwater stereoscopic footage.

For scenes that took place both above and below the surface, the production crew had to take fusion a step further:

“What we essentially wound up with was a volume…for underwater and a separate volume for the air. Those two volumes had to sit right on top of one another with only an inch in between…It was two completely separate methods of capture being fused together”

James Cameron [4]

Pushing performance capture to the limit

Over the past few decades, the film, sports, and video game industries have adopted motion capture, or “mocap”: technologies for recording the movements of people and objects. The goals of motion capture closely resemble those of standard computer vision tracking tasks like pose estimation and activity recognition. However, when used to animate digital characters in CGI scenes, motion capture typically requires actors to wear full-body suits with keypoint markers that are tracked by stationary cameras. That may change, as researchers are working toward markerless pose estimation [5, 6], but these techniques aren’t production-ready just yet.

For the first Avatar film, the production crew created a motion capture “stage” on which the actors donned their suits and delivered their performances. The vast stage was surrounded by 120 stationary cameras, recording the actors from all angles.
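With many calibrated cameras watching the same marker, its 3D position can be recovered by triangulation: each camera's 2D detection constrains the marker to a ray, and a least-squares solution finds the point that best agrees with all the rays. The sketch below uses the standard direct linear transform (DLT). The synthetic ring of cameras, the marker position, and the helper names are hypothetical; the sources do not describe the Avatar capture pipeline at this level of detail.

```python
import numpy as np

def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project 3D point X (shape (3,)) through 3x4 camera matrix P to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(Ps, pts2d) -> np.ndarray:
    """Linear (DLT) triangulation of one marker observed by several calibrated cameras.

    Each view adds two rows to the homogeneous system A @ X = 0; the least-squares
    solution is the right singular vector with the smallest singular value.
    """
    rows = []
    for P, (u, v) in zip(Ps, pts2d):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.stack(rows))
    X = vt[-1]
    return X[:3] / X[3]  # back from homogeneous to Euclidean coordinates

def ring_cameras(n: int = 8, radius: float = 5.0, height: float = 1.5, focal: float = 800.0):
    """Hypothetical rig: n pinhole cameras on a circle, all aimed at the origin."""
    K = np.array([[focal, 0.0, 640.0], [0.0, focal, 360.0], [0.0, 0.0, 1.0]])
    up = np.array([0.0, 0.0, 1.0])
    cams = []
    for theta in np.linspace(0.0, 2 * np.pi, n, endpoint=False):
        c = np.array([radius * np.cos(theta), radius * np.sin(theta), height])
        fwd = -c / np.linalg.norm(c)            # optical axis points at the origin
        right = np.cross(fwd, up)
        right /= np.linalg.norm(right)
        down = np.cross(fwd, right)             # image y axis points down (OpenCV-style)
        R = np.stack([right, down, fwd])        # world-to-camera rotation
        cams.append(K @ np.hstack([R, (-R @ c)[:, None]]))
    return cams

cams = ring_cameras()
marker = np.array([0.3, -0.2, 1.1])             # hypothetical ground-truth marker position (metres)
rng = np.random.default_rng(0)
# Simulated detections with about half a pixel of noise per camera
detections = [project(P, marker) + rng.normal(scale=0.5, size=2) for P in cams]
print(triangulate(cams, detections))            # should land close to `marker`
```

Adding cameras adds rows to the system, so each extra viewpoint both averages out detection noise and reduces the chance that a marker is hidden from every camera at once.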

When motion capture involves the recording of more subtle features such as hand gestures and facial expressions, it is often referred to as performance capture. In Avatar, cameras mere inches from the actors’ faces captured the detailed data used to render the faces of their Na’vi avatars.

Water complicates performance capture in several ways. First, the water’s surface reflects light, creating the illusion of additional markers. Second, air bubbles in the water create unwanted disturbances, notably the bubbles that actors exhale when breathing through scuba gear. That gear also blocks the cameras’ view of the actors’ facial expressions.

To solve the first of these problems, the crew covered the surface of the filming tank with floating white balls, which kept light from reflecting off the surface and confusing the capture cameras.

To address the other two complications, the entire cast received world-class freediving training, learning to hold their breath for long periods underwater. Kate Winslet set the cast record, staying under for seven minutes before coming up for air.

The result was a nearly perfect three-dimensional performance capture stage. Using a computer vision system he calls the Virtual Camera, Cameron could map the actors’ motion onto the computer-generated scene in real time:

“I could see everybody where they’re supposed to be, above or below the water…they were acting to real-time direction based on what I was seeing on the Virtual Camera”

James Cameron [4]

Moving the audience with motion grading

At a movie theater, 24 images typically flash on the screen each second. The rate of 24 frames per second (fps) became standard for practical and economic reasons: 24fps is widely believed to be the lowest frame rate that still puts the smooth “motion” in “motion pictures”, and film stock wasn’t cheap. The frame rate represented a compromise between cost and quality [8]. Why precisely 24fps became the standard, rather than 23, 25, or some other number, likely comes down to luck and entrenchment (and 24 being a neatly divisible number)!

While this “gold” standard generally works well for live-action footage, 24fps can still feel choppy for computer-generated graphics. In live-action footage, natural noise helps the eye gloss over gaps in motion, but no such noise exists in the pixel-perfect frames of a CGI scene.

To give Avatar: The Way of Water its smooth, photorealistic look, James Cameron insisted that many scenes, including all of the underwater scenes, be shot at a high frame rate (HFR) of 48fps. But committing to HFR presented a new set of challenges.

First and foremost, not all scenes in the film were shot at 48fps, so the film switches between frame rates. Additionally, different screens, from televisions to movie theaters, support different standards. Broadcast television in North America, for instance, plays at about 30fps. Converting between these rates often involves motion interpolation, which can create a ‘soap-opera effect’: motion looks unnaturally smooth, and our eyes search in vain for the blurring that normally accompanies objects in motion.
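A physical camera integrates light over its exposure time, so fast-moving objects smear across the frame; a renderer computes a single instant, so CGI frames are perfectly sharp unless blur is added deliberately. One common way to approximate camera blur is to average several sub-frame renders spread across the shutter interval, sketched below. The one-dimensional "renderer" of a moving dot and all parameter names are hypothetical, and this illustrates the general idea rather than how Avatar: The Way of Water or TrueCut Motion handles blur.

```python
import numpy as np

def render_dot(position: float, width: int = 64) -> np.ndarray:
    """Hypothetical 'renderer': a 1D image with a single lit pixel at `position`."""
    img = np.zeros(width)
    img[int(round(position)) % width] = 1.0
    return img

def render_with_motion_blur(x0: float, speed_px_per_s: float, fps: float,
                            shutter_fraction: float = 0.5, samples: int = 16,
                            width: int = 64) -> np.ndarray:
    """Average `samples` instantaneous renders spread across one exposure.

    shutter_fraction=0.5 corresponds to a 180-degree shutter: the exposure lasts
    half of each frame interval (1/48 s at 24fps).
    """
    exposure = shutter_fraction / fps
    times = (np.arange(samples) + 0.5) / samples * exposure  # sub-frame sample times
    frames = [render_dot(x0 + speed_px_per_s * t, width) for t in times]
    return np.mean(frames, axis=0)

sharp = render_with_motion_blur(10.0, 480.0, fps=24, shutter_fraction=0.0)  # instantaneous: no blur
blurred_24 = render_with_motion_blur(10.0, 480.0, fps=24)
blurred_48 = render_with_motion_blur(10.0, 480.0, fps=48)
# At the same shutter angle, 48fps frames expose for half as long, so the smear is roughly half as wide
print(np.count_nonzero(sharp), np.count_nonzero(blurred_24), np.count_nonzero(blurred_48))  # → 1 11 6
```

The shorter smear at 48fps is one way to see why HFR footage can look crisper, and to some viewers more video-like, than the same motion at 24fps.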

To handle in-film changes in frame rate and combat the soap-opera effect, Avatar: The Way of Water uses TrueCut Motion by Pixelworks, a suite of computer vision algorithms that controls motion blur and judder, the apparent shaking or vibrating of images in motion. TrueCut Motion makes it possible to convert from 24fps to virtually any frame rate while preserving the desired cinematic look. In effect, it is a cinematic solution to frame interpolation.
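TrueCut Motion's algorithms are proprietary, so the sketch below does not reproduce them. It only illustrates two naive baselines that such tools improve on, assuming frames are stored as a NumPy array: repeating the most recent source frame, which for 24fps to 30fps shows some frames twice and others once (an uneven cadence perceived as judder), and linearly cross-fading between neighbouring frames, which yields ghosted double images rather than true in-between motion. The function names are hypothetical.

```python
import numpy as np

def source_positions(src_fps: float, dst_fps: float, n_out: int) -> np.ndarray:
    """Timestamp of each output frame, measured in (fractional) source frames."""
    return np.arange(n_out) * src_fps / dst_fps

def convert_by_repetition(frames: np.ndarray, src_fps: float, dst_fps: float, n_out: int):
    """Hypothetical helper: show the most recent source frame at each output tick."""
    idx = np.floor(source_positions(src_fps, dst_fps, n_out)).astype(int)
    idx = np.clip(idx, 0, len(frames) - 1)
    return frames[idx], idx

def convert_by_blending(frames: np.ndarray, src_fps: float, dst_fps: float, n_out: int):
    """Hypothetical helper: cross-fade the two source frames around each output tick."""
    pos = source_positions(src_fps, dst_fps, n_out)
    lo = np.clip(np.floor(pos).astype(int), 0, len(frames) - 1)
    hi = np.clip(lo + 1, 0, len(frames) - 1)
    # Blend weight, reshaped so it broadcasts over any per-frame image dimensions
    w = (pos - np.floor(pos)).reshape(-1, *([1] * (frames.ndim - 1)))
    return (1.0 - w) * frames[lo] + w * frames[hi]

frames = np.arange(8, dtype=float)  # stand-in "video": frame i has brightness i
_, idx = convert_by_repetition(frames, 24, 30, 10)
print(idx)  # → [0 0 1 2 3 4 4 5 6 7]  (one source frame in every four is shown twice)
print(convert_by_repetition(frames, 48, 24, 4)[1])  # 48 -> 24: every other frame dropped
print(convert_by_blending(frames, 24, 48, 6))  # 24 -> 48: in-between frames are 50/50 blends
```

Motion-compensated interpolation replaces the fixed cross-fade with pixels moved along estimated motion vectors, which is where computer vision techniques such as optical flow come in.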

Conclusion

Avatar: The Way of Water is as much a technical feat as it is a work of art. Computer vision was integral to its creation, from facilitating underwater cinematography to enabling real-time performance capture and visualization to overcoming the high-frame-rate soap-opera effect in post-production. Computer vision is likely to play an even bigger role in film and media in the coming years.

If you want to better understand stereoscopic vision, check out the KITTI 3D benchmark dataset, which contains left and right stereoscopic images from thousands of outdoor scenes, taken with closely positioned cameras. The open source library FiftyOne makes it easy to download a subset of this dataset and get started working with the data.

To learn more about how computer vision is affecting film and entertainment, along with other industries like sports, retail, autonomous vehicles, and healthcare, look out for our Industry Spotlight series, launching later this month!

Wait, what’s FiftyOne?

FiftyOne is an open source machine learning toolset that enables data science teams to improve the performance of their computer vision models by helping them curate high quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster.

- If you like what you see on GitHub, give the project a star.

- Get started! We’ve made it easy to get up and running in a few minutes.

- Join the FiftyOne Slack community; we’re always happy to help.

Join the FiftyOne community!

Join the thousands of engineers and data scientists already using FiftyOne to solve some of the most challenging problems in computer vision today!

- 1,275+ FiftyOne Slack members

- 2,400+ stars on GitHub

- 2,600+ Meetup members

- Used by 228+ repositories

- 55+ contributors

References

[1] How ‘Avatar: The Way of Water’ Solved the Problem of Computer-Generated H2O

[2] This is the Camera That Shot Avatar 2

[3] Deep X 3D

[4] How Avatar: the Way of Water Revolutionizes Underwater Cinematography

[5] 3D Human Pose Estimation = 2D Pose Estimation + Matching

[6] MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation

[7] Making of Avatar & Avatar 2: Behind-the-Scenes of James Cameron’s Epic

[8] Why 24 frames per second is still the gold standard for film