Automatically find the best deployment threshold using FiftyOne Plugins

Deploying detection models is tough. There are so many factors to consider, such as inference speed, camera lenses, which model to choose, and more. But one issue arises without fail at the end: What in the world do I set my confidence threshold to?

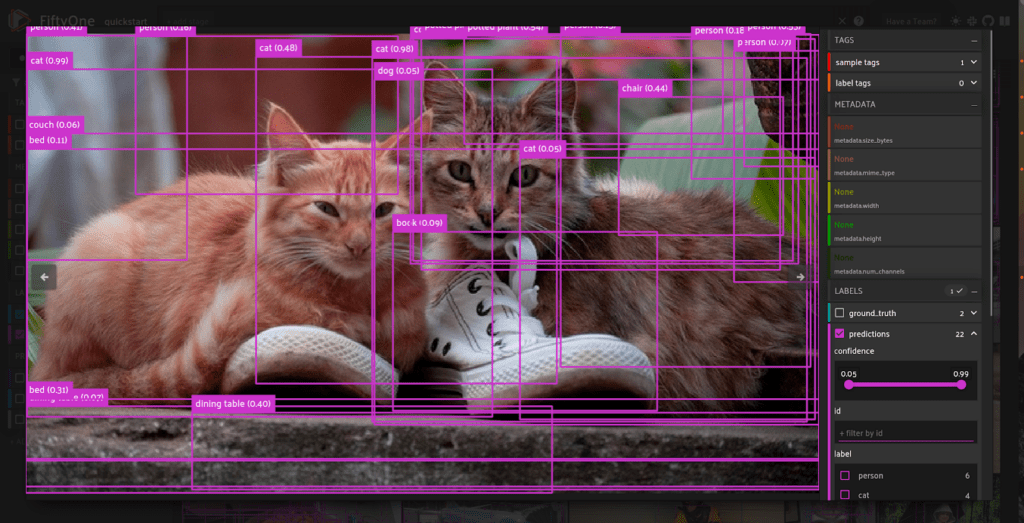

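For those unfamiliar: detection models assign a confidence score to every box they predict, and we need to set a threshold that decides which boxes to keep. Boxes scoring below the threshold are discarded. This is what keeps a deployed model from predicting boxes like this: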

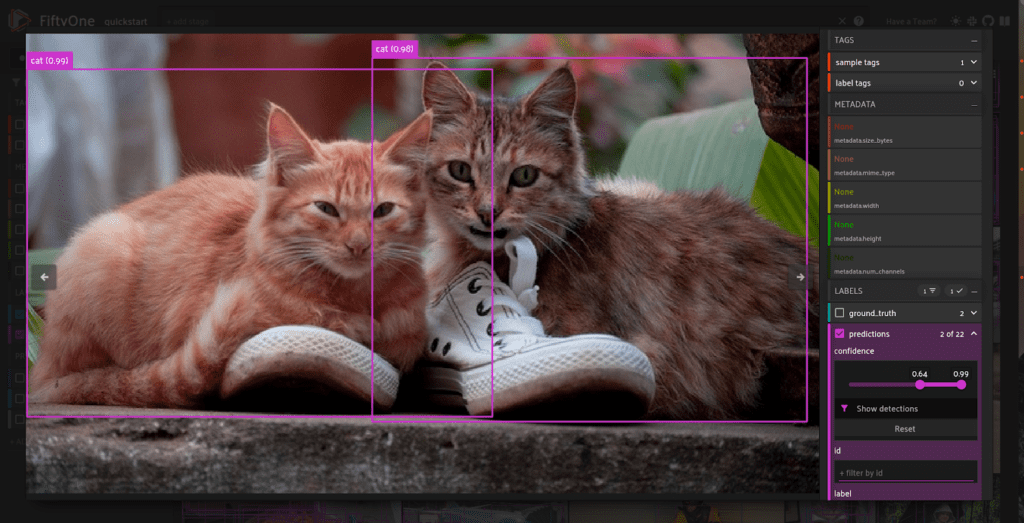

Instead, it predicts boxes more like this:

Confidence thresholds help prevent potentially harmful false positives in our deployed models. Without one, systems such as self-driving cars, security systems, and safety monitors could make grave mistakes.
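In code, applying a confidence threshold is just a filter over the predicted boxes. Here is a minimal sketch, assuming a hypothetical format where each detection is a dict with a `confidence` key (real frameworks, including FiftyOne, use their own label types):

```python
# Hypothetical detection format: one dict per predicted box.
detections = [
    {"label": "person", "confidence": 0.92},
    {"label": "person", "confidence": 0.41},
    {"label": "dog", "confidence": 0.07},
]


def apply_confidence_threshold(detections, threshold):
    """Drop every box whose confidence is below `threshold`."""
    return [d for d in detections if d["confidence"] >= threshold]


kept = apply_confidence_threshold(detections, threshold=0.5)
print([d["label"] for d in kept])  # ['person']
```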

The mAP Problem

One of the difficulties of developing a detection model is that a well-chosen confidence threshold and a model’s highest achievable mAP score do not have the same interests in mind. mAP, or mean Average Precision, is computed by checking whether each predicted box overlaps a ground truth box well enough to count as a match (typically via an IoU threshold), ranking the predictions by confidence to build a precision-recall curve, and averaging the area under that curve across classes. The issue is that mAP barely cares how many boxes are predicted. Low-confidence boxes only add points at the tail of the precision-recall curve, so as long as one of your boxes matches each object, piling on extra guesses costs little and you still have a highly “accurate” model. This has led to the common practice of dropping the confidence threshold to zero when measuring a model’s accuracy.

While this is great in some cases, such as picking the best model on a benchmark dataset, you can begin to see how it is a little backwards when it comes time to deploy your model. What comes next is a Goldilocks problem: keeping as many boxes as possible in the hope that the correct one is there, while also culling poor boxes below the threshold to remove false positives.
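To make the mismatch concrete, here is a toy example with made-up detections for a single class, assuming the matching of boxes to ground truth has already been done. It computes AP with all-point interpolation (a VOC-style precision envelope; COCO samples the same envelope at 101 recall points and also averages over IoU thresholds) and compares it with the F1 score, the harmonic mean of precision and recall, when every box is kept. The function names are our own.

```python
# Toy illustration with made-up data: low-confidence false positives
# leave AP unchanged but lower F1 at a zero confidence threshold.

def average_precision(is_tp, num_gt):
    """All-point interpolated AP for one class.

    `is_tp` holds one bool per prediction, sorted from highest to lowest
    confidence; True means the box matched a ground truth object.
    """
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_tp:
        tp += hit
        fp += not hit
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: each point takes the best precision at any higher recall
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the curve: precision times each step in recall
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def f1_keep_all(is_tp, num_gt):
    """F1 when every prediction is kept, i.e. a confidence threshold of zero."""
    tp = sum(is_tp)
    fp = len(is_tp) - tp
    fn = num_gt - tp
    return 2 * tp / (2 * tp + fp + fn)


num_gt = 4
ranked = [True, True, False, True]  # 3 of 4 objects found, 1 false positive
with_junk = ranked + [False] * 6    # plus 6 low-confidence false positives

print(average_precision(ranked, num_gt), average_precision(with_junk, num_gt))  # 0.6875 0.6875
print(round(f1_keep_all(ranked, num_gt), 3), round(f1_keep_all(with_junk, num_gt), 3))  # 0.75 0.429
```

Appending six low-confidence false positives leaves AP at 0.6875 but pulls F1 from 0.75 down to about 0.43, which is why zero-threshold mAP is a poor guide for picking a deployment threshold.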

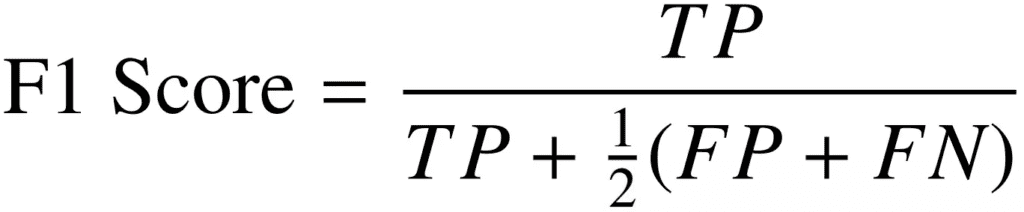

The Optimal Confidence Plugin was made to help you avoid this headache and accelerate your workflows. If mAP is not going to serve as the evaluation metric for deployment, we can switch to the F1 score instead. The F1 score is the harmonic mean of precision and recall, and it is computed from three counts: true positives, the boxes we predicted correctly; false positives, the boxes we predicted that do not match any real object; and false negatives, the real objects we missed. Unlike mAP, F1 is computed at a single confidence threshold, so every extra false positive we keep lowers it. This balanced metric is used widely in computer vision, and in machine learning as a whole, to summarize a model’s performance.
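Concretely, the three counts combine as in this small helper (the function name is our own, not part of FiftyOne or the plugin):

```python
def detection_scores(tp, fp, fn):
    """Precision, recall, and F1 from true positive, false positive, and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall; equivalent to 2*tp / (2*tp + fp + fn)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Example: 8 correct boxes, 2 spurious boxes, 4 missed objects
p, r, f1 = detection_scores(tp=8, fp=2, fn=4)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")  # precision=0.800 recall=0.667 f1=0.727
```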

With FiftyOne’s `evaluate_detections()`, we can count all the true positives, false positives, and false negatives in our dataset at any given confidence threshold!
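As a sketch of how those counts can be gathered for one threshold, the function below filters the predictions with `filter_labels()`, evaluates the filtered view, and sums the per-sample `<eval_key>_tp`, `<eval_key>_fp`, and `<eval_key>_fn` fields that `evaluate_detections()` populates when an `eval_key` is given. It assumes the quickstart field names `predictions` and `ground_truth`; the helper name `count_matches` and the key `eval_tmp` are hypothetical, and this is not the plugin’s own code. The filter is written as a raw MongoDB expression, which `filter_labels()` accepts in place of the more common `F("confidence") >= threshold`.

```python
# A minimal sketch, not the plugin's implementation. Assumes a FiftyOne
# dataset or view with Detections fields "predictions" (with confidences)
# and "ground_truth", as in the quickstart dataset.

def count_matches(samples, threshold, pred_field="predictions",
                  gt_field="ground_truth", eval_key="eval_tmp"):
    """Return (tp, fp, fn) after dropping predictions below `threshold`."""
    # Keep only predicted boxes with confidence >= threshold; this raw
    # MongoDB expression is equivalent to F("confidence") >= threshold
    view = samples.filter_labels(
        pred_field,
        {"$gte": ["$$this.confidence", threshold]},
        only_matches=False,  # keep samples even if all of their boxes are dropped
    )
    # Match predictions to ground truth (IoU 0.5 by default) and store
    # per-sample counts in <eval_key>_tp, <eval_key>_fp, <eval_key>_fn
    view.evaluate_detections(pred_field, gt_field=gt_field, eval_key=eval_key)
    tp = view.sum(f"{eval_key}_tp")
    fp = view.sum(f"{eval_key}_fp")
    fn = view.sum(f"{eval_key}_fn")
    # Remove the temporary evaluation so the next threshold can reuse the key
    view.delete_evaluation(eval_key)
    return tp, fp, fn
```

Calling `count_matches(dataset, 0.5)` on the quickstart dataset would return the summed (tp, fp, fn) at a threshold of 0.5. Deleting the evaluation at the end keeps repeated calls from colliding on the same key.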

Installation

To try the plugin, start by installing FiftyOne and the plugin in your environment. Both can be done in your terminal with the following:

```shell
pip install fiftyone
fiftyone plugins download https://github.com/danielgural/optimal_confidence_threshold
```

We can verify the installation of our new plugin with:

```shell
fiftyone plugins list
```

Finding the Optimal Confidence Threshold

Once installed, we can kick open the FiftyOne app with the dataset of our choice. If you need help loading your dataset, check out the documentation on how to get started. We will be using the quickstart dataset for our example. We can get started with:

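```python
import fiftyone as fo
import fiftyone.zoo as foz

# Load the quickstart dataset, which has "ground_truth" and "predictions" fields
dataset = foz.load_zoo_dataset("quickstart")

# Open the dataset in the FiftyOne App
session = fo.launch_app(dataset)
```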

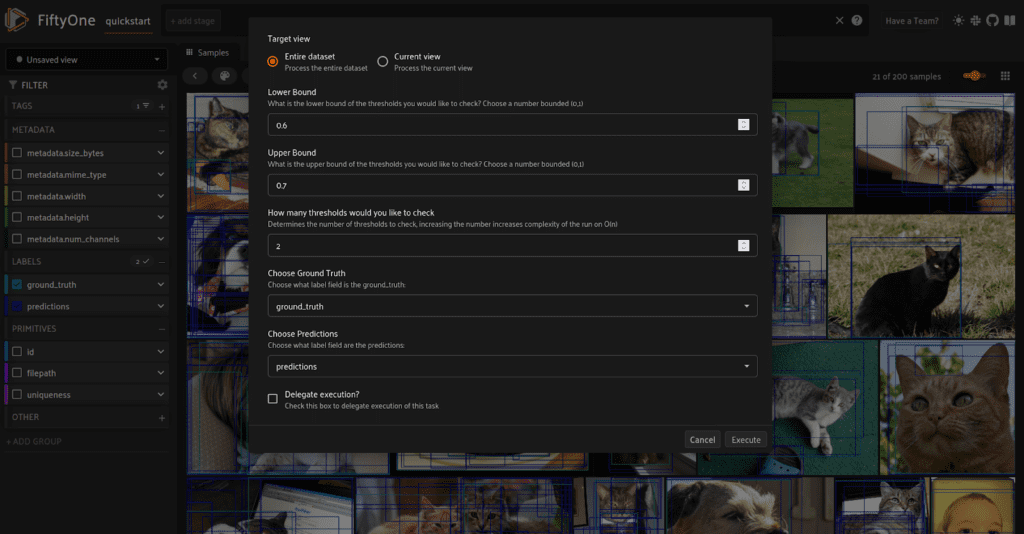

Once you are in the App, press the backtick key (`` ` ``) or click the browse operations button to open the operators list. Search for the `optimal_confidence` operator and you will see the following menu.

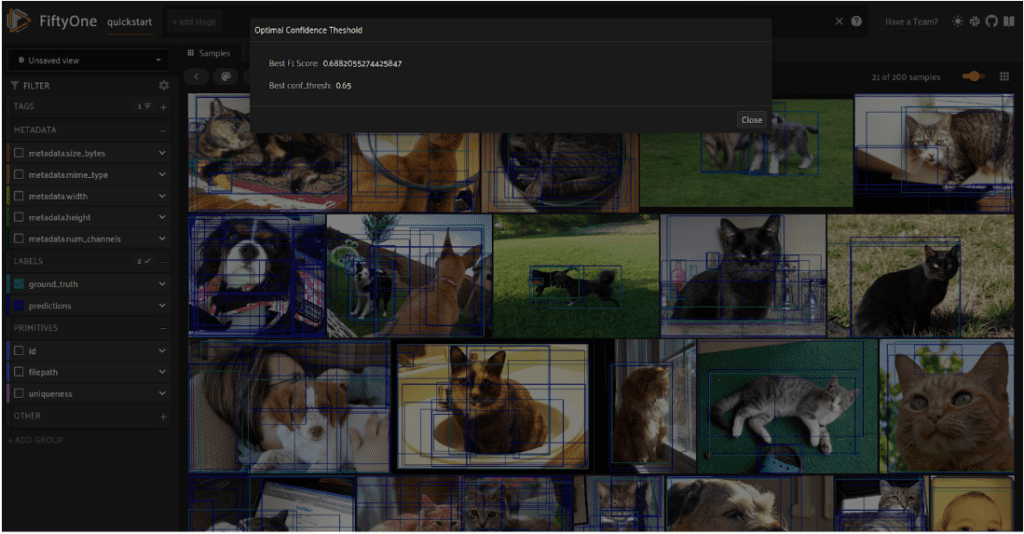

From here, you can configure how to search for your threshold: choose the bounds to search between and how many steps to check along the way. Then select which label field on your dataset holds the ground truth and which holds your model’s predictions. The plugin then scans the thresholds in that range and picks the one that maximizes the F1 score. Once the run concludes, the plugin outputs both the optimal confidence threshold it found and the F1 score it achieved.
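The search described above (pick bounds, pick a number of steps, keep the threshold with the highest F1) can be sketched in plain Python. This is an illustration under our own assumptions, not the plugin’s source: `find_optimal_threshold` and `count_fn` are hypothetical names, thresholds are evenly spaced and include both bounds, and ties go to the lower threshold. In a real workflow, `count_fn` could wrap a FiftyOne evaluation that filters predictions by confidence and runs `evaluate_detections()`; here it runs on made-up toy data.

```python
# A sketch of a threshold sweep, not the plugin's source. `count_fn` is any
# callable that returns (tp, fp, fn) for a given confidence threshold.

def f1_from_counts(tp, fp, fn):
    """F1 = 2*TP / (2*TP + FP + FN); 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def find_optimal_threshold(count_fn, low=0.0, high=1.0, steps=11):
    """Check `steps` evenly spaced thresholds in [low, high] and return the
    (threshold, f1) pair with the highest F1."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    best_threshold, best_f1 = low, -1.0
    for i in range(steps):
        threshold = low + (high - low) * i / (steps - 1)
        f1 = f1_from_counts(*count_fn(threshold))
        if f1 > best_f1:  # strict comparison: ties keep the lower threshold
            best_threshold, best_f1 = threshold, f1
    return best_threshold, best_f1


# Toy data (made up): (confidence, matched a ground truth box?) per prediction
predictions = [(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.2, False), (0.1, False)]
num_gt = 4


def toy_counts(threshold):
    kept = [hit for conf, hit in predictions if conf >= threshold]
    tp = sum(kept)
    return tp, len(kept) - tp, num_gt - tp


best_t, best_f1 = find_optimal_threshold(toy_counts, low=0.0, high=1.0, steps=11)
print(f"threshold={best_t:.2f} f1={best_f1:.3f}")  # threshold=0.30 f1=0.750
```

A finer grid (more steps) can only find an equal or better F1 on the same data, at the cost of one evaluation per step.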

Just like that, we have found the confidence threshold that maximizes F1 on our data, balancing false positives against missed detections for deployment! All with just a couple of inputs to the Optimal Confidence Threshold plugin.

Conclusion

Don’t waste time trying to find the best confidence threshold yourself. Leverage the power of FiftyOne plugins and upgrade your workflows today! If you are interested in finding more FiftyOne plugins, check out our community repo to optimize your workflows with plugins or contribute one of your own! Plugins are highly flexible and open source, so you can customize them exactly to your needs! Have fun exploring!