Welcome to our weekly FiftyOne tips and tricks blog where we recap interesting questions and answers that have recently popped up on Slack, GitHub, Stack Overflow, and Reddit.

Wait, what’s FiftyOne?

FiftyOne is an open source machine learning toolset that enables data science teams to improve the performance of their computer vision models by helping them curate high quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster.

- If you like what you see on GitHub, give the project a star.

- Get started! We’ve made it easy to get up and running in a few minutes.

- Join the FiftyOne Slack community. We’re always happy to help.

Ok, let’s dive into this week’s tips and tricks!

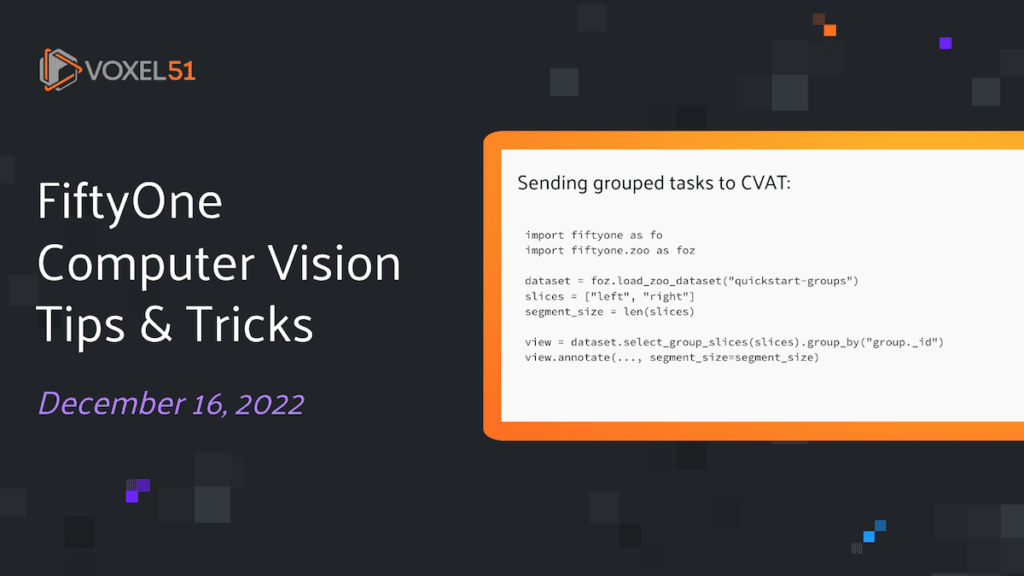

Sending grouped tasks to CVAT

Community Slack member Daniel Fortunato asked,

“I am using FiftyOne to manage a grouped dataset with three images per group. I want to send these images to CVAT for annotation. How can I import all the images in each group as a single task with multiple jobs, rather than multiple tasks with one job each?”

If each group slice has the same media type, e.g. images, then you can do this by selecting the desired group slices with select_group_slices() and grouping by the group ID with group_by(). Calling select_group_slices() returns a collection of images (or, more generally, a view whose media_type attribute matches that of the selected group slices, rather than media_type = “group”). The subsequent group_by() operation rearranges the resulting DatasetView so that samples from the same group are located at neighboring indices.

Once this view has been created, it can be sent to CVAT using the annotate() method, with the segment_size argument set to the number of images per job, which here is the number of selected group slices.
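
To see why the ordering produced by group_by() matters, here is a minimal pure-Python sketch. It does not call FiftyOne or CVAT: the `chunk_into_jobs` helper and the `(group_id, slice_name)` tuples are hypothetical stand-ins, and it assumes that jobs are formed by cutting the ordered image list into consecutive chunks of at most `segment_size` images.

```python
def chunk_into_jobs(ordered_items, segment_size):
    """Split an ordered list into consecutive chunks of at most segment_size items."""
    return [
        ordered_items[i:i + segment_size]
        for i in range(0, len(ordered_items), segment_size)
    ]

# Hypothetical (group_id, slice_name) pairs, ordered slice by slice
samples = [
    ("g1", "left"), ("g2", "left"), ("g3", "left"),
    ("g1", "right"), ("g2", "right"), ("g3", "right"),
]
slices = ["left", "right"]

# Stand-in for group_by(): put samples with the same group id next to each other.
# sorted() is stable, so the slice order within each group is preserved.
grouped = sorted(samples, key=lambda s: s[0])

jobs = chunk_into_jobs(grouped, segment_size=len(slices))
for job in jobs:
    print(job)
```

In this sketch each chunk contains exactly one group because every group has all of the selected slices. If some groups were missing a slice, consecutive chunks would start to straddle group boundaries.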

Putting it all together, we can see what this would look like for the Quickstart Groups dataset:

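    import fiftyone as fo
    import fiftyone.zoo as foz

    dataset = foz.load_zoo_dataset("quickstart-groups")

    # Select the slices to annotate; one image per slice goes into each job
    slices = ["left", "right"]
    segment_size = len(slices)

    # Flatten the selected slices into an image view, then place samples
    # from the same group next to each other
    view = dataset.select_group_slices(slices).group_by("group._id")

    # The remaining annotate() arguments are elided here
    view.annotate(..., segment_size=segment_size)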

Learn more about FiftyOne’s annotation API and FiftyOne’s integration with CVAT in the FiftyOne Docs.

Linking detections on multiple samples

Community Slack member Marijn Lems asked,

“Is it possible to relate multiple detections on different samples in FiftyOne?”

In computer vision it is often the case that multiple images or media files have objects that are related to each other. For instance, in a dataset containing faces, such as Families in the Wild, there could be multiple images that feature the same person’s face. Alternatively, in a grouped dataset like the KITTI Multiview dataset, an image and a point-cloud could feature different representations of the same object.

One way to associate these detections is by creating a Detection-level metadata field that you populate with a unique identifier for each associated group of detections. Working with the Quickstart Groups dataset, for example, linking a ground truth detection in the “left” and “right” images in the first group can be done as follows:

    import fiftyone as fo
    import fiftyone.zoo as foz

    dataset = foz.load_zoo_dataset("quickstart-groups")

    # Grab the first sample (from the active group slice) and its first ground truth detection
    left_sample = dataset.first()
    left_detection = left_sample.ground_truth.detections[0]

    # Look up the "right" sample that belongs to the same group
    right_sample_id = dataset.get_group(left_sample.group.id)["right"].id
    dataset.group_slice = "right"
    right_sample = dataset[right_sample_id]
    right_detection = right_sample.ground_truth.detections[0]

    # Tie the detections together with a shared identifier
    uuid = 0
    left_detection["detection_uuid"] = uuid
    left_sample.save()
    right_detection["detection_uuid"] = uuid
    right_sample.save()
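
The example above uses a fixed integer as the shared identifier. As a minimal sketch of how such identifiers could be generated and later queried, the following pure-Python snippet uses plain dictionaries as stand-ins for detections. It does not use FiftyOne: `link_detections` and `index_by_link` are hypothetical helpers, and the `detection_uuid` key simply mirrors the field name used above.

```python
import uuid
from collections import defaultdict

def link_detections(*detections):
    """Stamp the given detections with one freshly generated shared identifier."""
    shared_id = str(uuid.uuid4())
    for det in detections:
        det["detection_uuid"] = shared_id
    return shared_id

def index_by_link(detections):
    """Map each shared identifier to the detections that carry it."""
    index = defaultdict(list)
    for det in detections:
        link = det.get("detection_uuid")
        if link is not None:
            index[link].append(det)
    return dict(index)

# Plain dicts standing in for detections on different samples
left_det = {"sample": "left_0", "label": "car"}
right_det = {"sample": "right_0", "label": "car"}
other_det = {"sample": "left_1", "label": "pedestrian"}

shared_id = link_detections(left_det, right_det)
index = index_by_link([left_det, right_det, other_det])
print([d["sample"] for d in index[shared_id]])
```

Generating a fresh identifier per link avoids accidental collisions when many sets of detections are linked over time, which a hand-picked integer cannot guarantee.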

Learn more about object detections in FiftyOne in the FiftyOne Docs.

Merging large datasets into a grouped dataset

Community Slack member Oğuz Hanoğlu asked,

“I have two large image datasets which I want to merge into a grouped dataset according to their values in a certain field. What is the most efficient way to do this?”

When dealing with large datasets, you want to perform as many operations in-database as possible to avoid the long load times typical of reading large datasets into memory.

One way to do this is to create a temporary clone of each source dataset, then populate a Group field on each sample in these “single slice” datasets, and finally merge these datasets into a single grouped dataset. To merge datasets ds_left and ds_right based on their values in field my_field, this would look like:

    import fiftyone as fo

    def group_collections(d, group_field, group_key):
        """Merge the collections in d (slice name -> collection) into one grouped dataset."""
        dataset = fo.Dataset()
        dataset.add_group_field(group_field)

        # One Group per distinct key value found across all collections
        group_keys = set().union(*[set(c.exists(group_key).distinct(group_key)) for c in d.values()])
        groups = {_id: fo.Group() for _id in group_keys}

        for group_slice, sample_collection in d.items():
            _add_slice(dataset, groups, sample_collection, group_field, group_key, group_slice)

        return dataset

    def _add_slice(dataset, groups, sample_collection, group_field, group_key, group_slice):
        # Work on a temporary clone so the source collection is left untouched
        tmp = sample_collection.exists(group_key).clone()
        group_values = [groups[k].element(group_slice) for k in tmp.values(group_key)]

        # Note: _doc is a private attribute, used here to switch the clone's media type
        tmp.add_group_field(group_field, default=group_slice)
        tmp._doc.media_type = sample_collection.media_type
        tmp.set_values(group_field, group_values)
        tmp._doc.group_media_types = {group_slice: sample_collection.media_type}
        tmp._doc.media_type = "group"

        # Add the single-slice clone to the grouped dataset, then discard it
        dataset.add_collection(tmp)
        tmp.delete()

    # ds_left and ds_right are the existing datasets to merge
    dataset = group_collections({"left": ds_left, "right": ds_right}, "group", "my_field")

This approach does require the set_values() method rather than the set_field() method, as the Group instances need to be instantiated in memory in order to guarantee that they have unique IDs. However, for large datasets, this approach is far more efficient than one that iterates through the samples in the datasets to be merged.
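
The core bookkeeping in group_collections() is that every distinct key value gets exactly one group, and samples from different source datasets that share a key value become different slices of that group. A minimal pure-Python sketch of that idea follows, under the assumption that samples are plain dictionaries; `assign_groups` is a hypothetical helper, and `uuid4` strings stand in for the ids of `fo.Group()` instances.

```python
import uuid

def assign_groups(collections, key):
    """Return {slice_name: [(group_id, sample), ...]} with one group id per key value.

    Samples that lack the key are skipped, mirroring the exists() filter.
    """
    # Union of all key values seen across the source collections
    keys = set()
    for samples in collections.values():
        keys.update(s[key] for s in samples if s.get(key) is not None)

    # One shared group id per distinct key value, created up front in memory
    group_ids = {k: str(uuid.uuid4()) for k in keys}

    assigned = {}
    for slice_name, samples in collections.items():
        assigned[slice_name] = [
            (group_ids[s[key]], s) for s in samples if s.get(key) is not None
        ]
    return assigned

left = [
    {"filepath": "l1.png", "my_field": "a"},
    {"filepath": "l2.png", "my_field": "b"},
]
right = [
    {"filepath": "r1.png", "my_field": "b"},
    {"filepath": "r2.png"},  # no key value, so it is left out
]

assigned = assign_groups({"left": left, "right": right}, "my_field")
for slice_name, pairs in assigned.items():
    print(slice_name, [(gid[:8], s["filepath"]) for gid, s in pairs])
```

Here `l2.png` and `r1.png` end up with the same group id because they share the key value "b", while `l1.png` gets a group of its own.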

Learn more about set_field and set_values in the FiftyOne Docs.

Annotating tagged labels from multiple fields

Community Slack member Daniel Bourke asked,

“I want to send a collection of the worst predictions to Label Studio for annotation, including both ground truth and prediction label fields. How do I do this, when the label schema for Label Studio only accepts one field?”

One way to do this is to first combine your incorrect ground truth and predictions into a single label field in FiftyOne, then annotate that field in Label Studio (since there is now just one field to re-annotate).

First, you can clone the “ground_truth” and “predictions” fields into new fields. Then, you can filter on these fields to create a view containing only the samples of interest. Finally, you can use the merge_labels() method to merge the temporary cloned field into the other cloned field:

    # Clone the original fields so they are left untouched
    dataset.clone_sample_field("ground_truth", "combined_field")
    dataset.clone_sample_field("predictions", "preds_to_merge")

    # Filter the cloned ground truth and predictions down to the labels you want to annotate
    # (the filter expressions are elided here)
    dataset.filter_labels("combined_field", ...).save(fields="combined_field")
    dataset.filter_labels("preds_to_merge", ...).save(fields="preds_to_merge")

    # Merge them into one field; "preds_to_merge" will be deleted after this
    dataset.merge_labels("preds_to_merge", "combined_field")

This consolidates your desired information into a single field so that it is suitable for sending to Label Studio. Additionally, it preserves the original fields.
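
As a rough illustration of the data flow in the final step, the pure-Python sketch below merges one list-valued field into another and then drops the source field. It does not use FiftyOne: `merge_label_lists` is a hypothetical helper, samples are plain dictionaries, and the string labels are made up for the example.

```python
def merge_label_lists(samples, in_field, out_field):
    """Append each sample's in_field labels to out_field, then drop in_field."""
    for sample in samples:
        existing = list(sample.get(out_field) or [])
        incoming = list(sample.pop(in_field, None) or [])
        sample[out_field] = existing + incoming

# Plain dicts standing in for samples after the filtering step
samples = [
    {"combined_field": ["cat (gt)"], "preds_to_merge": ["dog (pred)"]},
    {"combined_field": [], "preds_to_merge": ["bird (pred)"]},
]

merge_label_lists(samples, "preds_to_merge", "combined_field")
print(samples)
```

After the merge, each sample carries a single combined field, which is the shape needed when only one label field can be sent for annotation.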

Learn more about merge_labels and FiftyOne’s Label Studio integration in the FiftyOne Docs.

Visualizing point clouds in the FiftyOne App

Community Slack member Marijn Lems asked,

“I have a dataset consisting of point-cloud data and I’d love to visualize this data in the FiftyOne App. How can I do this?”

In FiftyOne, you can define, populate, and perform operations on a point-cloud-only dataset, but you will not be able to visualize it out of the box without an image or other media file associated with each point cloud. This is because point clouds trigger FiftyOne’s 3D visualizer plugin, and it would be quite compute-intensive for the sample grid to run a large number of these 3D visualizers at once.

One solution to this is to create a Grouped Dataset with the point-cloud media as one slice, and to generate an image for each point-cloud, saving these images into another group slice. As an example, you could generate a bird’s eye view image for the point clouds in the collection pcd_samples:

    import cv2
    import numpy as np

    import fiftyone as fo
    import fiftyone.zoo as foz

    def my_birds_eye_view_function(pcd_sample):
        # Placeholder: returns a blank image; replace with a real projection
        return np.zeros((100, 100, 3), dtype=np.uint8)

    ds = foz.load_zoo_dataset("quickstart-groups")
    pcd_samples = ds.select_group_slices("pcd")
    print(pcd_samples)

    dataset = fo.Dataset("my-group-dataset")
    dataset.add_group_field("group", default="pcd")

    group_pcd_samples = []
    group_bev_samples = []

    for pcd_sample in pcd_samples:
        # One group per point cloud, holding a "pcd" slice and a "bev" slice
        group = fo.Group()

        sample = fo.Sample(filepath=pcd_sample.filepath, group=group.element("pcd"))
        group_pcd_samples.append(sample)

        bev_map = my_birds_eye_view_function(pcd_sample)

        # Placeholder path; in practice, use a distinct path for each image
        bev_filepath = "path/to/bev/img.png"
        cv2.imwrite(bev_filepath, bev_map)

        bev_sample = fo.Sample(filepath=bev_filepath)
        bev_sample["group"] = group.element("bev")
        group_bev_samples.append(bev_sample)

    dataset.add_samples(group_pcd_samples)
    dataset.add_samples(group_bev_samples)

    # Set the active group slice to the images so they appear in the sample grid
    dataset.group_slice = "bev"

    session = fo.launch_app(dataset)
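
The my_birds_eye_view_function() placeholder above returns a blank image, and every image is written to the same placeholder path. As a rough sketch of what a fuller version might involve, the pure-Python snippet below rasterizes (x, y, z) points into a top-down occupancy grid and derives a distinct output filename from each point cloud's path. Both helpers (`birds_eye_view` and `bev_path_for`), the grid size, the coordinate ranges, and the example paths are assumptions made for illustration; loading points from a point cloud file is not shown.

```python
import os

def birds_eye_view(points, x_range=(-50.0, 50.0), y_range=(-50.0, 50.0), size=100):
    """Rasterize (x, y, z) points into a size x size grid of 0/255 occupancy values."""
    grid = [[0] * size for _ in range(size)]
    x_min, x_max = x_range
    y_min, y_max = y_range
    for x, y, _z in points:
        if not (x_min <= x < x_max and y_min <= y < y_max):
            continue  # ignore points outside the region of interest
        col = int((x - x_min) / (x_max - x_min) * size)
        row = int((y_max - y) / (y_max - y_min) * size)  # flip so +y points up
        # Clamp to guard against landing exactly on the far edge
        grid[min(row, size - 1)][min(col, size - 1)] = 255
    return grid

def bev_path_for(pcd_filepath, out_dir):
    """Build an image path in out_dir that reuses the point cloud's base filename."""
    stem = os.path.splitext(os.path.basename(pcd_filepath))[0]
    return os.path.join(out_dir, stem + ".png")

# Made-up points: three inside the region of interest, one outside
points = [(0.0, 0.0, 0.0), (-50.0, -50.0, 1.0), (49.9, 49.9, 0.0), (60.0, 0.0, 0.0)]
grid = birds_eye_view(points)
occupied = sum(cell == 255 for row in grid for cell in row)
print(occupied)
print(bev_path_for("/data/pcd/0001.pcd", "/tmp/bev"))
```

The grid can be converted with `np.array(grid, dtype=np.uint8)` before being passed to cv2.imwrite(). Note that filenames built from the base name alone will collide if two point clouds in different directories share a name.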

Learn more about adding samples to a dataset in the FiftyOne Docs.

Join the FiftyOne community!

Join the thousands of engineers and data scientists already using FiftyOne to solve some of the most challenging problems in computer vision today!

- 1,200+ FiftyOne Slack members

- 2,300+ stars on GitHub

- 2,000+ Meetup members

- Used by 208+ repositories

- 51+ contributors
