Compare Images from the Internet to Your Computer Vision Dataset

Welcome to week eight of Ten Weeks of Plugins. During these ten weeks, we will be building a FiftyOne plugin (or multiple!) each week and sharing the lessons learned!

If you’re new to them, FiftyOne Plugins provide a flexible mechanism for anyone to extend the functionality of their FiftyOne App.

What we’ve built so far:

- Week 0: 🌩️ Image Quality Issues & 📈 Concept Interpolation

- Week 1: 🎨 AI Art Gallery & Twilio Automation

- Week 2: ❓Visual Question Answering

- Week 3: 🎥 YouTube Player Panel

- Week 4: 🪞Image Deduplication

- Week 5: 👓Optical Character Recognition (OCR) & 🔑Keyword Search

- Week 6: 🎭Zero-shot Prediction

- Week 7: 🏃Active Learning

Ok, let’s dive into this week’s FiftyOne Plugin – Reverse Image Search!

Reverse Image Search Plugin ⏪🖼️🔎

Back in March 2023, we added native vector search functionality into the FiftyOne library with the release of FiftyOne 0.20. Since then, users of the FiftyOne library have been able to leverage vector search engines — at first Qdrant and Pinecone, and later also Milvus and LanceDB — to seamlessly search through billion-sample datasets.

Concretely, the way this works is that the user selects an image from their dataset, and the subsequent “similarity search” finds the k most similar images in the dataset by querying the vector search engine. In a similar vein, the user can select an object patch in one of their images and query the vector search engine for the k most similar object patches.
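To make the idea of "the k most similar images" concrete, here is a minimal brute-force sketch in plain Python. It is not how FiftyOne or any of the vector search engines are implemented (those typically rely on dedicated, often approximate, nearest-neighbor indexes). The function names, the toy two-dimensional embeddings, and the choice of cosine similarity as the metric are all assumptions made for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(
    query: Sequence[float],
    index: Dict[str, Sequence[float]],
    k: int,
) -> List[Tuple[str, float]]:
    """Score every stored embedding against the query and keep the best k."""
    scored = [(sample_id, cosine_similarity(query, vec)) for sample_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy "index": sample IDs mapped to made-up 2D embeddings
toy_index = {
    "dog_1": [1.0, 0.0],
    "dog_2": [0.8, 0.6],
    "cat_1": [0.0, 1.0],
}

results = top_k_similar([1.0, 0.1], toy_index, k=2)
print([sample_id for sample_id, _ in results])  # ['dog_1', 'dog_2']
```

The only thing that changes for reverse image search is where the query vector comes from: an embedding of an image outside the dataset rather than one already stored in the index.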

This functionality has proven incredibly useful for data curation and exploration. As members of the FiftyOne community began incorporating vector search into their workflows, however, a slightly different use case emerged: reverse image search. Users wanted to be able to query their dataset with an image that is not in the dataset, and find the closest matches.

For the eighth week of Ten Weeks of Plugins, I built a Reverse Image Search Plugin to enable this workflow! This plugin leverages the same vector search functionality that powers image similarity search, but exposes an interface for selecting a query image that is not part of the dataset. The query image can be dragged and dropped from your local filesystem, or specified via URL.

As with the YouTube Player Panel Plugin, ChatGPT was invaluable in helping me to write the JavaScript code!

Plugin Overview & Functionality

The Reverse Image Search Plugin is a joint Python/JavaScript plugin with two operators:

- open_reverse_image_search_panel: opens the Reverse Image Search Panel.

- reverse_search_image: runs the reverse image search on the dataset given the input image.

For this walkthrough, I’ll be using a dataset of object patches (individual dog detections) from the Stanford Dogs dataset.

Creating the Similarity Index

As with FiftyOne’s core similarity search functionality, to run reverse image search on your dataset, you first need to have a similarity index. You can generate a similarity index by running compute_similarity() on your dataset from Python, specifying a model from the FiftyOne Model Zoo and a vector search engine backend to use to construct the index. Here we use a CLIP model to compute embeddings, and Qdrant as our vector database:

!docker run -p "6333:6333" -p "6334:6334" -d qdrant/qdrant

import fiftyone as fo
import fiftyone.brain as fob
import fiftyone.zoo as foz

dataset = foz.load_zoo_dataset("quickstart")

# Index images
fob.compute_similarity(
    dataset,
    model="clip-vit-base32-torch",
    brain_key="clip_sim",
    backend="qdrant",
)

Alternatively, you can compute similarity from within the FiftyOne App.

Opening the Panel

The open_reverse_image_search_panel operator follows the same pattern as in the Concept Interpolation and YouTube Player Panel plugins.

As such, there are three ways to execute the open_reverse_image_search_panel operator and open the panel:

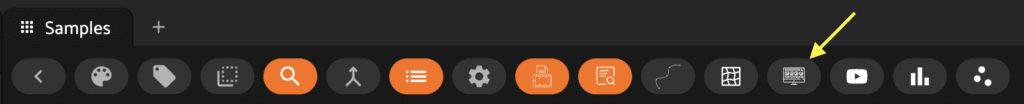

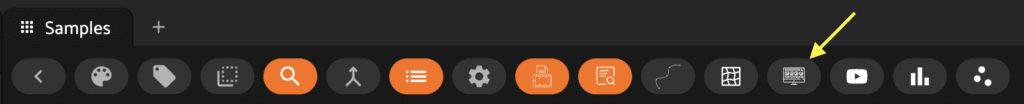

- Press the Reverse Image Search button in the Sample Actions Menu.

- Click on the + icon next to the Samples tab and select Reverse Image Search from the dropdown menu.

- Press “`” to pull up your list of operators, and select open_reverse_image_search_panel.

Searching the Dataset

Once we have a similarity index on our dataset, we can select a query image from our local filesystem. The panel uses react-dropzone to handle the image input.

Alternatively, we can specify an image by passing the URL of a PNG or JPEG file.

Whichever of these input options we choose, we will see a preview of the query image in the Reverse Image Search panel.

We can also specify the number of results to return, and if we have multiple similarity indexes, we can select the index to use by its brain key.

Pressing the SEARCH button executes the query and displays the most similar images in the sample grid.

Installing the Plugin

If you haven’t already done so, install FiftyOne:

pip install fiftyone

Then you can download this plugin from the command line with:

fiftyone plugins download https://github.com/jacobmarks/reverse-image-search-plugin

Refresh the FiftyOne App, and you should see the Reverse Image Search button show up in the actions menu.

Lessons Learned

Handling Remote Images

One of the input options for the query image in the Reverse Image Search plugin is to specify the URL where an image resource is located. To perform the search, we need to compute the embedding vector for this image. However, we don’t want to unnecessarily write the image to a file on the user’s computer.

The solution I arrived at was to use the requests library to obtain the data for the file at that URL, and then use the io library to create a file-like object in memory without writing to disk:

from io import BytesIO

import requests

response = requests.get(url)
image_file_like = BytesIO(response.content)

From there, we can use the Pillow library to open the file-like object as an image that we can pass into our embedding model:

from PIL import Image

query_image = Image.open(image_file_like)
index = dataset.load_brain_results(index_name)
model = index.get_model()
query_embedding = model.embed(query_image)

The final step is to pass this embedding vector as the query to the dataset’s sort_by_similarity() method.
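Because the URL is supplied by the user, it may be worth confirming that the downloaded bytes really are PNG or JPEG data before handing them to Pillow. The sketch below is one way to do that with the standard library alone. The helpers `sniff_image_format` and `to_file_like` are hypothetical names introduced here and are not part of the plugin; the check relies only on the well-known leading signature bytes of the two formats.

```python
from io import BytesIO
from typing import Optional

# Leading "magic" bytes that identify each format
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"


def sniff_image_format(data: bytes) -> Optional[str]:
    """Return "png" or "jpeg" based on the leading bytes, else None."""
    if data.startswith(PNG_SIGNATURE):
        return "png"
    if data.startswith(JPEG_SIGNATURE):
        return "jpeg"
    return None


def to_file_like(data: bytes) -> BytesIO:
    """Wrap downloaded bytes in an in-memory file, rejecting other content."""
    if sniff_image_format(data) is None:
        raise ValueError("expected PNG or JPEG data")
    return BytesIO(data)
```

In the flow above, `to_file_like(response.content)` would stand in for the direct `BytesIO(response.content)` call, so that an HTML error page or other unexpected response fails early with a clear message.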

Handling Local Files

When working with files from the local filesystem, the big surprise was that for security reasons, the filepath of the image we dragged and dropped into the drop zone is not accessible to us. Instead, the raw data from the image file is encoded in base64. This means that we need to first decode the data, then create a file-like object and proceed as we did in the previous case.

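import base64
from io import BytesIO

# file_data looks like "data:<mime type>;base64,<payload>"; keep only the payload
base64_image = file_data.split(';base64,')[1]
# Decode the payload back into the raw image bytes
image_data = base64.b64decode(base64_image)
# Wrap the bytes in an in-memory file-like object
image_file_like = BytesIO(image_data)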

...
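The decoding step can be wrapped in a small helper that also reports the MIME type and fails loudly on unexpected input. This is a sketch only: it assumes the dropped file arrives as a data URL of the form `data:<mime type>;base64,<payload>`, as the split on `;base64,` above suggests, and `data_url_to_file_like` is a hypothetical name rather than a function from the plugin.

```python
import base64
from io import BytesIO
from typing import Tuple


def data_url_to_file_like(data_url: str) -> Tuple[str, BytesIO]:
    """Split a base64 data URL into its MIME type and an in-memory file."""
    header, separator, payload = data_url.partition(";base64,")
    if not separator or not header.startswith("data:"):
        raise ValueError("expected a base64-encoded data URL")
    mime_type = header[len("data:"):]
    return mime_type, BytesIO(base64.b64decode(payload))


# Build a stand-in data URL from placeholder bytes (not a real image)
example = "data:image/png;base64," + base64.b64encode(b"fake image bytes").decode("ascii")
mime_type, file_like = data_url_to_file_like(example)
print(mime_type)  # image/png
```

The returned file-like object can then be opened with Pillow in the same way as in the remote image case.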

Installing JavaScript Dependencies

I’m new to JavaScript and React, so for some of you this is likely obvious. Each FiftyOne JavaScript plugin is its own package, so different plugins can have different dependencies. To specify those dependencies, you must add them to the “dependencies” section of package.json.

In this plugin, we used react-dropzone, which is listed in the “dependencies” section of the plugin’s package.json.

Once you have added the dependency, you can use it within your TypeScript file. In ReverseImageSearchPlugin.tsx, for instance, I have:

import { useDropzone } from "react-dropzone";

Conclusion

Whether you want to build a Google image search clone, or just query popular datasets for dogs similar to your pup, the Reverse Image Search plugin extends FiftyOne’s vector search functionality beyond the data in your dataset. It provides a template for working with local or remote resources, and tailoring FiftyOne to your desired workflows.

Stay tuned for the last few weeks of these ten weeks as we continue to pump out a killer lineup of plugins! You can track our journey in our ten-weeks-of-plugins repo — and I encourage you to fork the repo and join me on this journey!