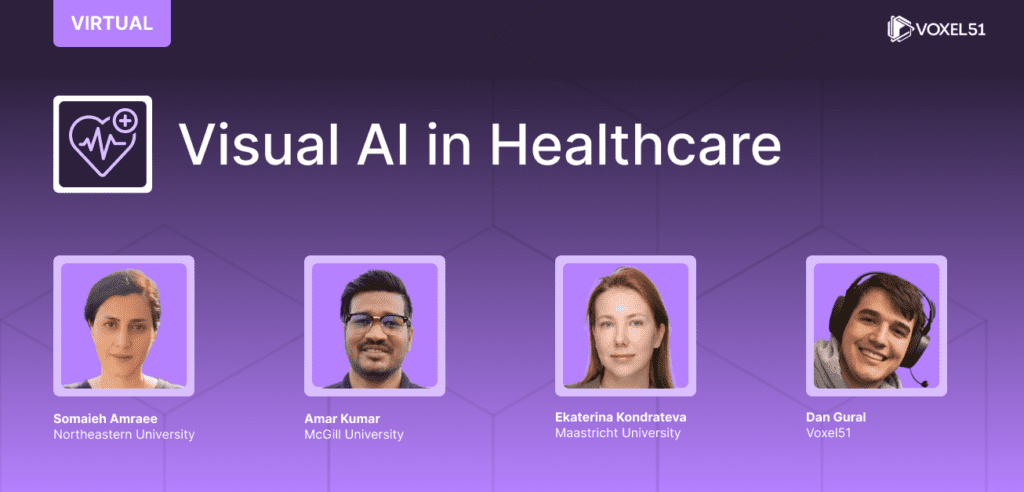

Visual AI in Healthcare

June 25, 2025 | 9 AM Pacific

Join us for the first of several virtual events focused on the latest research, datasets and models at the intersection of visual AI and healthcare.

When

June 25 at 9 AM Pacific

Where

Online. Register for the Zoom!

Vision-Driven Behavior Analysis in Autism: Challenges and Opportunities

Somaieh Amraee

Northeastern University

Understanding and classifying human behaviors is a long-standing goal at the intersection of computer science and behavioral science. Video-based monitoring provides a non-intrusive and scalable framework for analyzing complex behavioral patterns in real-world environments. This talk explores key challenges and emerging opportunities in AI-driven behavior analysis for individuals with autism spectrum disorder (ASD), with an emphasis on the role of computer vision in building clinically meaningful and interpretable tools.

PRISM: High-Resolution & Precise Counterfactual Medical Image Generation using Language-guided Stable Diffusion

Amar Kumar

McGill University

PRISM is an explainability framework that leverages language-guided Stable Diffusion to generate high-resolution (512×512) counterfactual medical images with unprecedented precision, answering the question: “What would this patient image look like if a specific attribute were changed?” PRISM enables fine-grained control over image edits, allowing us to selectively add or remove disease-related image features as well as complex medical support devices (such as pacemakers) while preserving the rest of the image. Beyond generating high-quality images, we demonstrate that PRISM’s class counterfactuals can enhance downstream model performance by isolating disease-specific features from spurious ones, a significant advancement toward robust and trustworthy AI in healthcare.

Building Your Medical Digital Twin — How Accurate Are LLMs Today?

Ekaterina Kondrateva

Maastricht University

We all hear about the dream of a digital twin: AI systems combining your blood tests, MRI scans, smartwatch data, and genetics to track health and plan care.

But how accurate are today’s top tools like GPT-4o, Gemini, MedLLaMA, or OpenBioLLM — and what can you realistically feed them?

In this talk, we’ll explore where these models deliver, where they fall short, and what I learned testing them on my own health records.

NVIDIA’s VISTA-3D and MedSAM-2 Medical Imaging Models

Dan Gural

Voxel51

In this talk, we’ll explore two medical imaging models. First, we’ll dive into NVIDIA’s Versatile Imaging SegmenTation and Annotation (VISTA) model, which combines semantic segmentation with interactivity, offering high accuracy and adaptability across diverse anatomical areas for medical imaging. Then, we’ll explore MedSAM-2, an advanced segmentation model that utilizes Meta’s SAM 2 framework to address both 2D and 3D medical image segmentation tasks.

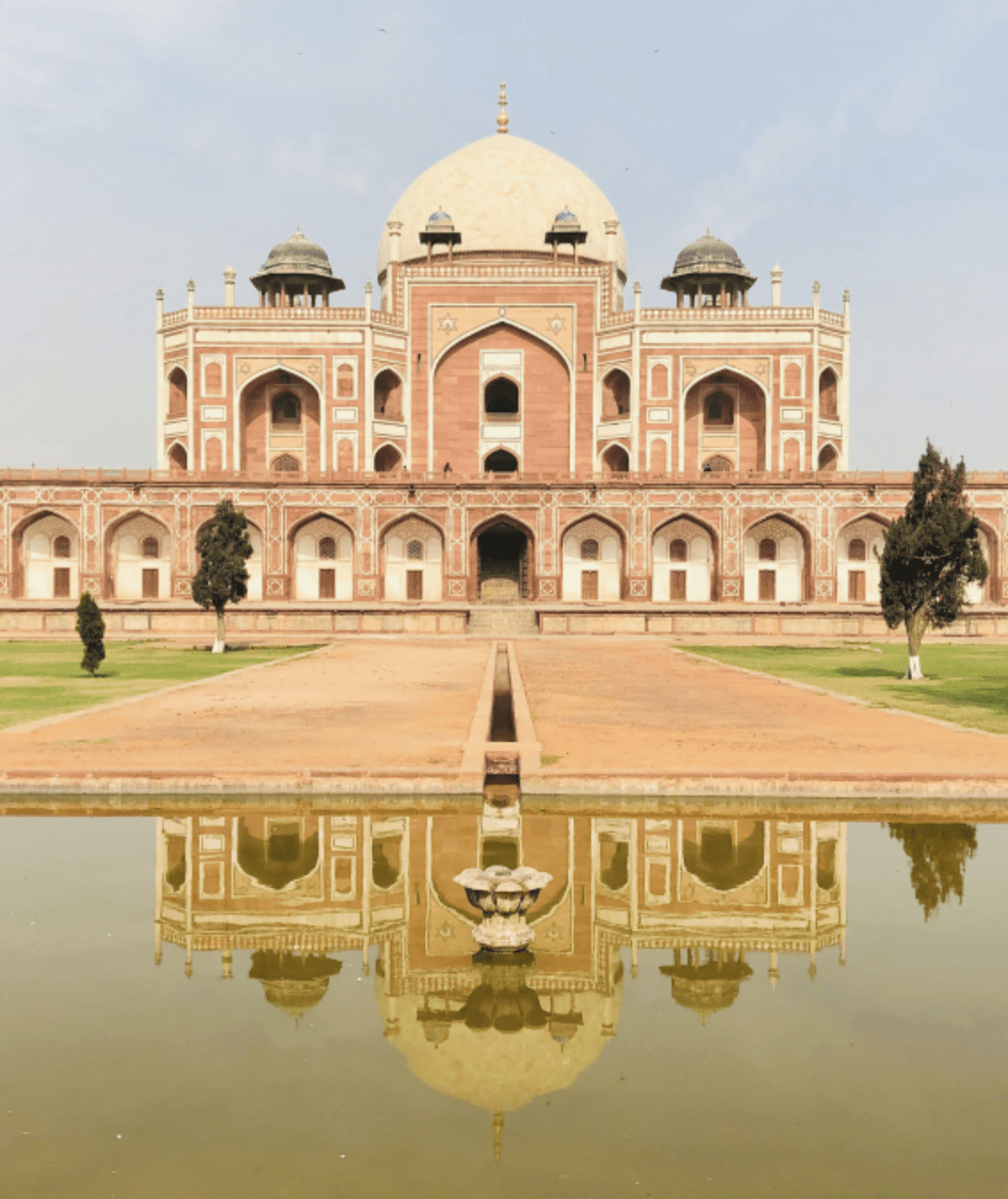

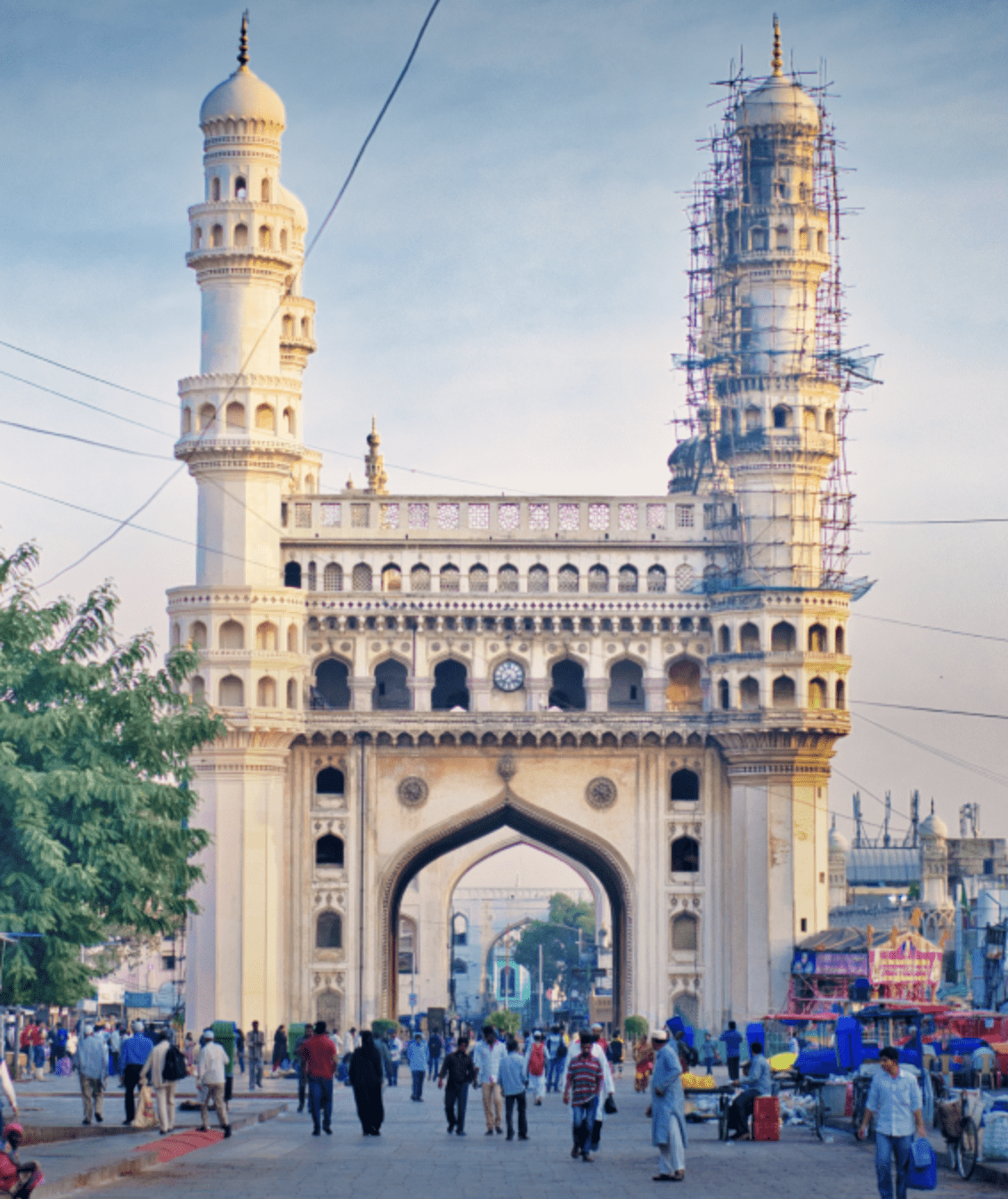

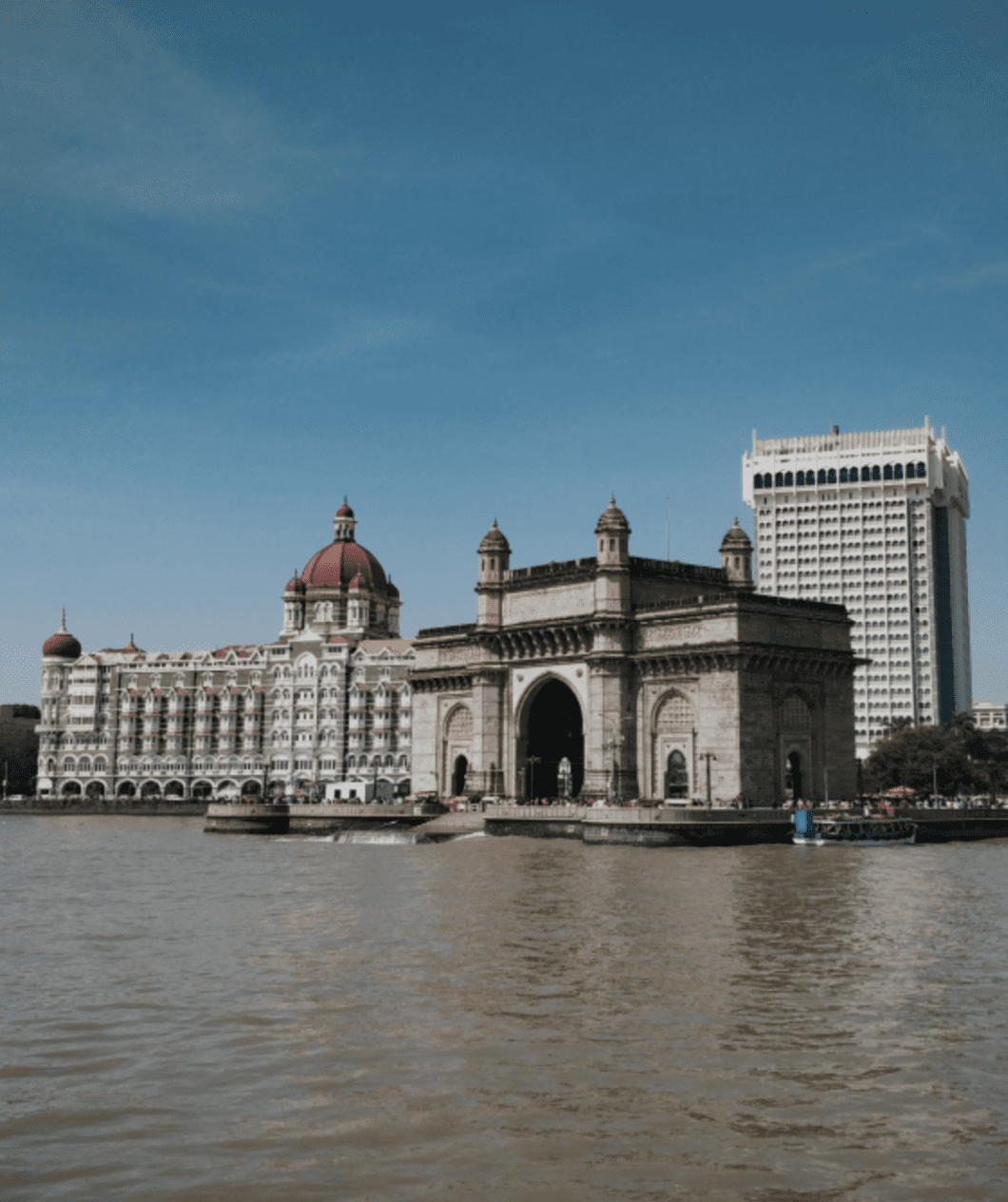

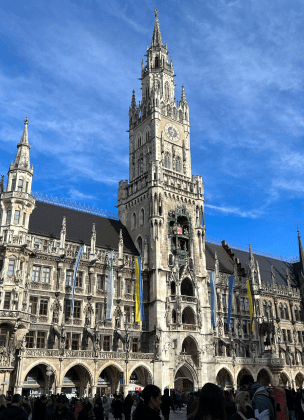

Find a Meetup Near You

Join the AI and ML enthusiasts who have already become members

The goal of the AI, Machine Learning, and Computer Vision Meetup network is to bring together a community of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of AI and complementary technologies. If that’s you, we invite you to join the Meetup closest to your timezone.