Annotation

Imagine teaching a computer to see . You start by showing it thousands of pictures and telling it what each one contains. That act of adding labels or notes is called data annotation. In machine‑learning terms, to annotate visual data means attaching information (like class names or shapes) so a model can learn from it. Once a dataset is fully annotated, it becomes a lesson book for training an AI model. The everyday annotate definition (“to add explanatory notes”) largely aligns with the data annotation definition used in computer vision: label visual data so machines can understand it.

What is Data Annotation in Computer Vision?

In computer vision, data annotation is the practice of labeling images or videos so algorithms can learn object categories, locations, and boundaries. Common annotations include bounding boxes, segmentation masks, keypoints, and image‑level tags. The celebrated COCO dataset contains over 330 thousand images with precisely these kinds of labels. High‑quality annotation is critical to developing state‑of‑the‑art models.

Common Annotation Types & Examples

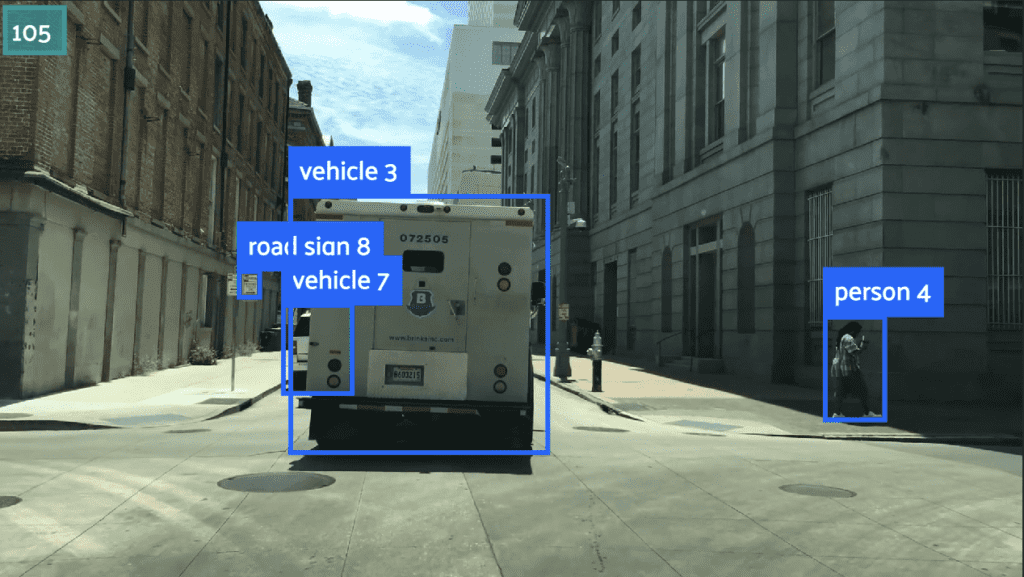

- Image classification labels: tag the whole image (e.g., “indoor” vs “outdoor”).

- Bounding boxes: draw rectangles around objects. Annotation examples with bounding boxes might include every car and pedestrian in a street scene.

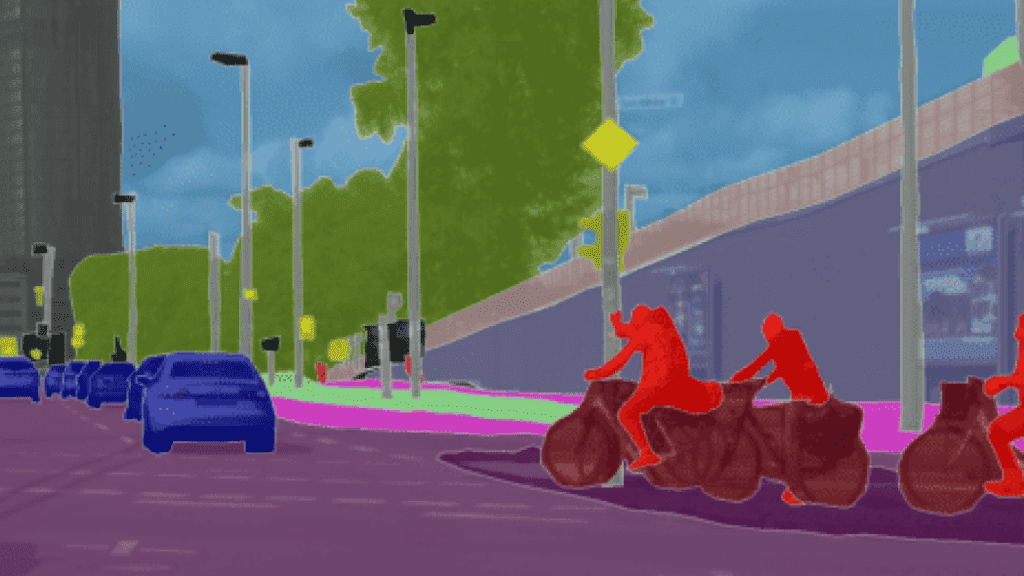

- Segmentation masks: color each pixel of an object. Example: label crop rows in an aerial image.

- Keypoints / landmarks: mark specific points (eyes, joints). Example: facial landmark detection.

- Polylines & splines: race lines such as lane markings for autonomous driving.

- 3D annotations: place 3‑D boxes in a LiDAR point cloud or segment volumetric MRI slices.

How to Annotate Visual Data

Large‑scale annotation usually happens inside specialized tools.. A traditional workflow: upload data, let human labelers (there are plenty of data annotation jobs out there) draw labels, then review for quality. Modern platforms provide hotkeys, interpolation, and model‑assisted suggestions to show how to annotate efficiently. Tools like FiftyOne integrate with CVAT, Labelbox, and others so you can curate the slices worth annotating and pull the finished labels straight back for inspection. If you’d like to speed things up even more, Voxel51’s blog covers active learning for annotation, where a model in the loop suggests the most informative samples to label next.

Why Annotations Matter

Quality annotations are the backbone of supervised learning. Errors in labels mislead the network, while accurate labels enable robust performance. Whether you’re bounding boxes around vehicles, segmenting tumors, or labeling 3‑D point clouds for multimodal perception, investing in precise annotations pays dividends at model‑deployment time. Remember: garbage in, garbage out.

Learn More: FiftyOne Annotation Guide | Voxel51 Active Learning Blog

Want to build and deploy visual AI at scale?

Talk to an expert about FiftyOne for your enterprise.

Open Source

Like what you see on GitHub? Give the Open Source FiftyOne project a star

Community

Get answers and ask questions in a variety of use case-specific channels on Discord