Enhancing YOLOv8 Segmentation: Precision, Efficiency, and Robustness

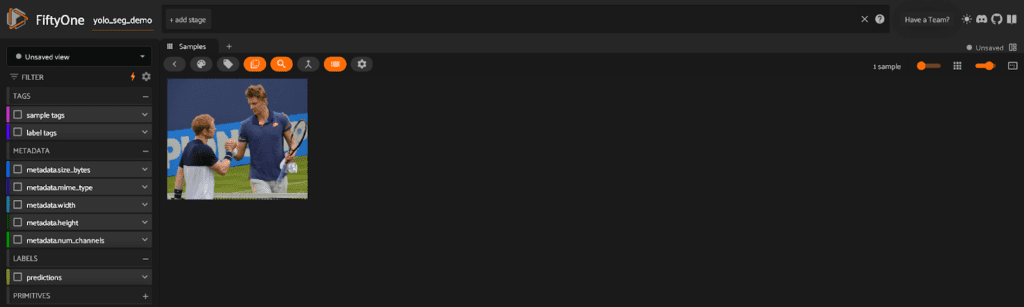

YOLO’s reputation rests on speed: one pass through the network, bounding boxes drawn, and you’re done. However, the moment instance segmentation is required, things can become more complicated. While YOLOv8 supports segmentation masks, these masks can be imperfect: parts of objects may disappear, boundaries can bleed together, and performance may degrade from ultra-fast bounding boxes to questionable outlines. Yet, real-time instance segmentation remains compelling. We created FiftyOne to ease the challenges of working with large datasets and segmentation tasks, particularly when iterating quickly with YOLO models. The goal of this article is to show how you can refine YOLO’s masks while preserving the speed that makes YOLO so appealing.

We’ve also prepared a companion Jupyter notebook that walks through the data-centric tips outlined below.

-

- Inference with YOLOv8 instance segmentation

-

- Visualizing predictions in FiftyOne

-

- Handling class imbalance with weighted sampling

-

- Synthetic occlusion for more robust masks

-

- Grad-CAM to understand how YOLO “sees” each object

Balancing Speed and Segmentation Accuracy

One-stage detectors like YOLO are optimized for bounding boxes, which works well for tasks such as drawing a box around a cat. Instance segmentation, however, demands identifying each pixel of that dog or cat. For overlapping objects, YOLO’s single-shot approach can begin to stretch thin. Two-stage approaches (Mask R-CNN and others) often produce sharper boundaries but run more slowly. If you work in robotics, real-time safety monitoring, or any domain where heavy compute overhead is not an option, YOLO’s efficiency remains compelling. The key is finding ways to refine YOLO’s segmentation rather than resorting to more resource-intensive solutions.

Real-time segmentation is already appearing in autonomous vehicles, advanced medical imaging, and streaming analytics for security cameras. As soon as you ask a single model to generate both bounding boxes and segmentation masks at high speed, you recognize that you cannot simply press “train” and expect perfect results. Although YOLO’s speed is advantageous, data curation, labeling consistency, and a purposeful training strategy have a major role to play.

Common Pitfalls in YOLOv8 Segmentation

Inconsistent Data and Bad Labels

Data quality is paramount, and pixel-level labeling invites a wide margin for error compared to bounding boxes. A bounding box can be off by a few pixels without severely harming training, but instance segmentation requires precision around edges. If your annotation tool mislabels portions of the background as part of an object or neglects certain occlusions, YOLO will internalize those inconsistencies. The result could be masks that truncate arms or merge adjacent objects. Before concluding that the model is at fault, inspect your ground truth carefully.

FiftyOne proves highly useful for diagnosing such issues by overlaying predicted versus ground-truth masks. By comparing them side by side, you can pinpoint where a label may have been erroneous or an object boundary was drawn incorrectly.

This kind of visualization is critical in fields like medical imaging, where a single mislabeled pixel may mark the difference between healthy and diseased tissue.

Class Imbalance: A Persistent Challenge

Consider a dataset containing 10,000 dog images and only 200 bird images. YOLO will excel at detecting dogs but struggle with birds. That might be acceptable if detecting dogs is your main objective, but not if both classes matter equally. Weighted sampling, oversampling, or carefully adding more data are all potential remedies. By examining per-class performance in FiftyOne, you can identify if “bird” IoU is inadequate, indicating you may need additional data or class-specific weighting. Weighted loss functions and synthetic data strategies are frequent go-tos for restoring balance so that minority classes do not become an afterthought.

Key Data Issues

| Data Issue | Impact on Segmentation | Mitigation Strategies |

| Class Imbalance | Leads to biased models that perform well on frequent classes but poorly on underrepresented ones; rare classes may be ignored. | Use oversampling or undersampling, apply weighted loss functions, or add synthetic examples to balance the class distribution. |

| Occlusions & Overlaps | Causes segmentation masks to merge or blur together when objects partially block one another, reducing the accuracy of boundaries. | Implement multi-scale training, use synthetic occlusion augmentations (e.g., cutouts or blending), and apply post-processing steps to refine masks. |

| Labeling Errors | Inaccurate or inconsistent annotations can cause the model to learn incorrect boundaries, leading to truncated or merged object masks. | Rigorously review and clean data annotations, use tools like FiftyOne to catch mislabels, and perform quality assurance on the dataset before training. |

Comprehensive Evaluation Beyond Single Metrics

It’s easy to focus exclusively on a metric like mean Intersection over Union (mIoU) or mean Average Precision (mAP), as these are convenient to quote. However, when you need refined YOLO models for mission-critical or high-stakes applications, you must look more deeply into your metrics.

Uncertainty Analysis

If your model is overly confident in every prediction, it’s either exceptionally robust or inflating its certainty. By examining predictions with low confidence, you can locate where YOLO’s coverage may be insufficient. FiftyOne can highlight these low-confidence regions so you can diagnose whether the issue arises from sparse data, class confusion, or unusual occlusions. By fixing those subsets, retraining, and iterating, you can progressively refine your model.

Edge Cases

Investigate the relatively few images that produce poor IoU. These may share unusual factors, such as very dark lighting, extreme angles, or challenging objects placed near image boundaries. Focusing your data augmentation on these edge cases can yield considerable improvements. If YOLO repeatedly struggles with small objects near the frame’s corner, multi-scale training or curated examples of small-corner objects may be valuable. The more lightweight YOLO variants, like YOLOv8n, facilitate fast experimentation so that you can adapt quickly to new findings.

Optimizing YOLOv8 for Superior Segmentation

Speeding Up Iterations

No one wants to spend a week waiting for a single training run to finish before determining whether a particular augmentation strategy worked. Smaller YOLOv8 “nano” models are a practical option for fast experimentation. When a method shows promise, you can scale up to a larger model for those final increments in accuracy. This strategy saves both time and GPU resources.

Clean Up Labels with FiftyOne

Whenever you notice strange predictions, investigate whether labels might be inaccurate. It’s not unusual to discover entire objects labeled as background or partially trimmed. With FiftyOne’s visual interface, you can directly compare predicted masks to your ground-truth annotations and resolve labeling inconsistencies. Even a well-planned training workflow will replicate errors if many segmentation masks in your dataset are off by a few pixels.

Addressing Class Imbalance

We cannot overemphasize how challenging class imbalance can be. If your dataset features wide disparities between classes, the underrepresented classes risk being overlooked. Weighted losses or oversampling are straightforward techniques in PyTorch (with WeightedRandomSampler, for instance) that ensure each class receives due attention. While it may sound basic, it can greatly reduce the “this class never shows up” problem.

Improving Robustness with Synthetic Occlusions

Real-world scenes are usually messy, with objects partially hiding behind one another. Introducing random black or blurred patches, overlays, or other forms of partial occlusion in your training images helps YOLO learn that an object can remain identifiable even when partially obscured. Although initial results may dip, the model generally adapts, leading to fewer catastrophic merges when real-world occlusions occur.

Check YOLO’s Brain with Grad-CAM

It can be insightful to discover which parts of an image YOLO relies on when generating segmentation masks. Grad-CAM (or Eigen-CAM) can highlight the critical regions that determine YOLO’s outputs. Sometimes, the model focuses exactly where you’d expect; other times, it may attend to irrelevant background details. These insights can help you decide whether to refine your data or detect any unintended correlations YOLO is learning.

Putting This into Action

At Voxel51, we developed FiftyOne to streamline these processes:

-

- Overlay predicted masks and ground-truth masks

-

- Filter by class or confidence level

-

- Tag anomalous edge cases or labeling errors

-

- Evaluate performance through detailed per-class and per-sample metrics

YOLOv8 Under Real-World Conditions

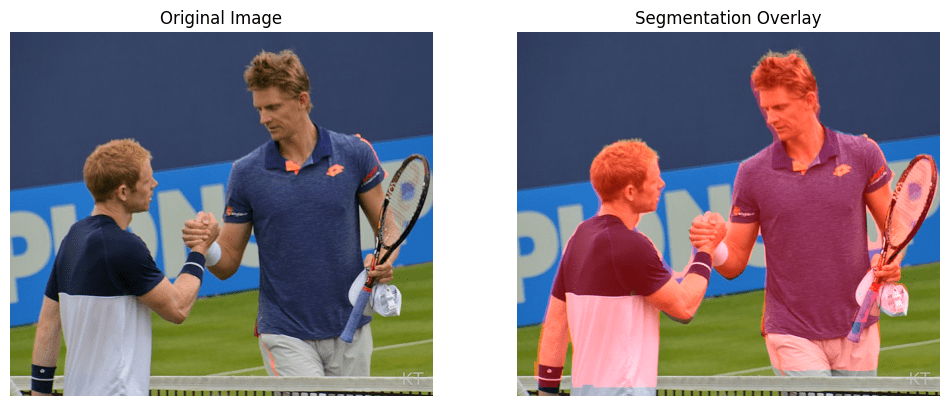

Segmentation models don’t always encounter ideal conditions. Real-world scenarios with dim lighting, blurry cameras, and noisy environments can quickly challenge even highly accurate models. The four images below demonstrate YOLOv8’s segmentation performance under common image degradations: original, dimmed, noisy, and blurred.

Interestingly, notice how YOLOv8 predictions shift with each distortion. For example, under dimmed conditions, detection confidence dips across objects, reflecting the model’s uncertainty without strong visual cues. Noise further erodes accuracy, reducing confidence sharply, while blur slightly improves predictions for some of the objects compared to dimming, even if it still mislabels clasped hands as a remote.

This visual exploration underscores the need to expose YOLOv8 models to realistic, degraded conditions during training. Incorporating augmentation strategies like slight blurring, brightness variations, and mild noise can greatly boost YOLO’s robustness in real-world applications, maintaining the fast performance users expect.

Streamlining Segmentation Workflows with FiftyOne

FiftyOne removes the guesswork from your YOLO segmentation model runs, allowing you to perform instance segmentation without manually sorting through large volumes of predictions. Quickly identify issues with your custom dataset, refine your labels, and streamline your workflow when you train YOLOv8 instance segmentation models, even lightweight versions like the nano model. Continual refinement and iterative retraining help you identify individual objects and generate accurate segmentation masks, transforming a rough dataset into a structured resource. Even if your use case is robotics, medical imaging, or everyday object detection, FiftyOne enables a detailed understanding of your data and improves real-time performance. By addressing common challenges in instance segmentation tasks, you can confidently deploy your fully trained model to reliably segment objects in real-world conditions.

Explore the Jupyter Notebook

Check out the Jupyter notebook that walks through the data-centric tips discussed above. By following the notebook, you can apply these techniques to your dataset, experiment with different augmentations, and inspect how YOLOv8 behaves under challenging conditions.

Image Citations

-

- Carine06. Ed Corrie & Kevin Anderson Shaking Hands After a Match. Photograph. Wikimedia Commons. CC BY-SA 2.0. https://commons.wikimedia.org/wiki/File:Handshake_(27106664813).jpg.

Sedlecký, David. Václav Lebeda (Voxel), Czech Musician. Photograph. June 9, 2015. Wikimedia Commons. CC BY-SA 4.0. https://commons.wikimedia.org/wiki/File:V_Lebeda_Voxel_2015.JPG.