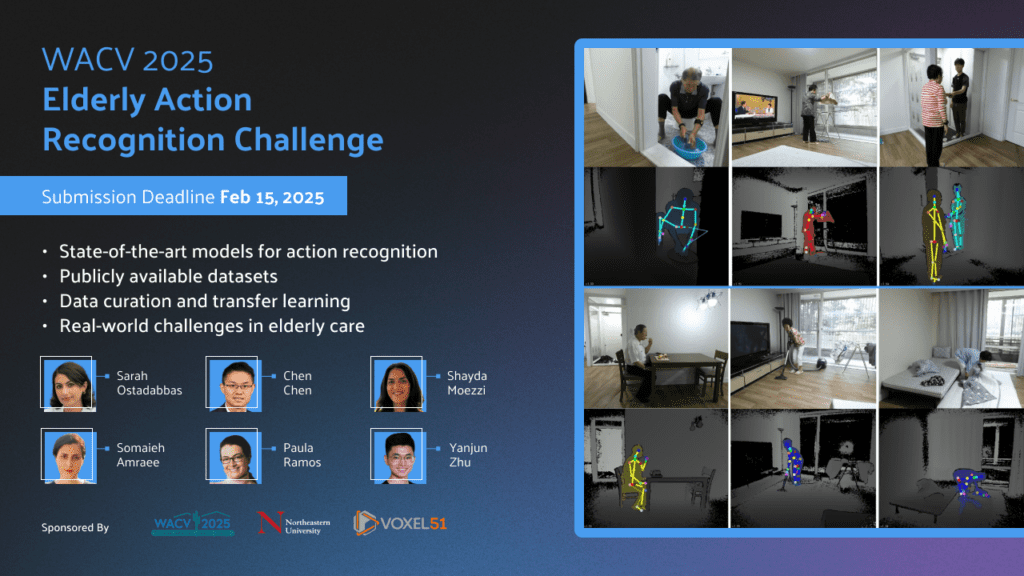

Elderly Action Recognition Challenge – WACV 2025

Submission Deadline: February 15, 2025

Join us for the Elderly Action Recognition (EAR) Challenge, part of the Computer Vision for Smalls (CV4Smalls) Workshop at the WACV 2025 conference!

This challenge focuses on advancing research in Activities of Daily Living (ADL) recognition, particularly within the elderly population, a domain with profound societal implications. Participants will apply transfer learning techniques with any architecture or model they choose, for example by starting from a model trained on a general human action recognition benchmark and fine-tuning it on a subset of data tailored to elderly-specific activities.

We warmly invite participants from both academia and industry to collaborate and innovate. Voxel51 is proudly sponsoring this challenge and aims to encourage solutions that demonstrate robustness across varying conditions (e.g., subjects, environments, scenes) and adaptability to real-world variability.

Challenge Objectives

Participants will:

- Develop state-of-the-art models for elderly action recognition using publicly available datasets.

- Showcase innovative techniques for data curation and transfer learning.

- Contribute to a growing body of research that addresses real-world challenges in elderly care.

Key Dates

- Challenge Launch: January 10, 2025

- Submission Deadline: February 15, 2025

- Winners Announcement: February 20, 2025, at the virtual AI, Machine Learning, and Computer Vision Meetup.

Model Development

Participants are encouraged to explore and leverage state-of-the-art human action recognition models without limitations. Creativity and originality in model architecture and training methodology are strongly encouraged.

Dataset

To mitigate overfitting and promote generalization, participants can build training datasets from publicly available video resources such as ETRI-Activity3D [1], MUVIM [2], and ToyotaSmartHome [3]. Note that some datasets require submitting an access request before they can be downloaded.

Participants are not restricted to these datasets and are welcome to curate extensive datasets, combining multiple sources. A detailed report outlining the datasets used and their preparation and curation processes is mandatory.

Evaluation Dataset

The evaluation dataset will comprise multiple curated subsets of elderly action recognition data. Participants can access the unlabeled evaluation dataset and need to download it to test their models.

Data Curation and Categorization

Participants must group activities into the following categories for efficient organization and analysis. Model output should show both the category and the activity.

- Locomotion and Posture Transitions – Walking, sitting down/standing up, getting up/lying down, exercising, looking for something

- Object Manipulation – Spreading bedding/folding bedding, wiping table, cleaning dishes, cooking, vacuuming

- Hygiene and Personal Care – Washing hands, brushing teeth, taking medicine

- Eating and Drinking

- Communication and Gestures – Talking, phone calls, waving a hand, shaking hands, hugging

- Leisure and Stationary Actions – Reading, watching TV

Keep in mind that the submission file should use the following category names, all lowercase: `locomotion`, `manipulation`, `hygiene`, `eating`, `communication`, and `leisure`.
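As a rough illustration of the grouping above, a model's activity predictions can be mapped to the six lowercase category names with a lookup table. This is only a sketch: the activity spellings on the left, the `eating` and `drinking` entries, and the helper name `to_category` are assumptions, not an official label set.

```python
# Illustrative activity -> category lookup. The activity strings are
# hypothetical spellings of the activities listed above; adapt them to the
# label names used by your own training datasets.
CATEGORIES = ("locomotion", "manipulation", "hygiene",
              "eating", "communication", "leisure")

ACTIVITY_TO_CATEGORY = {
    "walking": "locomotion",
    "sitting down": "locomotion",
    "standing up": "locomotion",
    "getting up": "locomotion",
    "lying down": "locomotion",
    "exercising": "locomotion",
    "looking for something": "locomotion",
    "spreading bedding": "manipulation",
    "folding bedding": "manipulation",
    "wiping table": "manipulation",
    "cleaning dishes": "manipulation",
    "cooking": "manipulation",
    "vacuuming": "manipulation",
    "washing hands": "hygiene",
    "brushing teeth": "hygiene",
    "taking medicine": "hygiene",
    "eating": "eating",      # assumed: no activities are listed for this category
    "drinking": "eating",    # assumed: no activities are listed for this category
    "talking": "communication",
    "phone call": "communication",
    "waving a hand": "communication",
    "shaking hands": "communication",
    "hugging": "communication",
    "reading": "leisure",
    "watching tv": "leisure",
}


def to_category(activity: str) -> str:
    """Return the lowercase category name for an activity label."""
    key = activity.strip().lower()
    if key not in ACTIVITY_TO_CATEGORY:
        # Fail loudly so unmapped labels are noticed during data curation.
        raise KeyError(f"unmapped activity: {activity!r}")
    return ACTIVITY_TO_CATEGORY[key]
```

Raising on unknown labels, rather than falling back to a default category, makes gaps in the mapping visible while datasets from different sources are being merged.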

Supporting Materials

Voxel51’s FiftyOne tool will assist participants in effectively curating, categorizing, and visualizing datasets. Tutorials and examples will be provided at the challenge launch.

A few supporting blogs and notebooks:

- Journey into Visual AI: Exploring FiftyOne Together — Part III Preparing a Computer Vision Challenge

- Journey into Visual AI: Exploring FiftyOne Together — Part IV Model Evaluation

Evaluation

Given the path to an MP4 file, the evaluation script should take the video as input and output its category. The evaluation framework will use the following metrics to ensure a fair and comprehensive assessment:

- Primary Metric: Average Accuracy across the evaluation datasets.

- We will sample the dataset for public and private tests. Participants can only see public scores while the competition is in progress, and the private scores will be used for the final rankings.
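For local validation, the primary metric can be approximated as follows. This sketch assumes that "Average Accuracy across the evaluation datasets" means the unweighted mean of per-subset accuracies; the official evaluation script is not public, so the exact definition, the function name `average_accuracy`, and the record layout are assumptions.

```python
from collections import defaultdict


def average_accuracy(records):
    """Mean of per-subset accuracies.

    records: iterable of (subset_name, true_category, predicted_category).
    Returns (average, per_subset_accuracy_dict).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for subset, truth, pred in records:
        total[subset] += 1
        correct[subset] += int(truth == pred)
    if not total:
        raise ValueError("no records to score")
    per_subset = {name: correct[name] / total[name] for name in total}
    # Each subset counts equally, regardless of how many videos it holds.
    return sum(per_subset.values()) / len(per_subset), per_subset


records = [
    ("subset_a", "eating", "eating"),
    ("subset_a", "leisure", "hygiene"),
    ("subset_b", "locomotion", "locomotion"),
    ("subset_b", "hygiene", "hygiene"),
    ("subset_b", "leisure", "leisure"),
    ("subset_b", "eating", "eating"),
]
score, per_subset = average_accuracy(records)
print(score)  # 0.75
```

Under this reading, a small subset weighs as much as a large one: pooling all six videos above would give 5/6, while the per-subset average is 0.75.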

Evaluation Script

An evaluation script will calculate all metrics and provide a leaderboard ranking. The script will not be accessible during training. In the event of tied scores, secondary metrics will determine leaderboard positions.

Winner’s Rewards

First place:

- A $500 gift card

- Opportunity to publish a blog detailing your results and approach on voxel51.com

First, second, and third place:

- Opportunity to present at a virtual Meetup and share your approach and insights with the computer vision community

- Video Presentation at CV4Smalls Workshop: Record a video showcasing your results, which will be featured during the CV4Smalls Workshop (No registration or travel required).

- Future Collaboration: Participate as a judge in the next Visual AI Hackathon hosted by Northeastern University, sharing your expertise with aspiring innovators.

Next Steps After the Challenge

- Winners will be contacted to coordinate the details of their blog publication and presentation.

- Meetup and CV4Smalls presentation materials will be scheduled in collaboration with our team.

- Judging the Hackathon will be arranged with Northeastern University closer to the event date.

Participation Rules

- Participants should register for the challenge on the Challenge webpage.

- Submissions must include:

  - An evaluation submission CSV file with the prediction results over the Evaluation Dataset

  - A Hugging Face link to your PyTorch model weights

  - A PDF report documenting the data curation process and datasets used. Use the following template.

- Notebooks or main code will be requested from the top five participants on the leaderboard.

- Use of external datasets or pre-trained models is permitted but must be disclosed in the report. Participants can use any architecture or model they want.

- Submissions must include all required files (evaluation CSV file, model link, and report); incomplete submissions will be disqualified.

- The top five positions on the leaderboard may be subject to audit by the challenge organizers. If you are among the top five, you will receive further communication regarding the process.

- Using the Discord channel is not mandatory, but it benefits all participants by letting them help each other and identify common problems.

- More information will be provided once the submission process is ready.
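Until the official submission format is published, a prediction file could be prepared along these lines. This is a sketch under stated assumptions: the column names `video` and `category`, the helper name `write_submission`, and the output file name are all hypothetical and may differ from what the organizers require.

```python
import csv
from pathlib import Path

# The six lowercase category names required in the submission file.
ALLOWED = {"locomotion", "manipulation", "hygiene",
           "eating", "communication", "leisure"}


def write_submission(predictions, out_path):
    """Write predictions to a CSV file.

    predictions: dict mapping video file name -> predicted category.
    The header row ("video", "category") is an assumed layout.
    """
    bad = {v: c for v, c in predictions.items() if c not in ALLOWED}
    if bad:
        raise ValueError(f"invalid categories: {bad}")
    with open(out_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["video", "category"])
        for video in sorted(predictions):  # stable, reproducible row order
            writer.writerow([video, predictions[video]])
    return Path(out_path)
```

Validating category names before writing catches capitalization or spelling mismatches locally instead of at scoring time.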

Submission Platform

References

[1] Jang, J., Kim, D., Park, C., Jang, M., Lee, J., & Kim, J. (2020). ETRI-Activity3D: A Large-Scale RGB-D Dataset for Robots to Recognize Daily Activities of the Elderly. arXiv:2003.01920

[2] Denkovski, S., Khan, S. S., Malamis, B., Moon, S. Y., Ye, B., & Mihailidis, A. (2022). Multi Visual Modality Fall Detection Dataset. arXiv:2206.12740

[3] Dai, R., Das, S., Sharma, S., Minciullo, L., Garattoni, L., Bremond, F., & Francesca, G. (2022). Toyota Smarthome Untrimmed: Real-World Untrimmed Videos for Activity Detection. arXiv:2010.14982

Workshop Keynote

In an era dominated by large-scale datasets, designing computer vision solutions for unique and often underrepresented populations demands rethinking technology and methodology. Infants, toddlers, and the elderly represent groups where data is scarce due to ethical, practical, and privacy concerns but where the potential for positive impact is immense. This keynote will explore the core challenges of building and deploying computer vision systems with limited yet precious data. We will see how approaches involving synthetic data, GenAI, stereo vision, and even models built using big data can immensely help in solutions where data is sparse, and privacy is critical.

Satya Mallick

CEO – OpenCV.org

Judges/Mentors

Sarah Ostadabbas

Northeastern University

Paula Ramos, PhD

Voxel51

Chen Chen

University of Central Florida

Yanjun Zhu

Northeastern University

Shayda Moezzi

Northeastern University

Somaieh Amraee

Northeastern University

Sai Anish Sreeramagiri

Northeastern University

Shahab Nazari

Scania Group