Voxel51 Launches Computer Vision Industry’s First Open-Source Rapid Dataset Experimentation Tool

August 12, 2020

“FIFTYONE” HELPS DATA SCIENTISTS TACKLE DATA QUALITY LIMITATIONS THAT IMPACT PREDICTIVE PERFORMANCE OF IMAGE-BASED MACHINE LEARNING MODELS

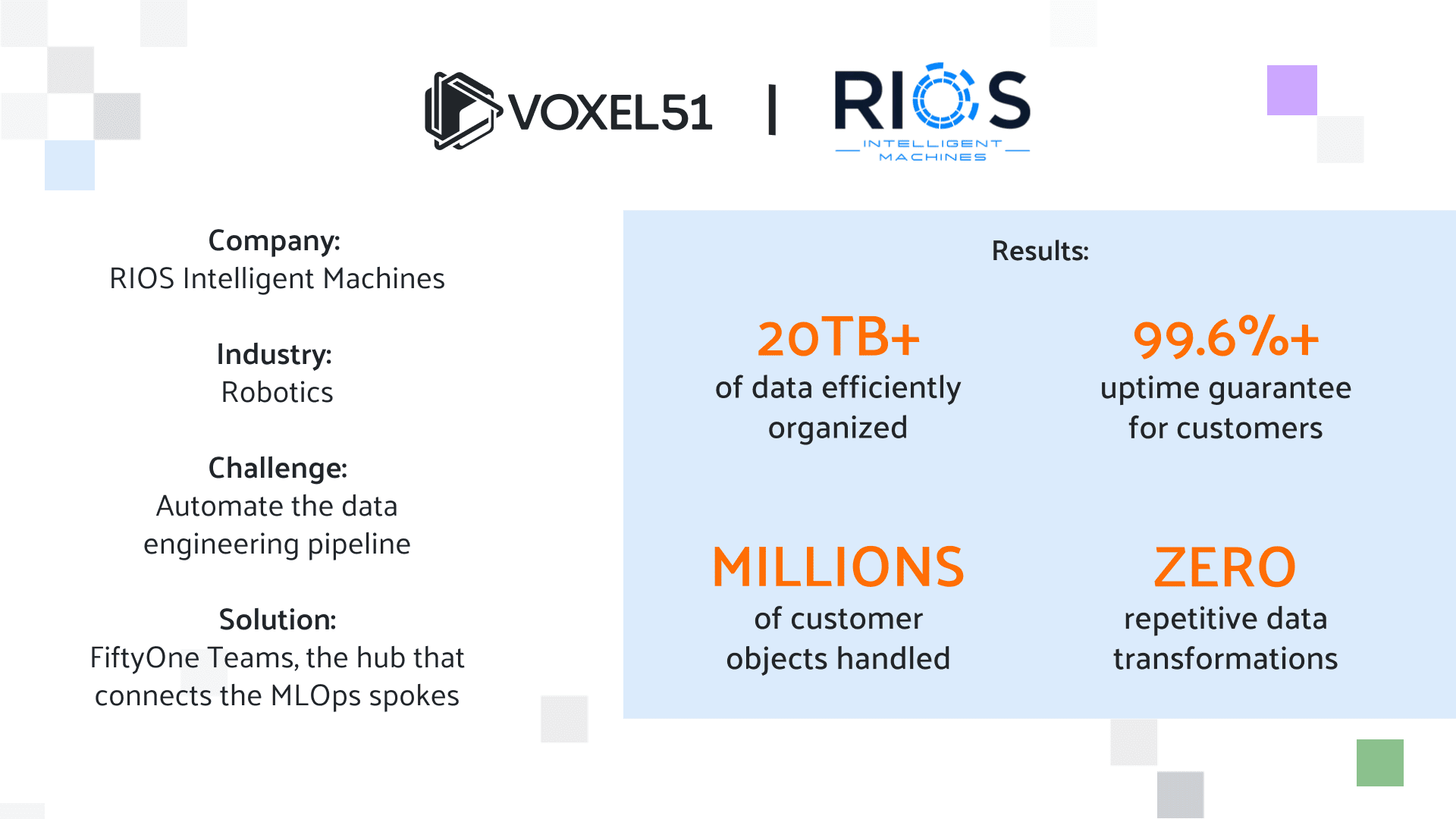

Ann Arbor, MI – Voxel51 today announced the launch of FiftyOne, the computer vision industry’s first open-source rapid dataset experimentation tool, which addresses the most fundamental pain point for machine learning scientists: datasets that limit model performance.

“Nothing hinders the success of machine learning systems more than poor-quality data. Yet the process of continuous data quality management is incredibly challenging and time-consuming,” said Jason Corso, Voxel51 co-founder and CEO. “We created this tool, which draws on over 15 years of academic research and experience in building computer vision and machine learning systems, to offer engineers a better toolbox and a more efficient way to improve the quality, accuracy and diversity of image datasets, mitigating the consequences of bad data and improving the predictive performance of production models.”

Available now at voxel51.com, FiftyOne lets computer vision and machine learning scientists apply their insight and intuition directly to their training data and shortens the time-consuming, labor-intensive cycle of constant iteration and evaluation. FiftyOne’s interactive dashboard and Python library allow users to easily visualize, explore and analyze their data and to curate better datasets that scale to the size and accuracy that complex, real-world applications require.

“There’s no other solution that’s as easy to use to rapidly experiment with datasets and to identify limitations that stifle model performance,” said Brian Moore, Voxel51 co-founder and CTO. “We’re transforming the art of dataset curation into a science, and we are eager to share the results of our work with developers and machine learning scientists across industry and academia.”

FiftyOne makes it easy to search, filter and sort images by their predicted and ground-truth classifications as well as by their hardness, mistakenness and uniqueness. FiftyOne supports the most commonly used image dataset formats, such as Berkeley DeepDrive, COCO, CVAT, KITTI, TFRecords and VOC. A collection of open-source datasets and support for the TensorFlow and PyTorch ML frameworks are also built into FiftyOne, along with helper functions and tutorials. Users can easily view subsets of dataset samples; remove duplicate and near-duplicate images; clean up labeling mistakes; recommend samples for annotation; rank samples by representativeness; and discover the most unique samples, which is essential to improving model performance. FiftyOne is flexible and scalable, so it integrates naturally into existing workflows.

About Voxel51

Headquartered in Ann Arbor, Michigan, and founded in 2016 by University of Michigan professor Dr. Jason Corso and Dr. Brian Moore, Voxel51 is an AI software company that enables computer vision data scientists to rapidly curate and experiment with their datasets in order to build higher-performing machine learning systems. For more information, visit voxel51.com.