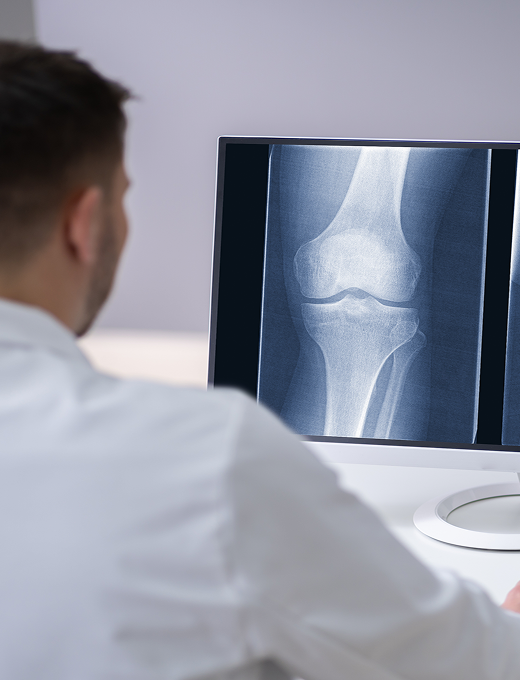

Maximize AI performance with better data

FiftyOne is the most powerful Visual AI and computer vision data platform.

FiftyOne Platform

Unlock the value of your data

In a world where data fuels AI innovation, FiftyOne puts data at the center of your workflow—helping you exploit its full potential to gain a competitive edge.

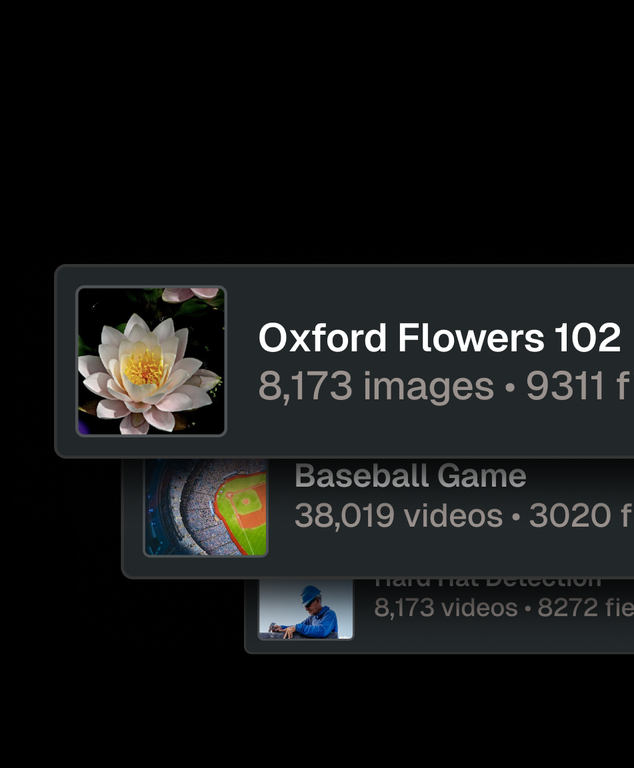

Visualize your data like never before

Identify your best-performing samples, weed out low-quality data, and organize dataset views intuitively, so you can focus on building better models, faster.

- Unify multimodal data (3D, video, images, metadata)

- Slice, search, and filter massive datasets

- Analyze data patterns with embeddings

- Improve data quality with automatic filters

- Query your data lake and retrieve relevant samples

Benefits & ROI

Leading enterprises build using FiftyOne

COMPLIANCE & GOVERNANCE

Enterprise-grade security, scale, and extensibility

FiftyOne is built to meet the demands of the most sophisticated ML stacks.

Deploy anywhere

Fully customizable and extensible

Support for billions of samples

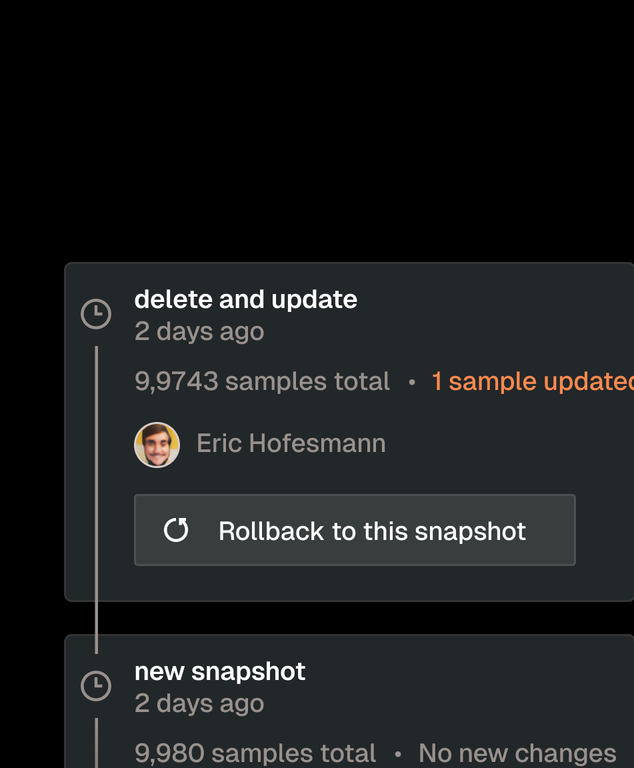

Dataset versioning

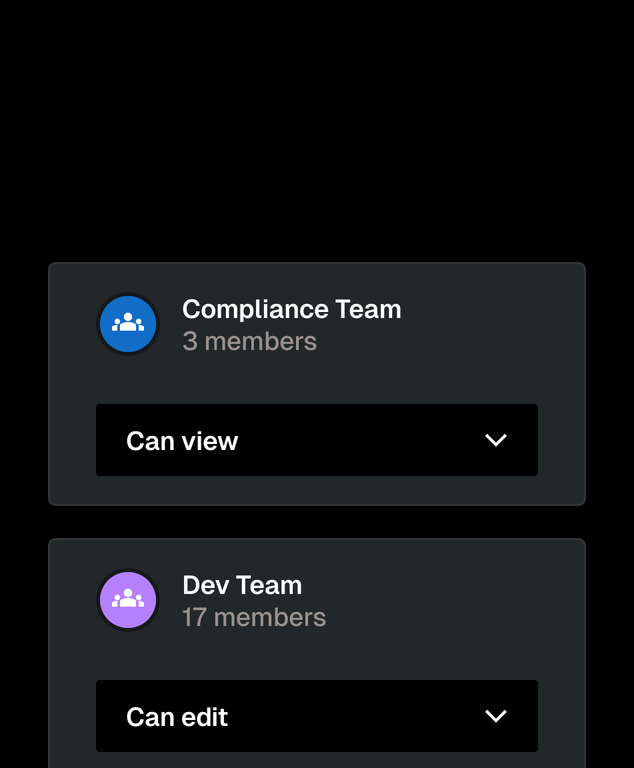

Role-based access controls

ISO 27001 certification

Get started today

Leading enterprises build using FiftyOne

30% increase in model accuracy

5+ months of development time saved

30% boost in team productivity

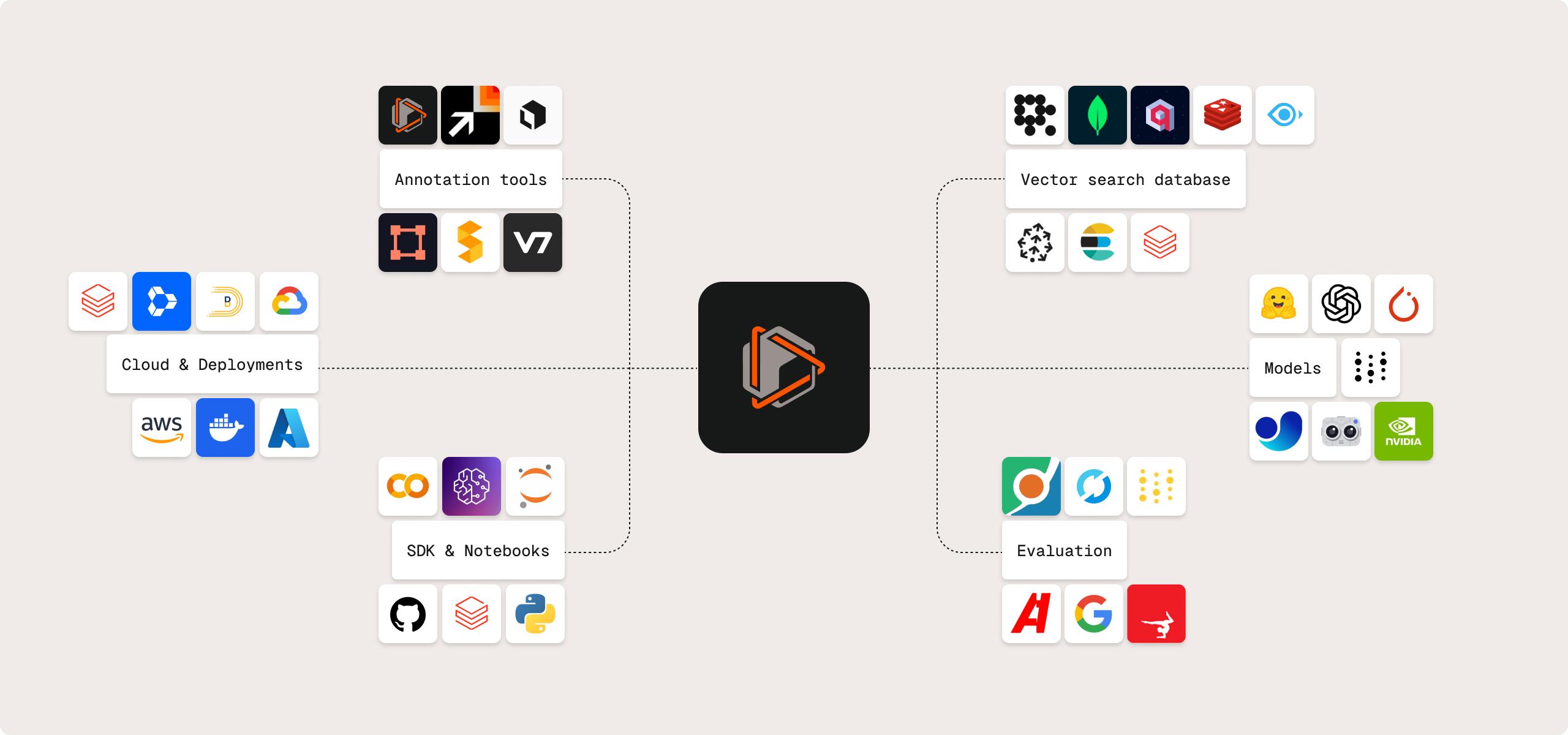

INTEGRATIONS

Your stack, your choice: no vendor lock-in

FiftyOne seamlessly integrates with your existing tech stack, giving you the freedom to evolve your toolchain as needs change.

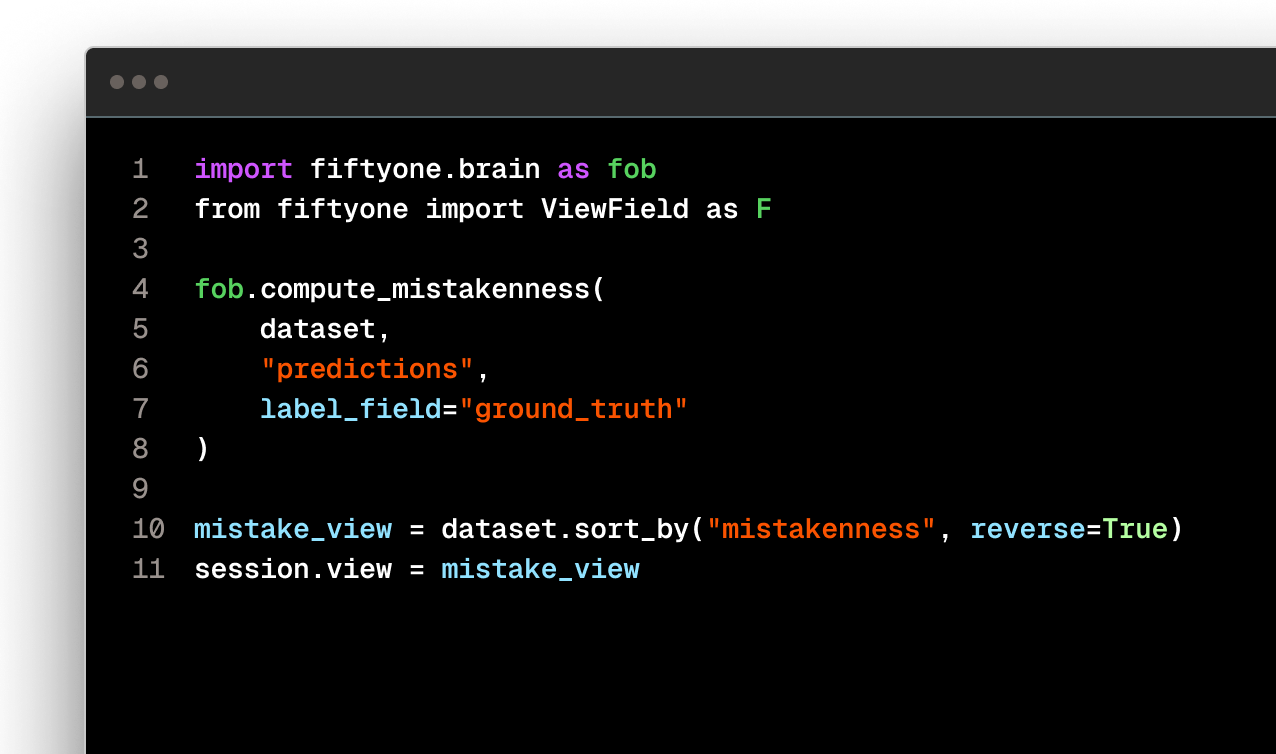

Developer resources

Loved by ML engineers

More than 3 million installs

Built on open source standards

22K+ computer vision community members

Enough data wrangling.

Request a demo.

© 2025 Voxel51 All Rights Reserved