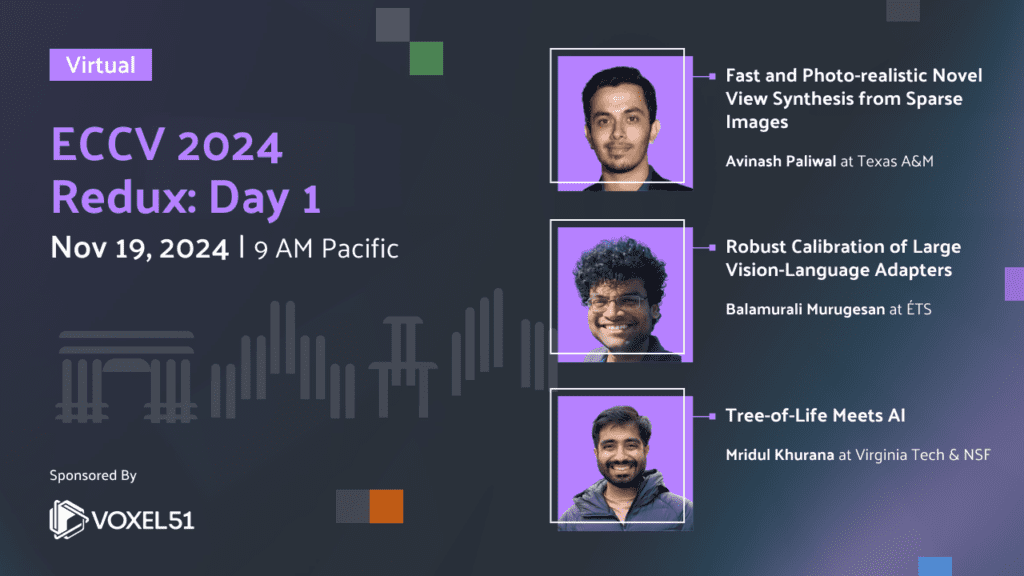

ECCV 2024 Redux: Day 1

Nov 19, 2024 at 9:00 AM Pacific

Register for the Zoom


Fast and Photo-realistic Novel View Synthesis from Sparse Images

Avinash Paliwal

Texas A&M

Novel view synthesis generates new perspectives of a scene from a set of 2D images, enabling 3D applications such as VR/AR, robotics, and autonomous driving. Current state-of-the-art methods produce high-fidelity results but require many input images, while sparse-view approaches often suffer from artifacts or slow inference. In this talk, I will present my research on fast, photorealistic novel view synthesis techniques that can handle extremely sparse input views.

ECCV 2024 Paper: CoherentGS: Sparse Novel View Synthesis with Coherent 3D Gaussians

About the Speaker

Avinash Paliwal is a PhD candidate in the Aggie Graphics Group at Texas A&M University. His research focuses on 3D computer vision and computational photography.

Robust Calibration of Large Vision-Language Adapters

Balamurali Murugesan

ÉTS

We empirically demonstrate that popular CLIP adaptation approaches, such as Adapters, Prompt Learning, and Test-Time Adaptation, substantially degrade the calibration of the zero-shot baseline under distribution shift. We identify increased logit ranges as the underlying cause of miscalibration in CLIP adaptation methods, in contrast with previous work on calibrating fully supervised models. Motivated by these observations, we present a simple, model-agnostic solution that mitigates miscalibration by scaling each sample's logit range to match that of its zero-shot prediction logits.
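The abstract describes the fix only in words. Below is a minimal sketch of one plausible reading, assuming a per-sample min-max rescaling so that the adapted logits span the same interval as the zero-shot logits. The function names are hypothetical, and this is not necessarily the paper's exact formulation.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over one sample's logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def scale_to_zero_shot_range(
    adapted: List[float], zero_shot: List[float], eps: float = 1e-12
) -> List[float]:
    """Hypothetical helper: min-max rescale one sample's adapted logits
    so that their range (max - min) matches the zero-shot logits' range.

    Assumption: the rescaled logits are mapped onto [min(zero_shot), max(zero_shot)].
    """
    a_min, a_max = min(adapted), max(adapted)
    z_min, z_max = min(zero_shot), max(zero_shot)
    a_range = max(a_max - a_min, eps)  # guard against constant logits
    return [(x - a_min) / a_range * (z_max - z_min) + z_min for x in adapted]


if __name__ == "__main__":
    adapted = [10.0, 2.0, -6.0]   # inflated logit range (16) after adaptation
    zero_shot = [2.0, 1.0, 0.0]   # zero-shot logit range (2)
    scaled = scale_to_zero_shot_range(adapted, zero_shot)
    print(scaled)                 # [2.0, 1.0, 0.0]
    print(max(softmax(adapted)), max(softmax(scaled)))
```

Because the rescaling is an increasing affine map, the predicted class does not change; only the confidence shrinks when adaptation has inflated the logit range.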

ECCV 2024 Paper: Robust Calibration of Large Vision-Language Adapters

About the Speaker

Balamurali Murugesan is pursuing a Ph.D. focused on developing reliable deep learning models. Earlier, he completed his master's thesis on accelerating MRI reconstruction. He has published more than 25 research articles in renowned venues.

Tree-of-Life Meets AI: Knowledge-guided Generative Models for Understanding Species Evolution

Mridul Khurana

Virginia Tech & NSF

A central challenge in biology is understanding how organisms evolve and adapt to their environment, acquiring variations in observable traits across the tree of life. However, measuring these traits is often subjective and labor-intensive, making trait discovery a highly label-scarce problem. With the advent of large-scale biological image repositories and advances in generative modeling, there is now an opportunity to accelerate the discovery of evolutionary traits. This talk focuses on using generative models to visualize evolutionary changes directly from images without relying on trait labels.

ECCV 2024 Paper: Hierarchical Conditioning of Diffusion Models Using Tree-of-Life for Studying Species Evolution

About the Speaker

Mridul Khurana is a PhD student at Virginia Tech and a researcher with the NSF Imageomics Institute. His research focuses on AI4Science, leveraging multimodal generative modeling to drive discoveries across scientific domains.

Find a Meetup Near You

Join 27,000+ AI and ML enthusiasts who have already become members

The goal of the AI, Machine Learning, and Data Science Meetup network is to bring together a community of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of AI and complementary technologies. If that’s you, we invite you to join the Meetup closest to your timezone.