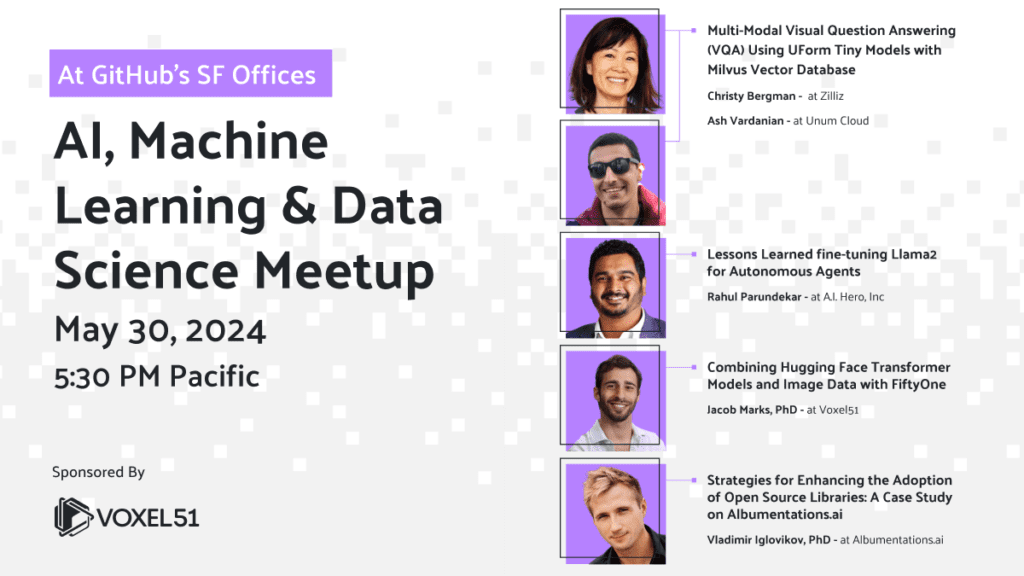

May 2024 AI, Machine Learning and Data Science Meetup

May 30, 2024 | 5:30 to 8:00 PM PT

Register for the event at GitHub's offices in San Francisco. RSVPs are limited!


Date, Time and Location

Date and Time

May 30, 5:30 PM to 8:00 PM Pacific

Location

The Meetup will take place at GitHub’s offices in San Francisco. Note that pre-registration is mandatory.

88 Colin P Kelly Jr St, San Francisco, CA 94107

Talks and Speakers

Lessons Learned Fine-Tuning Llama2 for Autonomous Agents

Rahul Parundekar

A.I. Hero, Inc.

In this talk, Rahul Parundekar, founder of A.I. Hero, Inc., dives deep into the practicalities and nuances of making LLMs more effective and efficient. He'll share hard-earned lessons from the trenches of LLMOps on Kubernetes, covering everything from the critical importance of data quality to the choice of fine-tuning techniques such as LoRA and QLoRA. Rahul will share insights into the quirks of fine-tuning LLMs like Llama2, the need to look beyond loss metrics and benchmarks when judging model performance, and the pivotal role of iterative improvement through user feedback. He learned all of this while fine-tuning an LLM for retrieval-augmented generation and autonomous agents. Whether you're a seasoned AI professional or just starting out, this talk will equip you with knowledge ranging from when and why you should fine-tune, to long-term strategies for pushing the boundaries of what's possible with LLMs, to building a performant framework on top of Kubernetes for fine-tuning at scale.

About the Speaker

Rahul Parundekar is the founder of A.I. Hero, Inc. and a seasoned engineer and architect with over 15 years of experience in AI development, focusing on Machine Learning and Large Language Model Operations (MLOps and LLMOps). A.I. Hero automates mundane enterprise tasks through agents, using a framework for fine-tuning both open- and closed-source LLMs to enhance agent autonomy.

Combining Hugging Face Transformer Models and Image Data with FiftyOne

Jacob Marks, PhD

Voxel51

Datasets and models are the two pillars of modern machine learning, but connecting the two can be cumbersome and time-consuming. In this lightning talk, you will learn how the integration between Hugging Face and FiftyOne removes much of this friction, enabling more effective data-model co-development. By the end of the talk, you will be able to download and visualize datasets from the Hugging Face Hub with FiftyOne, apply state-of-the-art transformer models directly to your data, and easily share your datasets with others.

About the Speaker

Jacob Marks is a Machine Learning Engineer and Developer Evangelist at Voxel51, where he leads open source efforts in vector search, semantic search, and generative AI for the FiftyOne data-centric AI toolkit.

Prior to joining Voxel51, Jacob worked at Google X, Samsung Research, and Wolfram Research. In a past life, he was a theoretical physicist: in 2022, he completed his Ph.D. at Stanford, where he investigated quantum phases of matter.

Multi-Modal Visual Question Answering (VQA) using UForm tiny models with Milvus vector database

Christy Bergman

Zilliz

UForm is a multimodal AI library that helps you understand and search visual and textual content across many languages. UForm not only supports RAG chat use cases but is also capable of Visual Question Answering (VQA). Its compact, custom pre-trained transformer models can run anywhere from your server farm down to your laptop. I'll be giving a demo of RAG and VQA using the Milvus vector database.

About the Speaker

Christy Bergman is a passionate Developer Advocate at Zilliz. She previously worked in distributed computing at Anyscale and as a Specialist AI/ML Solutions Architect at AWS. Christy studied applied math, is a self-taught coder, and has published papers, including one at ACM RecSys. She enjoys hiking and bird watching.

Ash Vardanian

Unum Cloud

About the Speaker

Ash Vardanian is the founder of Unum Cloud. With a background in astrophysics, he now works primarily at the intersection of Theoretical Computer Science, High-Performance Computing, and AI Systems Design, on everything from GPU algorithms and SIMD Assembly to Linux kernel bypass technologies and multimodal perception.

Strategies for Enhancing the Adoption of Open Source Libraries: A Case Study on Albumentations.ai

Vladimir Iglovikov

Albumentations.ai

In this presentation, we explore key strategies for boosting the adoption of open source libraries, using Albumentations.ai as a case study. We will cover the importance of community engagement, continuous innovation, and comprehensive documentation in driving a project's success. Through the lens of Albumentations.ai's growth, attendees will gain insights into effective practices for promoting their own open source projects within the machine learning and broader developer communities.

About the Speaker

Vladimir Iglovikov is a co-creator of Albumentations.ai, a Kaggle Grandmaster, and an advocate for open source AI technology. With a Ph.D. in physics and extensive expertise in deep learning, he has made significant contributions to the field of machine learning.

Find a Meetup Near You

Join 12,000+ AI and ML enthusiasts who have already become members

The goal of the AI, Machine Learning, and Data Science Meetup network is to bring together a community of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of AI and complementary technologies. If that's you, we invite you to join the Meetup closest to your time zone.