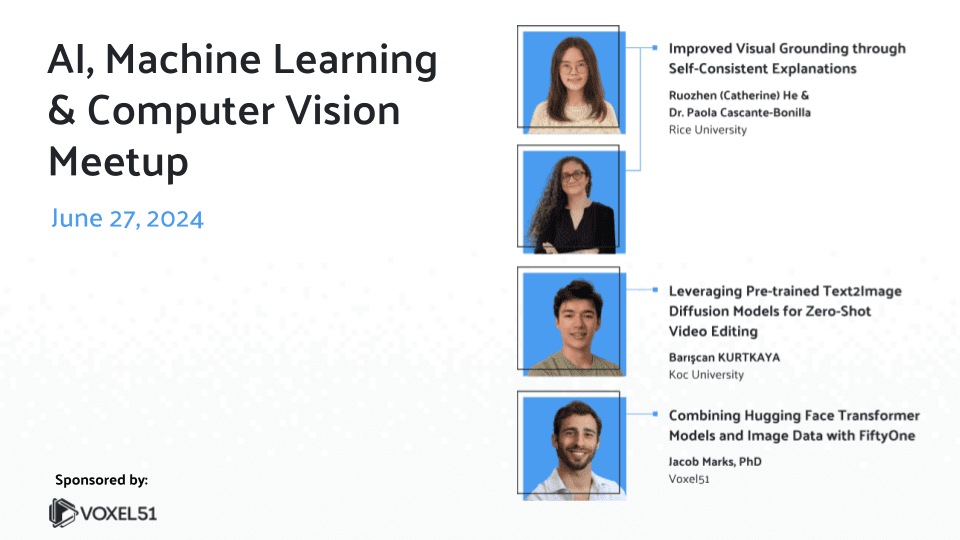

AI, Machine Learning and Computer Vision Meetup

June 27, 2024 at 10 AM Pacific

Register for the Zoom

Leveraging Pre-trained Text2Image Diffusion Models for Zero-Shot Video Editing

Barışcan Kurtkaya

KUIS AI Fellow at Koc University

Text-to-image diffusion models demonstrate remarkable editing capabilities in the image domain, especially since Latent Diffusion Models made diffusion models more scalable. Video editing, by contrast, still has much room for improvement, particularly given the relative scarcity of video datasets compared to image datasets. We will therefore discuss whether pre-trained text-to-image diffusion models can be used for zero-shot video editing without any fine-tuning stage. Finally, we will explore possible future work and interesting research ideas in the field.

About the Speaker

Barışcan Kurtkaya is a KUIS AI Fellow and a graduate student in the Department of Computer Science at Koc University. His research interests lie in exploring and leveraging the capabilities of generative models for 2D and 3D data, including scientific observations from space telescopes.

Improved Visual Grounding through Self-Consistent Explanations

Dr. Paola Cascante-Bonilla

Rice University

Ruozhen (Catherine) He

Rice University

Vision-and-language models that are trained to associate images with text have been shown to be effective for many tasks, including object detection and image segmentation. In this talk, we will discuss how to enhance vision-and-language models’ ability to localize objects in images by fine-tuning them for self-consistent visual explanations. We propose a method that augments text-image datasets with paraphrases using a large language model and employs SelfEQ, a weakly-supervised strategy that promotes self-consistency in visual explanation maps. This approach broadens the model’s working vocabulary and improves object localization accuracy, as demonstrated by performance gains on competitive benchmarks.

About the Speakers

Dr. Paola Cascante-Bonilla received her Ph.D. in Computer Science from Rice University in 2024, advised by Professor Vicente Ordóñez Román, working on Computer Vision, Natural Language Processing, and Machine Learning. She received a Master of Computer Science from the University of Virginia and a B.S. in Engineering from the Tecnológico de Costa Rica. Paola will join Stony Brook University (SUNY) as an Assistant Professor in the Department of Computer Science.

Ruozhen (Catherine) He is a first-year Computer Science PhD student at Rice University, advised by Prof. Vicente Ordóñez, focusing on efficient computer vision algorithms that learn from limited or multimodal supervision. She aims to leverage insights from neuroscience and cognitive psychology to develop interpretable algorithms that achieve human-level intelligence across versatile tasks.

Combining Hugging Face Transformer Models and Image Data with FiftyOne

Jacob Marks, PhD

Voxel51

Datasets and models are the two pillars of modern machine learning, but connecting the two can be cumbersome and time-consuming. In this lightning talk, you will learn how the integration between Hugging Face and FiftyOne simplifies this process, enabling more effective data-model co-development. By the end of the talk, you will be able to download and visualize datasets from the Hugging Face Hub with FiftyOne, apply state-of-the-art transformer models directly to your data, and share your datasets with others.

About the Speaker

Jacob Marks, PhD is a Machine Learning Engineer and Developer Evangelist at Voxel51, where he leads open source efforts in vector search, semantic search, and generative AI for the FiftyOne data-centric AI toolkit. Prior to joining Voxel51, Jacob worked at Google X, Samsung Research, and Wolfram Research.

Find a Meetup Near You

Join 12,000+ AI and ML enthusiasts who have already become members

The goal of the AI, Machine Learning, and Data Science Meetup network is to bring together a community of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of AI and complementary technologies. If that’s you, we invite you to join the Meetup closest to your timezone.