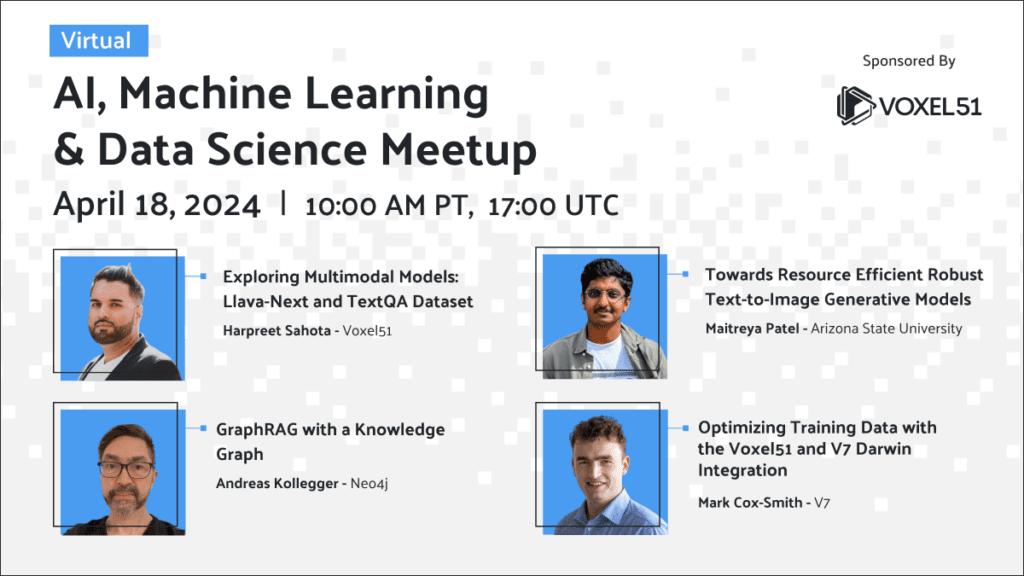

April 2024 AI, Machine Learning and Data Science Meetup

April 18, 2024 | 10 AM PT, 17:00 UTC

Register for the Zoom


Talks and Speakers

Towards Resource Efficient Robust Text-to-Image Generative Models

Maitreya Patel

Arizona State University

Text-to-image (T2I) diffusion models such as Stable Diffusion XL and DALL-E 3 achieve state-of-the-art (SOTA) performance on various compositional T2I benchmarks, but at the cost of significant computational resources. For instance, the unCLIP (i.e., DALL-E 2) stack comprises a T2I prior and a diffusion image decoder, and the T2I prior alone adds a billion parameters, increasing both the compute and the high-quality data requirements. To address these issues, Maitreya proposes ECLIPSE, a novel contrastive learning method that is both parameter- and data-efficient.
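The abstract describes ECLIPSE only as a contrastive learning method. For readers unfamiliar with contrastive objectives, here is a minimal pure-Python sketch of a generic CLIP-style symmetric InfoNCE loss: each text embedding is pulled toward its paired image embedding and pushed away from the other images in the batch, and vice versa. It is an illustration under stated assumptions, not ECLIPSE's actual training objective; the function names and the 0.07 temperature are illustrative choices.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax(row: List[float]) -> List[float]:
    """Numerically stable log-softmax of one row of logits."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def symmetric_info_nce(
    text_emb: List[Vector], image_emb: List[Vector], temperature: float = 0.07
) -> float:
    """Generic CLIP-style contrastive loss (illustrative; not ECLIPSE's exact objective).

    Pair i (text_emb[i], image_emb[i]) is the positive; every other pairing
    in the batch serves as a negative.
    """
    t = [l2_normalize(v) for v in text_emb]
    im = [l2_normalize(v) for v in image_emb]
    n = len(t)
    # logits[i][j] = cosine(text_i, image_j) / temperature
    logits = [
        [sum(a * b for a, b in zip(t[i], im[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Text -> image: row i should put its probability mass on column i.
    loss_t2i = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Image -> text: column j should put its probability mass on row j.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_i2t = -sum(log_softmax(cols[j])[j] for j in range(n)) / n
    return (loss_t2i + loss_i2t) / 2


texts = [[1.0, 0.0], [0.0, 1.0]]
print(symmetric_info_nce(texts, [[1.0, 0.0], [0.0, 1.0]]))  # matched pairs: near 0
print(symmetric_info_nce(texts, [[0.0, 1.0], [1.0, 0.0]]))  # mismatched: about 14.29
```

Matched pairs drive the loss toward zero, while a shuffled pairing is penalized heavily; the temperature controls how sharply that penalty grows.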

About the Speaker

Maitreya Patel is a PhD student at Arizona State University focusing on model performance and efficiency. Whether in model training or inference, Maitreya strives to find optimizations that make AI more accessible and powerful.

GraphRAG with a Knowledge Graph

Andreas Kollegger

Neo4j

Knowledge Graphs place information in context using graph structures to express local and global semantics. When used in a RAG context, particular access patterns emerge that map natural language to different graph data patterns. We’ll review both the model and the matching code.
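As a rough illustration of mapping a natural-language question onto a graph pattern, here is a minimal pure-Python sketch of one simple access pattern: link entity names mentioned in the question to graph nodes, then gather the facts within a fixed number of hops to hand to an LLM as context. This is not Neo4j's API or the code from the talk; the helper names, the substring-based entity linking, and the toy graph are assumptions. In a Neo4j deployment the traversal would typically be expressed as a Cypher query instead.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def build_adjacency(triples: List[Triple]) -> Dict[str, List[Triple]]:
    """Index every triple under both endpoints so traversal can follow edges either way."""
    adj: Dict[str, List[Triple]] = {}
    for s, r, o in triples:
        adj.setdefault(s, []).append((s, r, o))
        adj.setdefault(o, []).append((s, r, o))
    return adj


def link_entities(question: str, adj: Dict[str, List[Triple]]) -> List[str]:
    """Naive entity linking (assumption): a node matches if its name occurs in the question."""
    q = question.lower()
    return sorted(node for node in adj if node.lower() in q)


def retrieve_context(question: str, triples: List[Triple], hops: int = 1) -> List[str]:
    """Breadth-first walk out to `hops` edges from each linked entity; returns facts as text."""
    adj = build_adjacency(triples)
    seeds = link_entities(question, adj)
    seen_nodes: Set[str] = set(seeds)
    seen_facts: Set[Triple] = set()
    facts: List[Triple] = []
    frontier = deque((node, 0) for node in seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth == hops:
            continue  # don't expand beyond the hop limit
        for triple in adj[node]:
            if triple not in seen_facts:
                seen_facts.add(triple)
                facts.append(triple)
            s, _, o = triple
            neighbor = o if s == node else s
            if neighbor not in seen_nodes:
                seen_nodes.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return [f"{s} {r} {o}" for s, r, o in facts]


# Toy knowledge graph (illustrative data only).
example_kg: List[Triple] = [
    ("Neo4j", "IS_A", "graph database"),
    ("Neo4j", "SUPPORTS", "Cypher"),
    ("Cypher", "IS_A", "query language"),
]
print(retrieve_context("What is Neo4j?", example_kg, hops=1))
# → ['Neo4j IS_A graph database', 'Neo4j SUPPORTS Cypher']
```

Raising `hops` to 2 would also pull in `Cypher IS_A query language`, which shows the trade-off between local and more global context that a GraphRAG retriever has to manage.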

About the Speaker

Andreas Kollegger is a founding member of Neo4j, now responsible for researching the use of Knowledge Graphs for GenAI applications.

Optimizing Training Data with the Voxel51 and V7 Darwin Integration

Mark Cox-Smith

V7

One of the most expensive parts of a machine learning project is obtaining high-quality training data. In this talk, Mark will discuss how the integration between Voxel51 and the V7 Darwin platform can help you optimize the subset of data to be labeled, with the goal of reducing costs whilst maintaining quality.
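The abstract doesn't say how the subset to label is chosen. One common, generic strategy is greedy k-center (farthest-point) selection over sample embeddings, which spends the labeling budget on samples that cover the data rather than on near-duplicates. The pure-Python sketch below illustrates that idea under stated assumptions; it is not the FiftyOne or V7 Darwin API, and the `select_diverse_subset` helper and the toy points are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def select_diverse_subset(embeddings: List[Vector], budget: int) -> List[int]:
    """Hypothetical helper: greedy k-center (farthest-point) selection.

    Repeatedly picks the sample farthest from every sample chosen so far,
    so a small labeling budget spreads across the embedding space.
    Returns the indices of the samples to send for labeling, in pick order.
    """
    if not embeddings or budget <= 0:
        return []
    budget = min(budget, len(embeddings))
    chosen = [0]  # seed with an arbitrary sample (here, the first one)
    chosen_set = {0}
    # nearest[i] = distance from sample i to its closest already-chosen sample
    nearest = [math.dist(e, embeddings[0]) for e in embeddings]
    while len(chosen) < budget:
        # Pick the not-yet-chosen sample that is worst covered by the current subset.
        next_idx = max(
            (i for i in range(len(embeddings)) if i not in chosen_set),
            key=lambda i: nearest[i],
        )
        chosen.append(next_idx)
        chosen_set.add(next_idx)
        # Adding a sample can only shrink each point's distance to the chosen set.
        for i, e in enumerate(embeddings):
            nearest[i] = min(nearest[i], math.dist(e, embeddings[next_idx]))
    return chosen


# Two tight clusters plus an outlier (toy 2-D "embeddings").
points = [(0.0, 0.0), (0.1, 0.0), (10.0, 0.0), (10.0, 0.1), (0.0, 10.0)]
print(select_diverse_subset(points, 3))  # → [0, 3, 4], one pick per region
```

With a budget of three, the near-duplicate samples in each cluster are skipped, which is the kind of saving the talk targets when only part of a dataset can be sent for annotation.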

About the Speaker

Mark Cox-Smith is a Principal Solutions Architect at V7 where he helps customers to connect their labeling workflows into their MLOps stack.

Exploring Multimodal Models: LLaVA-NeXT and the TextQA Dataset

Harpreet Sahota

Voxel51

In this session, you’ll get hands-on with the newest LLaVA model, LLaVA-NeXT! You’ll learn how to use FiftyOne to visually vibe-check the performance of the Vicuna-7B and Mistral-7B backbone models on the TextQA dataset.

About the Speaker

Harpreet Sahota is a hacker-in-residence and machine learning engineer with a passion for deep learning and generative AI. He’s got a deep interest in RAG, Agents, and Multimodal AI.

Find a Meetup Near You

Join 12,000+ AI and ML enthusiasts who have already become members

The goal of the AI, Machine Learning, and Data Science Meetup network is to bring together a community of data scientists, machine learning engineers, and open source enthusiasts who want to share and expand their knowledge of AI and complementary technologies. If that’s you, we invite you to join the Meetup closest to your timezone.