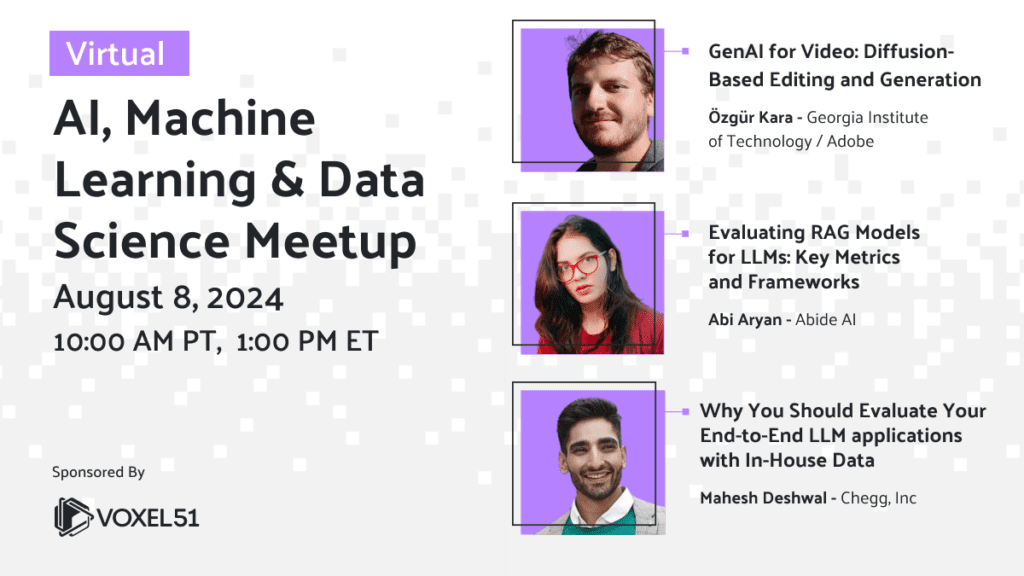

AI, Machine Learning and Computer Vision Meetup

Aug 8, 2024 at 10 AM Pacific

Register for the Zoom

GenAI for Video: Diffusion-Based Editing and Generation

Özgür Kara

Georgia Institute of Technology / Adobe

Diffusion-based generative AI models have recently gained popularity thanks to their wide range of applications in the image domain. Attention is now turning to video, which is ubiquitous in real-world applications. In this talk, we will discuss the future of GenAI for video, highlighting recent advances and exploring their potential impact on video editing and generation. We will also examine the challenges and opportunities these technologies present and offer insights into how they could transform the video industry.

About the Speaker

Özgür Kara is a PhD student in the Computer Science Department at the University of Illinois at Urbana-Champaign. He earned his Bachelor’s degree in Electrical and Electronics Engineering from Boğaziçi University. His research focuses on generative AI and computer vision, particularly their applications to video.

Evaluating RAG Models for LLMs: Key Metrics and Frameworks

Abi Aryan

Abide AI

Evaluating model performance is key to ensuring the effectiveness and reliability of LLMs. In this talk, we will look at RAG evaluation metrics and frameworks and the various approaches to assessing model performance. We will discuss key metrics such as relevance, diversity, coherence, and truthfulness, and examine evaluation frameworks ranging from traditional benchmarks to domain-specific assessments, highlighting their strengths, limitations, and implications for real-world applications.

About the Speaker

Abi Aryan is the founder of Abide AI and a machine learning engineer with over eight years of experience building and deploying machine learning models in production for recommender systems, computer vision, and natural language processing, across industries such as e-commerce, insurance, and media and entertainment. Previously, she was a visiting research scholar at the Cognitive Sciences Lab at UCLA, where she worked on developing intelligent agents. She has also authored research papers on AutoML, multi-agent systems, and LLM cost modeling and evaluation, and is currently writing LLMOps: Managing Large Language Models in Production for O’Reilly.

Why You Should Evaluate Your End-to-End LLM Applications with In-House Data

Mahesh Deshwal

Chegg, Inc.

This talk discusses end-to-end NLP evaluation, focusing on key areas, common pitfalls, and how production evaluation systems work. It also explores how to fine-tune in-house LLMs as judges on custom data for more accurate performance assessments.

About the Speaker

Mahesh Deshwal is a Data Scientist and AI researcher with over 5.5 years of experience using ML and AI to solve business problems, particularly in computer vision, NLP, recommendation, and personalization. The author of the paper PHUDGE and an active open-source contributor, he delivers end-to-end solutions, from gathering user requirements to deploying scalable models with MLOps.

Find a Meetup Near You

Join 12,000+ AI and ML enthusiasts who have already become members

The goal of the AI, Machine Learning, and Data Science Meetup network is to bring together a community of data scientists, machine learning engineers, and open-source enthusiasts who want to share and expand their knowledge of AI and complementary technologies. If that’s you, we invite you to join the Meetup closest to your time zone.