A guide to downloading, visualizing, and evaluating models on the ActivityNet dataset using FiftyOne

Year over year, the ActivityNet challenge has pushed the boundaries of what video understanding models are capable of. Training a machine learning model to classify video clips and detect actions performed in videos is no easy task. Thankfully, the teams behind datasets like Kinetics and ActivityNet have provided massive amounts of video data to the computer vision community to assist in building high-quality video models.

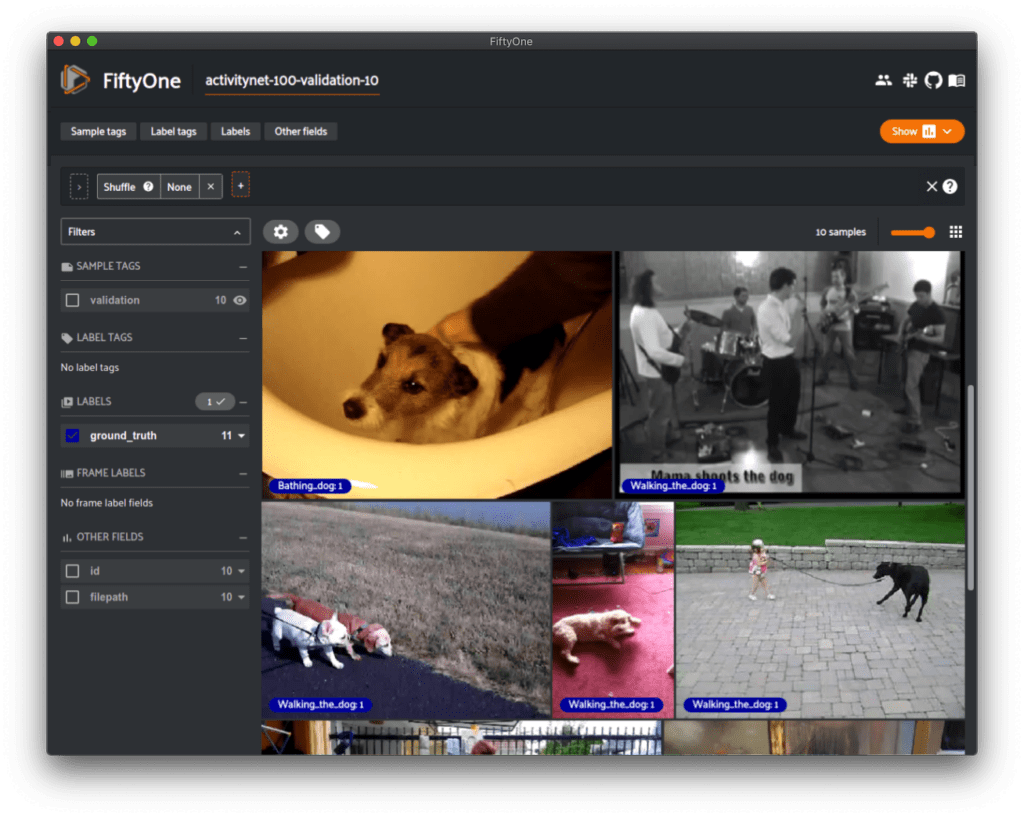

We’re announcing a partnership with the ActivityNet team to make the ActivityNet datasets natively available in the FiftyOne Dataset Zoo, so you can access and visualize the dataset more easily than ever before, and evaluate and analyze models trained on it. FiftyOne is an open-source tool designed for dataset curation and model analysis. Downloading a subset of ActivityNet is now as easy as:

```python
import fiftyone as fo
import fiftyone.zoo as foz

dataset = foz.load_zoo_dataset(
    "activitynet-200",
    classes=["Walking the dog"],
    split="validation",
    max_samples=10,
)
```

Setup

To run the examples in this post, you need to install FiftyOne.

```shell
pip install fiftyone
```

The ActivityNet Dataset

There are two popular versions of ActivityNet, one with 100 activity classes and a newer version with 200 activity classes.

These versions contain 9,682 and 19,994 videos, respectively, across their training, testing, and validation splits, with over 8k and 23k labeled activity instances.

These labels are temporal activity detections, each represented by a start and stop time for the segment and a label.
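To make the label format concrete, here is a minimal, library-independent sketch of a temporal detection as a start time, a stop time, and a label, along with a conversion to a frame range. The `Segment` class, the `to_frame_support()` helper, and the rounding rule are illustrative assumptions; they are not part of ActivityNet or FiftyOne.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A hypothetical temporal activity label: a class name plus an interval in seconds."""

    label: str
    start: float  # seconds from the beginning of the video
    stop: float   # seconds from the beginning of the video

    @property
    def duration(self) -> float:
        return self.stop - self.start


def to_frame_support(segment: Segment, fps: float) -> list:
    """Map the interval to 1-based [first, last] frame numbers.

    The rounding rule here is an assumption chosen for illustration.
    """
    first = int(round(segment.start * fps)) + 1
    last = int(round(segment.stop * fps)) + 1
    return [first, last]


seg = Segment(label="Walking the dog", start=2.0, stop=7.5)
print(seg.duration)
print(to_frame_support(seg, fps=30.0))
```

Keeping both representations in mind is useful because annotations are often given in seconds, while video tooling frequently indexes by frame.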

Downloading the Dataset

Downloading the entirety of ActivityNet requires filling out this form to gain access to the dataset. However, many use cases involve only specific subsets of ActivityNet. For example, you may be training a model specifically to detect the class “Bathing dog”.

The integration between FiftyOne and ActivityNet means that the dataset can now be accessed through the FiftyOne Dataset Zoo. Additionally, it makes it easier than ever to download specific subsets of ActivityNet directly from YouTube. You can download all samples of the class “Bathing dog” from the above example like so:

```python
import fiftyone.zoo as foz

dataset = foz.load_zoo_dataset(
    "activitynet-200",
    classes=["Bathing dog"],
)
```

Other useful parameters include split to specify which dataset split to download, max_samples if you are only interested in a subset of the dataset, max_duration to define the maximum length of the videos you want to download, and more.

If you are working with the full ActivityNet dataset, you can use the source_dir parameter to point to the location of the dataset on disk and easily load it into FiftyOne to visualize it and analyze your models.

```python
dataset = foz.load_zoo_dataset("activitynet-100", source_dir="/path/to/dataset")
```

ActivityNet Model Evaluation

In this section, we cover how to add your custom model predictions to a FiftyOne dataset and evaluate temporal detections following the ActivityNet evaluation protocol.

Adding Model Predictions to Dataset

Any custom labels and metadata can be easily added to a FiftyOne dataset. In this case, we need to populate a TemporalDetections field named predictions with the model predictions for each sample. We can then visualize the predictions in the FiftyOne App.

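```python
import fiftyone as fo

# `model` stands in for your own model; predict() is assumed to return
# a list of dicts with "label" and "segment" keys
for sample in dataset:
    predictions = model.predict(sample.filepath)

    detections = []
    for prediction in predictions:
        # `support` is a [first, last] frame range; for timestamps in
        # seconds, use fo.TemporalDetection.from_timestamps() instead
        detection = fo.TemporalDetection(
            label=prediction["label"],
            support=prediction["segment"],
        )
        detections.append(detection)

    sample["predictions"] = fo.TemporalDetections(
        detections=detections,
    )
    sample.save()

# Visualize the predictions in the FiftyOne App
session = fo.launch_app(dataset)
```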

Computing mAP

The ActivityNet evaluation protocol used to evaluate temporal activity detection models trained on the dataset is similar to the object detection evaluation protocol for the COCO dataset. Both involve computing the mean average precision of detections, either temporal or spatial, across the dataset by matching predictions with ground truth annotations.
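The matching step can be sketched in plain Python. This is a simplified, single-class illustration rather than FiftyOne's or the official ActivityNet implementation: the function names, the greedy matching rule, and the toy segments are assumptions made for the example. The thresholds list covers temporal IoU values from 0.5 to 0.95 in steps of 0.05, the range over which the ActivityNet challenge averages mAP.

```python
def temporal_iou(a, b):
    """Intersection over union of two (start, stop) intervals in seconds."""
    intersection = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - intersection
    return intersection / union if union > 0 else 0.0


def match_predictions(preds, gts, iou_thresh):
    """Greedily match predictions to ground truth segments.

    preds: list of ((start, stop), confidence) tuples
    gts: list of (start, stop) tuples
    Returns ("tp"/"fp" per prediction in descending confidence order,
    number of unmatched ground truth segments).
    """
    ranked = sorted(preds, key=lambda p: p[1], reverse=True)
    used = set()
    outcomes = []
    for segment, _confidence in ranked:
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(gts):
            if idx in used:
                continue  # each ground truth segment can be matched once
            iou = temporal_iou(segment, gt)
            if iou > best_iou:
                best_iou, best_idx = iou, idx
        if best_idx is not None and best_iou >= iou_thresh:
            used.add(best_idx)
            outcomes.append("tp")
        else:
            outcomes.append("fp")
    return outcomes, len(gts) - len(used)


# Temporal IoU thresholds 0.50, 0.55, ..., 0.95
thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]

# Toy data for a single class
gts = [(0.0, 10.0), (20.0, 30.0)]
preds = [((1.0, 10.0), 0.9), ((21.0, 35.0), 0.6), ((50.0, 60.0), 0.3)]

for thresh in (0.5, 0.75):
    print(thresh, match_predictions(preds, gts, thresh))
```

A stricter threshold turns loosely localized predictions into false positives, which is why averaging over a range of thresholds rewards precise segment boundaries.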

FiftyOne reimplements the ActivityNet mAP computation; the specifics can be found here. After adding your predictions to the FiftyOne dataset, call the evaluate_detections() method:

```python
results = dataset.evaluate_detections(
    "predictions",
    gt_field="ground_truth",
    method="activitynet",
    eval_key="eval",
    compute_mAP=True,
)

print(results.mAP())
```

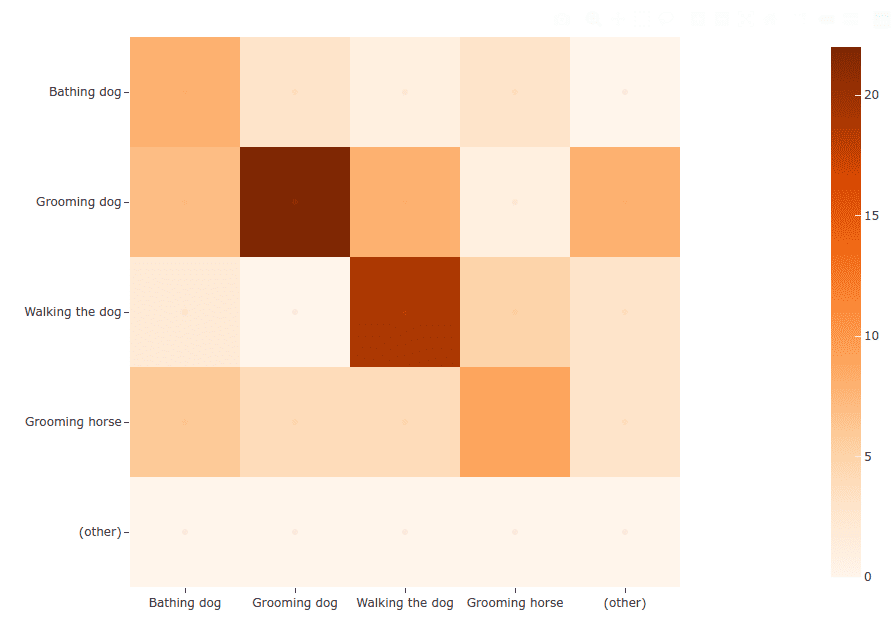

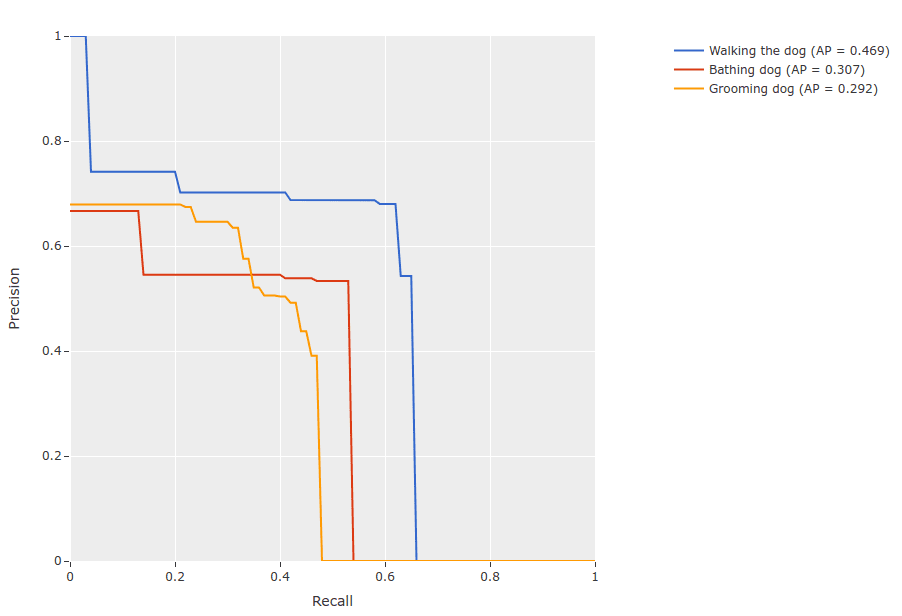

The evaluation results object can also be used to compute PR curves and confusion matrices of your model’s performance.

```python
results.plot_pr_curves()
results.plot_confusion_matrix()
```

Additional metrics can be computed using the DETAD tool introduced to diagnose the performance of action detection models on ActivityNet and THUMOS14. A follow-up post will explore how to incorporate DETAD analysis into our FiftyOne workflow to analyze models trained on ActivityNet.

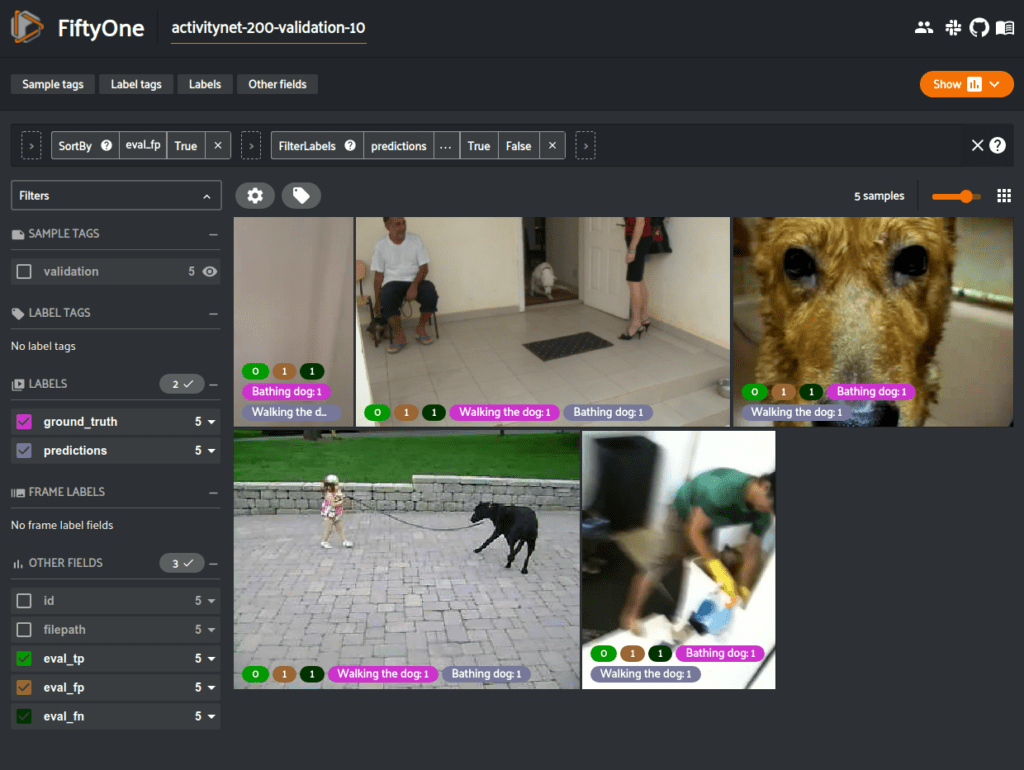

Analyzing Results

One of the primary benefits of the FiftyOne implementation of the ActivityNet evaluation protocol is that it stores not only dataset-wide metrics like mAP but also individual label-level results. Specifying the eval_key parameter when calling evaluate_detections() will populate fields on our dataset containing the individual sample- and label-level results of whether a predicted or ground truth temporal detection was a true positive, false positive, or false negative.
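As a rough illustration of what label-level results make possible, the sketch below tallies per-label outcomes into precision and recall. It assumes the outcomes have already been pulled out of the dataset into a plain list of "tp", "fp", and "fn" strings; the `precision_recall()` helper and the sample list are hypothetical.

```python
from collections import Counter


def precision_recall(outcomes):
    """Compute precision and recall from a list of "tp"/"fp"/"fn" strings."""
    counts = Counter(outcomes)
    tp, fp, fn = counts["tp"], counts["fp"], counts["fn"]
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical label-level outcomes for one class
outcomes = ["tp", "tp", "fp", "fn", "tp", "fn"]
precision, recall = precision_recall(outcomes)
print(precision, recall)
```

Because the outcomes are kept per label, the same tally can be restricted to a single class, video, or confidence range.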

Using the querying capabilities of FiftyOne, we can dig into the model results and explore specific cases where the model performed well or poorly. This analysis quickly shows which types of data the model struggles with, and therefore which types we need more of in the training set. For example, in one line we can find all predictions that had high confidence but were evaluated as false positives.

```python
from fiftyone import ViewField as F

view = dataset.filter_labels(
    "predictions",
    (F("confidence") > 0.8) & (F("eval") == "fp"),
)
```

From here, you can use the to_clips() method to convert the view of full videos into a view containing one clip per temporal detection in a given field, such as our TemporalDetections predictions.

```python
clips_view = view.to_clips("predictions")

# Update the view in the App
session.view = clips_view
```

The samples in these evaluation views are videos that the model was not able to correctly understand. One way to resolve this is to train on more samples similar to those that the model failed on. If you are interested in using this workflow in your own projects, check out the compute_similarity() method of the FiftyOne Brain.

Summary

ActivityNet is one of the leading video datasets in the computer vision community and the basis of the popular yearly ActivityNet challenge. The integration between ActivityNet and FiftyOne makes it easier than ever to access the dataset, visualize it in the FiftyOne App, and analyze your model performance to figure out how to build better activity classification and detection models.