Welcome to our weekly FiftyOne tips and tricks blog where we recap interesting questions and answers that have recently popped up on Slack, GitHub, Stack Overflow, and Reddit.

Wait, what’s FiftyOne?

FiftyOne is an open source machine learning toolset that enables data science teams to improve the performance of their computer vision models by helping them curate high quality datasets, evaluate models, find mistakes, visualize embeddings, and get to production faster.

- If you like what you see on GitHub, give the project a star

- Get started! We’ve made it easy to get up and running in a few minutes

- Join the FiftyOne Slack community, we’re always happy to help

Ok, let’s dive into this week’s tips and tricks!

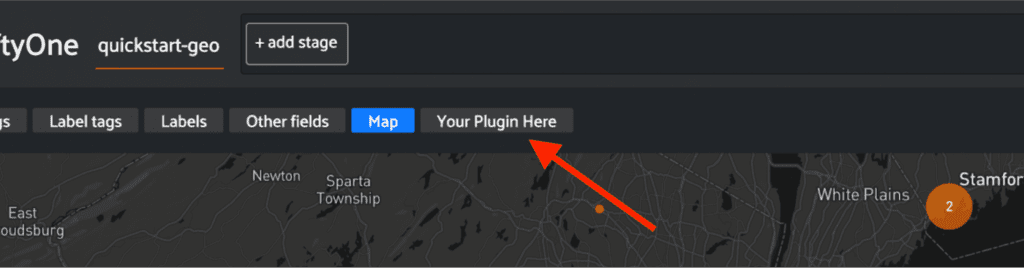

Custom Plugins for the FiftyOne App

Community Slack member Gerard Corrigan asked,

“I’d like to integrate FiftyOne with another app. How can I do that?”

With the latest FiftyOne 0.17.0 release you can now customize and extend the FiftyOne App’s behavior. For example, if you need a unique way to visualize individual samples, plot entire datasets, or fetch FiftyOne data, a custom plugin just might be the ticket!

Learn more about how to develop custom plugins for the FiftyOne App on GitHub.

Exporting visualization options from the FiftyOne App

Community Slack member Murat Aksoy asked,

“Is there a way to export videos from the FiftyOne App? Specifically after an end-user makes adjustments to visualization options such as which labels to show, opacity, etc?”

The best workflow to accomplish this would be:

- Interactively select/filter in the FiftyOne App in IPython/notebook

- Press the “bookmark” icon to save your current filters into the view bar

- Render the labels for the current view from Python via:

```python
session.view.draw_labels(...)
```

The `draw_labels()` method provides a bunch of options for configuring the look-and-feel of the exported labels, including font sizes, transparency, etc.

In a nutshell, you can use the FiftyOne App to visually filter, but you must use `draw_labels()` in Python to trigger the rendering and provide any look-and-feel customizations you want, like transparency.

Learn more about the `draw_labels()` method in the FiftyOne Docs.
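The workflow above can be wrapped in a small helper. This is only a sketch: `render_current_view` is a hypothetical name, `session` is assumed to be the object returned by `fo.launch_app()`, and the fallback assumes `session.view` is `None` when no filters have been saved to the view bar.

```python
def render_current_view(session, output_dir, draw_config=None):
    """Render the labels of whatever is currently loaded in the App session.

    `session` is assumed to be a FiftyOne session and `draw_config` an
    optional rendering configuration passed through to `draw_labels()`.
    """
    view = session.view
    if view is None:
        # No view has been saved from the App: fall back to the full dataset
        view = session.dataset

    # Hand off to draw_labels(), which writes the rendered media to output_dir
    return view.draw_labels(output_dir, config=draw_config)
```

To customize the rendering, you would pass something like `foua.DrawConfig({"font_size": 36, "bbox_linewidth": 5})` as `draw_config`, where `foua` is `fiftyone.utils.annotations`. Check the option names against the docs for your FiftyOne version.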

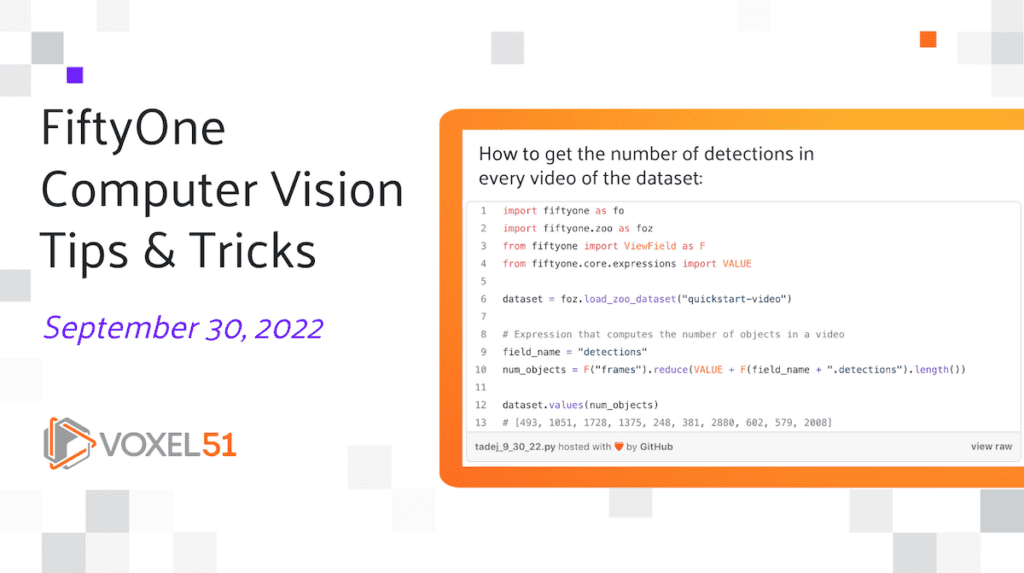

Retrieving aggregations per video

Community Slack member Tadej Svetina asked,

“I have a video dataset and I am interested in getting some aggregations (let’s say count of detections) per video. How do I do that?”

You can actually use the `values()` aggregation for this, along with some pretty advanced view expression usage. Specifically, you can reduce the frames in each video to a single value by summing the number of detections in a given field across all of the video’s frames. For example, here’s a way to get the number of detections in every video of the dataset:

```python
import fiftyone as fo
import fiftyone.zoo as foz
from fiftyone import ViewField as F
from fiftyone.core.expressions import VALUE

dataset = foz.load_zoo_dataset("quickstart-video")

# Expression that computes the number of objects in a video
field_name = "detections"
num_objects = F("frames").reduce(VALUE + F(field_name + ".detections").length())

dataset.values(num_objects)
# [493, 1051, 1728, 1375, 248, 381, 2880, 602, 579, 2008]
```

Or you can modify this code slightly to get a dictionary mapping of video IDs to the number of objects in each video:

```python
id_num_objects_map = dict(zip(*dataset.values(["id", num_objects])))
```

Learn more about how to use `reduce()` in the FiftyOne Docs.
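If the `reduce()` expression feels opaque, here is a plain-Python sketch of the same fold, with no FiftyOne dependency. The nested dictionaries are a hypothetical stand-in for video samples and their frames, not FiftyOne's actual data model. The accumulator `total` plays the role that `VALUE` plays in the expression, starting from 0.

```python
from functools import reduce

# Hypothetical stand-in for two videos: each frame holds a list of detections
videos = {
    "video_a": [
        {"detections": ["car", "person"]},
        {"detections": ["car"]},
        {"detections": []},
    ],
    "video_b": [
        {"detections": ["dog"]},
    ],
}


def count_objects(frames):
    """Fold over the frames, adding each frame's detection count to a running total."""
    return reduce(lambda total, frame: total + len(frame["detections"]), frames, 0)


# One count per video, analogous to dataset.values(num_objects)
counts = [count_objects(frames) for frames in videos.values()]

# Mapping of video ID to count, analogous to id_num_objects_map
id_to_count = {video_id: count_objects(frames) for video_id, frames in videos.items()}

print(counts)
print(id_to_count)
```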

Working with polylines and labels using the CVAT integration

Community Slack member Guillaume Dumont asked,

“I am using the CVAT integration with a local CVAT server and somehow, in the cases where the polylines have the same label_id, the last one would override the previous one when downloading annotations. This ended up leaving a single polyline where I expected there to be many. Any ideas what’s going on here?”

When calling `to_polylines()`, make sure to use `mask_types="thing"`, which gives each segment a unique ID, rather than the default `mask_types="stuff"`. You can also directly annotate semantic segmentation masks and let FiftyOne manage the conversion to polylines for you.

Here’s the relevant snippet from the Docs regarding `mask_types`:

`mask_types` (`"stuff"`): whether the classes are “stuff” (amorphous regions of pixels) or “thing” (connected regions, each representing an instance of the thing). Can be any of the following:

- `"stuff"` if all classes are stuff classes

- `"thing"` if all classes are thing classes

- a dict mapping pixel values to `"stuff"` or `"thing"` for each class

Learn more about the CVAT integration, `to_polylines()`, and semantic segmentation in the FiftyOne Docs.
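To see why the two settings produce different numbers of polylines, here is a conceptual sketch in plain Python. It is not FiftyOne's implementation: the `mask_to_segments` helper is hypothetical, and it assumes pixel value 0 is background and that regions are 4-connected.

```python
from collections import deque


def mask_to_segments(mask, mask_type="stuff"):
    """Return a list of (pixel_value, pixels) segments for a 2D integer mask.

    "stuff": one segment per non-zero pixel value.
    "thing": one segment per 4-connected region of each non-zero pixel value.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0

    if mask_type == "stuff":
        by_value = {}
        for y in range(height):
            for x in range(width):
                value = mask[y][x]
                if value != 0:
                    by_value.setdefault(value, []).append((y, x))
        return sorted(by_value.items())

    if mask_type != "thing":
        raise ValueError("mask_type must be 'stuff' or 'thing'")

    seen = set()
    segments = []
    for y in range(height):
        for x in range(width):
            value = mask[y][x]
            if value == 0 or (y, x) in seen:
                continue
            # Breadth-first flood fill over same-valued neighbours
            region = []
            queue = deque([(y, x)])
            seen.add((y, x))
            while queue:
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (
                        0 <= ny < height
                        and 0 <= nx < width
                        and (ny, nx) not in seen
                        and mask[ny][nx] == value
                    ):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            segments.append((value, region))
    return segments


# Two separate blobs that share the same pixel value (class 1)
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

stuff_segments = mask_to_segments(mask, "stuff")
thing_segments = mask_to_segments(mask, "thing")
print(len(stuff_segments), len(thing_segments))
```

With "stuff", both blobs collapse into a single segment for the class, which mirrors the single-polyline symptom described in the question. With "thing", each blob is kept as its own instance.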

Exporting only landscape orientation images

Community Slack member Stan asked,

“How would I go about exporting only images in a certain orientation, for example landscape vs portrait? I have a script to tag images as landscape by checking if width is greater than height and then removing all images and annotations for the images that are not in the landscape orientation. What would be the FiftyOne approach for this?”

Here’s a way to isolate the landscape images and then remove all other samples, using the quickstart dataset as an example:

```python
import fiftyone as fo
import fiftyone.zoo as foz
from fiftyone import ViewField as F

dataset = foz.load_zoo_dataset("quickstart")
dataset.compute_metadata()

# Create a view that only contains landscape images
view = dataset.match(F("metadata.width") > F("metadata.height"))
print(view)  # note that size shrunk from 200 to 147 samples

# Visualize only landscape images in the App
session = fo.launch_app(view)

# If you want to delete the portrait images from the dataset
view.keep()
```

Learn more about the FiftyOne Dataset Zoo quickstart dataset in the FiftyOne Docs.
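One detail worth noting is that the match expression uses a strict comparison, so square images are not treated as landscape. A plain-Python sketch of the same predicate makes this explicit. The records below are hypothetical stand-ins for sample metadata.

```python
def is_landscape(width, height):
    """Strictly wider than tall, mirroring the width > height match expression."""
    return width > height


# Hypothetical (filename, width, height) records standing in for sample metadata
images = [
    ("a.jpg", 640, 480),  # landscape
    ("b.jpg", 480, 640),  # portrait
    ("c.jpg", 512, 512),  # square: excluded by the strict comparison
]

landscape = [name for name, width, height in images if is_landscape(width, height)]
print(landscape)
```

If square images should count as landscape in your workflow, the comparison would need to be `>=` instead.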
